Oracle Sorting: Much Less Memory Required!

We recently had a performance issue on a large production databbase, and I tracked the problem down to a monstrous sort. The sql required a big hash join, but even worse, it had to sort over a billion rows. Given the fields involved, I estimated the data being sorted at 100 GB.

I assumed that Oracle would need the same size—about 100 GB of PGA memory, to do this sort. This seemed impossible—how could this ever work? It seemed like our server would never have that much spare memory available.

Well, I was right, and also wrong. It’s true that our server never had that much memory available, but it didn’t matter, since it didn’t need that much.

Oracle’s Tricky MethodIt turns out that efficient sorts—even of huge data sets, only require a modest amount of memory. In my particular case, Oracle only needed 100 MB—about 1000x less than I thought. Oracle doesn’t need to put the entire data set in memory; rather, it uses a clever method to accomplish the sort in parts. There are dozens of different algorithms that allow efficient sorting of huge amounts of data. They accomplish this by working on small sets of data at a time, and putting the intermediate results into “buckets,” sort of like a hashing routine.

Oracle keeps track of how it handles big sorts. Look in the view, V$Sql_Workarea. It classifies the sorts as either Optimal, One Pass, or Multi Pass. Here’s the key: Optimal and One Pass will work fine; only the Multi-Pass ones will have big degradation. It’s called, “One Pass,” because Oracle has to do one more pass through the data.

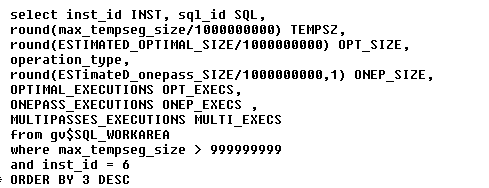

Here’s one way to display the current sorts. The output below has been slightly changed (sql_id’s modified).

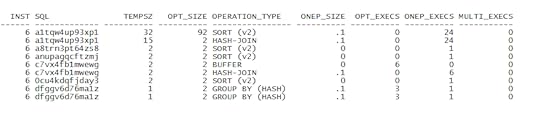

Recent Sorts

Works Surprisingly Well

In this example shown above, only one sql potentially required a big sort area. Note that Oracle performed 24 One Pass executions, and zero “Multi” executions. In other words, performance will likely be fine.

I admit I was surprised by the sophistication of Oracle.