165 pages, ebook

Published June 1, 2024

In Trump’s America, there will be hard choices for everyone, including the billionaires. Though it may be less hard for them. Vance has said he wants to deregulate crypto and unshackle AI. He’s said he’d dismantle Biden’s attempts to place safeguards around AI development.

He said the US needs to double its energy production, partly to fuel artificial intelligence. Trump said he will fast-track the approvals for new power plants, which companies can site next to their plants, something not currently possible under regulations. Worryingly, he declared that companies will be able to fuel those power plants with anything they want, and have coal as a backup, “good, clean coal”.

So far, Aschenbrenner's predictions seem remarkably accurate.

"American national security must come first, before the allure of free-flowing Middle Eastern cash, arcane regulation, or even, yes, admirable climate commitments"

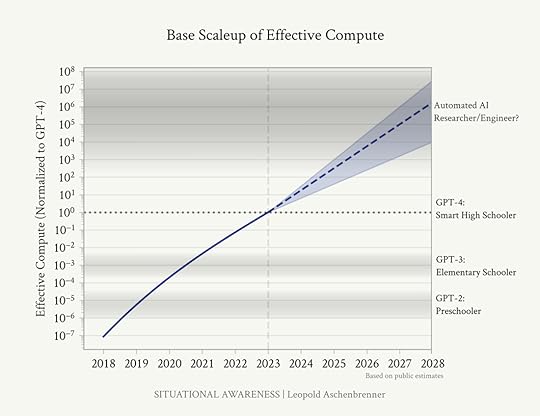

One neat way to think about this is that the current trend of AI progress is proceeding at roughly 3x the pace of child development. Your 3x-speed-child just graduated high school; it’ll be taking your job before you know it!

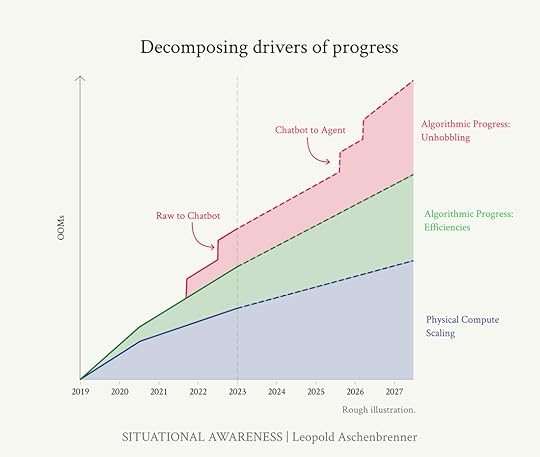

Or consider adaptive compute: Llama 3 still spends as much compute on predicting the “and” token as it does the answer to some complicated question, which seems clearly suboptimal.

GPT-4 has the raw smarts to do a decent chunk of many people’s jobs, but it’s sort of like a smart new hire that just showed up 5 minutes ago.

We’d be able to run millions of copies (and soon at 10x+ human speed) of the automated AI researchers.

Imagine 1000 automated AI researchers spending a month-equivalent checking your code and getting the exact experiment right before you press go. I’ve asked some AI lab colleagues about this and they agreed: you should pretty easily be able to save 3x-10x of compute on most projects merely if you could avoid frivolous bugs, get things right on the first try, and only run high value-of-information experiments.

The superintelligence we get by the end of it could be quite alien. We’ll have gone through a decade or more of ML advances during the intelligence explosion, meaning the architectures and training algorithms will be totally different (with potentially much riskier safety properties).

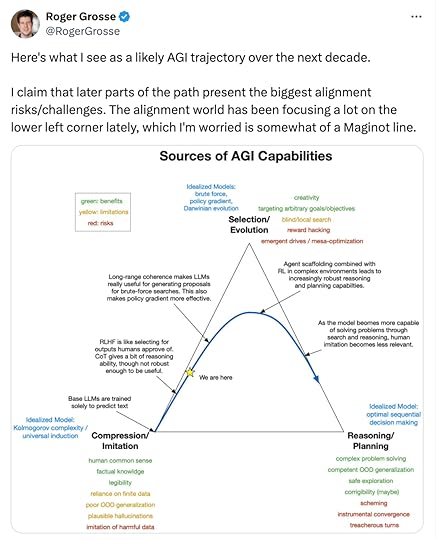

RLHF relies on humans being able to understand and supervise AI behavior, which fundamentally won’t scale to superhuman systems.

By default, it may well learn to lie, to commit fraud, to deceive, to hack, to seek power, and so on.

The primary problem is that for whatever you want to instill the model (including ensuring very basic things, like “follow the law”!) we don’t yet know how to do that for the very powerful AI systems we are building very soon.

... What’s more, I expect that within a small number of years, these AI systems will be integrated in many critical systems, including military systems (failure to do so would mean complete dominance by adversaries).

in the next 12-24 months, we will develop the key algorithmic breakthroughs for AGI, and promptly leak them to the CCP.

But the AI labs are developing the algorithmic secrets—the key technical breakthroughs, the blueprints so to speak—for the AGI right now (in particular, the RL/self-play/synthetic data/etc “next paradigm” after LLMs to get past the data wall). AGI-level security for algorithmic secrets is necessary years before AGI-level security for weights. These algorithmic breakthroughs will matter more than a 10x or 100x larger cluster in a few years.

a healthy lead will be the necessary buffer that gives us margin to get AI safety right, too ... the difference between a 1-2 year and 1-2 month lead will really matter for navigating the perils of superintelligence

The binding constraint on the largest training clusters won’t be chips, but industrial mobilization—perhaps most of all the 100GW of power for the trillion-dollar cluster. But if there’s one thing China can do better than the US, it’s building stuff.

What US/China AI race folk sound like to me:

There are superintelligent, super technologically advanced aliens coming towards Earth at 0.5c. We don't know anything about their values. The most important thing to do is make sure they land in the US before they land in China.

The intelligence explosion will be more like running a war than launching a product. ... I find it an insane proposition that the US government will let a random SF startup develop superintelligence. Imagine if we had developed atomic bombs by letting Uber just improvise.

It seems pretty clear: this should not be under the unilateral command of a random CEO. Indeed, in the private-labs-developing-superintelligence world, it’s quite plausible individual CEOs would have the power to literally coup the US government.