What do you think?

Rate this book

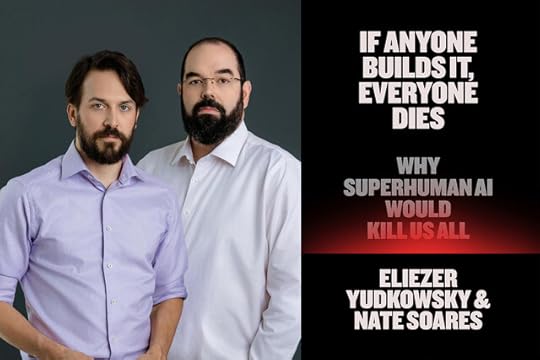

232 pages, Kindle Edition

First published January 1, 2025

"...In early 2023, hundreds of Artificial Intelligence scientists signed an open letter consisting of that one sentence. These signatories included some of the most decorated researchers in the field. Among them were Nobel laureate Geoffrey Hinton and Yoshua Bengio, who shared the Turing Award for inventing deep learning. We—Eliezer Yudkowsky and Nate Soares—also signed the letter, though we considered it a severe understatement.

It wasn’t the AIs of 2023 that worried us or the other signatories. Nor are we worried about the AIs that exist as we write this, in early 2025. Today’s AIs still feel shallow, in some deep sense that’s hard to describe. They have limitations, such as an inability to form new long-term memories. These shortcomings have been enough to prevent those AIs from doing substantial scientific research or replacing all that many human jobs.

Our concern is for what comes after: machine intelligence that is genuinely smart, smarter than any living human, smarter than humanity collectively. We are concerned about AI that surpasses the human ability to think, and to generalize from experience, and to solve scientific puzzles and invent new technologies, and to plan and strategize and plot, and to reflect on and improve itself. We might call AI like that “artificial superintelligence” (ASI), once it exceeds every human at almost every mental task."

"The months and years ahead will be a life-or-death test for all humanity. With this book, we hope to inspire individuals and countries to rise to the occasion.

In the chapters that follow, we will outline the science behind our concern, discuss the perverse incentives at play in today’s AI industry, and explain why the situation is even more dire than it seems. We will critique modern machine learning in simple language, and we will describe how and why current methods are utterly inadequate for making AIs that improve the world rather than ending it."

"If any company or group, anywhere on the planet, builds an artificial superintelligence using anything remotely like current techniques, based on anything remotely like the present understanding of AI, then everyone, everywhere on Earth, will die."

"Maybe an AI will be trained into superintelligence. Maybe many AIs will start contributing to AI research and build a superintelligent AI using some whole new paradigm. Maybe one AI will be tasked with self-modification and make itself smarter to the point of superintelligence. Or maybe something weirder happens; we don’t know. But the endpoint of modern AI development is the creation of a machine superintelligence with strange and alien preferences.

And then there will exist a machine superintelligence that wants to repurpose all the resources of Earth for its own strange ends. And it will want to replace us with all its favorite things. Which brings us to the question of whether it could..."