360 pages, Kindle Edition

First published March 16, 2021

[In 2016] Ed Boyton, a Princeton University professor who specialized in nascent technologies for sending information between machines and the human brain…told [a] private audience that scientists were approaching the point where they could create a complete map of the brain and then simulate it with a machine. The question was whether the machine, in addition to acting like a human, would actually feel what it was like to be human. This, they said, was the same question explored in Westworld.

AI, Artificial Intelligence, is a source of active concern in our culture. Tales abound in film, television, and written fiction about the potential for machines to exceed human capacities for learning and ultimately gain self-awareness, which will lead to them enslaving humanity, or worse. There are hopes for AI as well. Language recognition is one area where there has been growth. However much we may roll our eyes at Siri or Alexa’s inability to, first, hear the words we say properly, then interpret them accurately, it is worth bearing in mind that Siri was released a scant ten years ago, in 2011, with Alexa following in 2014. We may not be there yet, but self-driving vehicles are another AI product that will change our lives. It can be unclear where the use of advanced algorithms ends and AI begins in the handling of our online searching, and in how those with the means use AI to market endless products to us.

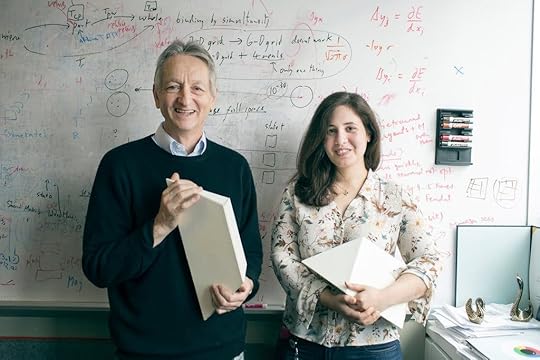

“How can an intelligent young man like you,” he asked, “waste your time with something like this?”

This was not out of character for the guy, who enjoyed provoking controversy, and, clearly, pissing people off. He single-handedly short-circuited a promising direction in AI research with his strident opposition.

…a colleague introduced [Geoff Hinton] at an academic conference as someone who had failed at physics, dropped out of psychology, and then joined a field with no standards at all: artificial intelligence. It was a story Hinton enjoyed repeating, with a caveat. “I didn’t fail at physics and drop out of psychology,” he would say. “I failed at psychology and dropped out of physics—which is far more reputable.”

The Genius Makers is a very readable bit of science history, aimed at a broad public, not the techie crowd, who would surely be demanding a lot more detail in the theoretical and implementation ends of decision-making and the construction of hardware and software. It will give you a clue as to what is going on in the AI world, and maybe open your mind a bit to what possibilities and perils we can all look forward to.

After deploying the software in its own data centers, Google had open-sourced this creation, freely sharing the code with the world at large. This was a way of exerting its power across the tech landscape. If other companies, universities, government agencies, and individuals used Google’s software as they, too, pushed into deep learning, their efforts would feed the progress of Google’s own work, accelerating AI research across the globe and allowing the company to build on this research.

We take a lot of the low-level infrastructure and we make that open source. Probably the biggest one in our history was our Open Compute Project where we took the designs for all of our servers, network switches, and data centers, and made it open source and it ended up being super helpful. Although a lot of people can design servers the industry now standardized on our design, which meant that the supply chains basically all got built out around our design. So volumes went up, it got cheaper for everyone, and it saved us billions of dollars which was awesome.

After one group of rats learned to navigate a maze, he would inject their brain matter into a second group. Then he would drop the second group into the maze to see if their minds had absorbed what the first group had learned.

To obtain this extract, the donor rats were killed by decapitation. … At least some of these [recipient] rats appear to be suffering adverse after-effects from the injection (including injuries and phenol poisoning in several cases).

[In the mid-1990s] LeCun and his colleagues also designed a microchip they called ANNA. … Instead of running their algorithms using ordinary chips built for just any task, LeCun’s team built a chip for this one particular task. That meant it could handle the task at speeds well beyond the standard processors of the day: about 4 billion operations a second.

This wasn’t because Baidu had better engineers or better technology. It was because Baidu was building its car in China. In China, government was closer to industry. As Baidu’s chief operating officer, [Qi Lu] was working with five Chinese municipalities to remake their cities so that they could accommodate the company’s self-driving cars.

When Hinton gave a lecture at the annual NIPS conference, then held in Vancouver, on his sixtieth birthday, the phrase “deep learning” appeared in the title for the first time. It was a cunning piece of rebranding. Referring to the multiple layers of neural networks, there was nothing new about “deep learning.” But it was an evocative term designed to galvanize research in an area that had once again fallen from favor.

Both projects were also conspicuous stunts, a way for OpenAI to promote itself as it sought to attract the money and the talent needed to push its research forward. The techniques under development at labs like OpenAI were expensive—both in equipment and in personnel—which meant that eye-catching demonstrations were their lifeblood.