Cal Newport's Blog

November 28, 2025

David Grann and the Deep Life

Last year, the celebrated New Yorker writer David Grann spoke with Nieman Storyboard about his book, The Wager. The interviewer asked Grann how he manages to keep coming across the kind of stories that most writers would dream of finding, even once in their lives.

Here’s how Grann responded:

“Coming up with the right idea is the hardest part. First, you try to find a story that grips you and has subjects that are fascinating. Then, you ask: Are there underlying materials to tell that story?… The third level of interrogation is: Does the story have another dimension, richer themes, or trap doors that lead you places?”

He later adds:

“I spend a preliminary period ruthlessly interrogating ideas as I come across them, even though it’s time-consuming and a bit frustrating. I don’t want to wake up two years into a book project saying, ‘This isn’t going anywhere.’”

These quotes caught my attention because their relevance extends beyond the craft of writing to the broader concern of cultivating depth in a world increasingly mired in digitally-enhanced shallowness.

In life, the types of deep projects that free us from these shallows–whether by transforming our career into something remarkable or making our personal lives richer–require a massive investment of time and effort. This includes:

Given these demands, it’s common to either lose interest in these projects once they get going or to be so intimidated by the path ahead that you never get started in the first place.

Grann’s advice helps with both issues. By raising the bar for considering a deep project–relentlessly examining, researching, and studying the reality of a pursuit before finally deeming it worthy–you’ll naturally end up giving serious consideration to fewer ideas. And those that do make it through this gauntlet will be so compelling that you’re much more likely to get started and stick with them.

This pre-commitment vetting is often a missing piece when discussing grand goals. Online “hustle culture” voices often emphasize activity for its own sake: Get started! Delay is for the weak! Craftsmen like Grann, on the other hand, understand that fundamental to the art of deep accomplishment is the patient search for the right subject.

The post David Grann and the Deep Life appeared first on Cal Newport.

November 23, 2025

When it Comes to AI: Think Inside the Box

James Somers recently published an interesting essay in The New Yorker titled “The Case That A.I. Is Thinking.” He starts by presenting a specific definition of thinking, attributed in part to Eric B. Baum’s 2003 book What is Thought?, that describes this act as deploying a “compressed model of the world” to make predictions about what you expect to happen. (Jeff Hawkins’s 2004 exercise in amateur neuroscience, On Intelligence, makes a similar case).

Somers then talks to experts who study how modern large language models operate, and notes that the mechanics of LLMs’ next-token prediction resemble this existing definition of thinking. Somers is careful to constrain his conclusions, but still finds cause for excitement:

“I do not believe that ChatGPT has an inner life, and yet it seems to know what it’s talking about. Understanding – having a grasp of what’s going on – is an underappreciated kind of thinking.”

Compare this thoughtful and illuminating discussion to another recent description of AI, delivered by biologist Bret Weinstein on an episode of Joe Rogan’s podcast.

Weinstein starts by (correctly) noting that the way a language model learns the meaning of words through exposure to text is analogous to how a baby picks up parts of language by listening to conversations.

But he then builds on this analogy to confidently present a dramatic description of how these models operate:

“It is running little experiments and it is discovering what it should say if it wants certain things to happen, etc. That’s an LLM. At some point, we know that that baby becomes a conscious creature. We don’t know when that is. We don’t even know precisely what we mean. But that is our relationship to the AI. Is the AI conscious? I don’t know. If it’s not now, it will be, and we won’t know when that happens, right? We don’t have a good test.”

This description conflates and confuses many realities about how language models actually function. The most obvious is that once trained, language models are static; they describe a fixed sequence of transformer layers, each combining an attention mechanism with a feed-forward neural network. Every word of every response that ChatGPT produces is generated by the same unchanging network.

Contrary to what Weinstein implies, a deployed language model cannot run “little experiments,” or “want” things to happen, or have any notion of an outcome being desirable or not. It doesn’t plot or plan or learn. It has no spontaneous or ongoing computation, and no updatable model of its world – all of which implies it certainly cannot be considered conscious.

As James Somers argues, these fixed networks can still encode an impressive amount of understanding and knowledge that is applied when generating their output, but the computation that accesses this information is nothing like the self-referential, motivated, sustained internal voices that humans often associate with cognition.

(Indeed, Somers specifically points out that our common conceptualization of thinking as “something conscious, like a Joycean inner monologue or the flow of sense memories in a Proustian daydream” has confused our attempts to understand artificial cognition, which operates nothing like this.)

~~~

I mention these two examples because they represent two different styles of talking about AI.

In Somers’s thoughtful article, we experience a fundamentally modern approach. He looks inside the proverbial black box to understand the actual mechanisms within LLMs that create the behavior he observes. He then uses this understanding to draw interesting conclusions about the technology.

Weinstein’s approach, by contrast, is fundamentally pre-modern in the sense that he never attempts to open the box and ask how the model actually works. He instead observes its behavior (it’s fluent with language), crafts a story to explain this behavior (maybe language models operate like a child’s mind), and then extrapolates conclusions from his story (children eventually become autonomous and conscious beings, therefore language models will too).

This is not unlike how pre-modern people would tell stories to explain natural phenomena, and then react to the implications of their tales; e.g., lightning comes from the Gods, so we need to make regular sacrifices to keep the Gods from striking us with a bolt from the heavens.

Language model-based AI is an impressive technology that is accompanied by implications and risks that will require cool-headed responses. All of this is too important for pre-modern thinking. When it comes to AI, it’s time to start our most serious conversations by thinking inside the box.


November 16, 2025

Why Can’t AI Empty My Inbox?

The address that I use for this newsletter has long since been overrun by nonsense. Seemingly every PR and marketing firm in existence has gleefully added it to the various mailing lists that they use to convince their clients that they offer global reach. I recently received, for example, a message announcing a new uranium mining venture. Yesterday morning, someone helpfully sent me a note to alert me that “CPI Aerostructures Reports Third Quarter and Nine Month 2025 Results.”

Here’s the problem: this is also the address where my readers send me interesting notes about my essays, or point me toward articles or books they think I might like. I want to read these messages, but they’re often hidden beneath unruly piles of digital garbage.

So, I decided to see if AI could solve my problem.

The tool I chose is called Cora, which I picked because it was among the more aggressive options available. Its goal is to reduce your inbox to messages that actually require your response, summarizing everything else in a briefing that it delivers twice a day.

Cora’s website notes that, on average, ninety percent of our emails don’t require a reply, “so then why do we have to read them one by one in the order they came in?” Elsewhere, it promises: “Give Cora your Inbox. Take back your life.”

This all sounded good to me. I activated Cora and let it loose.

~~~

I detail the story of my experience with Cora in my latest article for The New Yorker, which is titled “Why Can’t A.I. Manage My E-Mail?”, and was published last week.

Ultimately, the tool did a good job. This inbox has indeed been reduced to a much smaller collection of messages that almost all actually interest me. The AI is sometimes overzealous and filters out some messages that it should have left in place, but I can find those in the daily briefings, and nothing that arrives here is urgent business, so the stakes are low.

The bigger question I ask in this article, however, is whether AI will soon be able to go beyond filtering messages to answering them on our behalf, automating the task of email altogether. This would be a big deal:

I’ve come to believe that the seemingly humble task of checking e-mail—that unremarkable, quotidian backbeat to which digital office culture marches—is something more profound. In 1950, Alan Turing argued in a seminal paper that the question “Can machines think?” can be answered with a so-called imitation game, in which a computer tries to trick an interrogator into believing it’s human. If the machine succeeds, Turing argued, we can consider it to be truly intelligent. Seventy-five years later, the fluency of chatbots makes the original imitation game seem less formidable. Yet no machine has yet conquered the inbox game. When you look closer at what actually goes into this Sisyphean chore, an intriguing thought emerges: What if solving e-mail is the Turing test we need now?

Cora, as it turns out, cannot solve the inbox game: it can organize your messages, but not handle them on your behalf. Neither can any other tool I surveyed, from Superhuman to SaneBox. As I go on to explain in my article, this is not for lack of trying; there are key technical obstacles that keep AI tools far from being able to answer emails for us.

I encourage you to read my full article for the entire computer science argument. But I want to emphasize here the conclusion I reached: even with their current constraints, which limit AI-based tools mainly to filtering and summarizing messages, there’s still much room for them to evolve into increasingly interesting and useful configurations.

In my article, for example, I describe a demo I watched of an experimental AI tool that transforms the contents of your inbox into a narrative “intelligence briefing.” You then tell the tool in natural language what you want it to do (“tell Mary to send me a copy of that report and I’ll take a look”), and it writes and sends messages on your behalf. The possibilities here are intriguing!

Here’s how I ended my piece:

“Although A.I. e-mail tools will probably remain constrained…they can still have a profound impact on our relationship with a fundamental communication technology. …Recently, I returned from a four-day trip and opened my Cora-managed inbox. I found only twenty-four new e-mails waiting for my attention, every one of them relevant. I was still thrilled by this novel cleanliness. Soon, a new thought, tinged with some unease, crept in: This is great—but how could we make it better? I’m impatient for what comes next.”

This is the type of AI that interests me. Not super-charged chatbot oracles, devouring gigawatts of energy to promise me wise answers to any conceivable query, or the long-promised agents that can automate my tasks completely. But instead, practical improvements to chores that have long been a source of anxiety and annoyance.

I don’t need HAL 9000; an orderly inbox is enough for now.


November 10, 2025

Forget Chatbots. You Need a Notebook.

Back in 2012, as a young assistant professor, I traveled to Berkeley to attend a wedding. On the first morning after we arrived, my wife had a conference call, so I decided to wander the nearby university campus to work on a vexing theory problem my collaborators and I had taken to calling “The Beast.”

I remember what happened next because I wrote an essay about the experience. The tale starts slow:

“It was early, and the fog was just starting its march down the Berkeley hills. I eventually wandered into an eucalyptus grove. Once there, I sipped my coffee and thought.”

I eventually came across an interesting new technique to circumvent a key mathematical obstacle thrown up by The Beast. But this hard-won progress soon presented a new issue:

“I realized… that there’s a limit to the depth you can reach when keeping an idea only in your mind. Looking to get the most out of my new insights, and inspired by my recent commitment to the textbook method, I trekked over to a nearby CVS and bought a 6×9 stenographer’s notebook…I then forced myself to write out my thoughts more formally. This combination of pen and paper notes with the exotic context in which I was working ushered in new layers of understanding.”

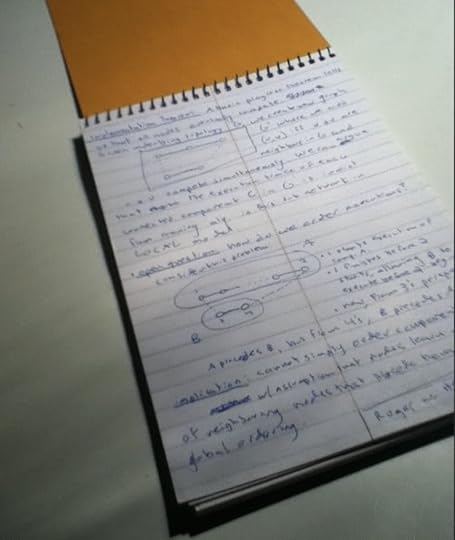

I even included a nostalgically low-resolution photo of these notes:

More than a decade later, I can’t remember exactly which academic paper I was working on in that eucalyptus grove, but based on some clues from the photo above, I’m pretty sure it was this one, which was published the following year and received a solid 65 citations.

I revisited this essay on my podcast this week. The activity it captured seemed a strong rebuke to the current vision of a fast-paced, digitized, AI-dominated workplace that Silicon Valley keeps insisting we must all embrace.

There’s a deeply human satisfaction to retreating to an exotic location and wrestling with your own mind, scratching a record of your battle on paper. The innovations and insights produced by this long thinking are deeper and more subversive than the artificially cheery bullet points of a chatbot.

The problem facing knowledge work in our current moment is not that we’re lacking sufficiently powerful technologies. It’s instead that we’re already distracted by so many digital tools that there’s no time left to really open the throttle on our brains.

And this is a shame.

Few satisfactions are more uniquely human than the slow extraction of new understanding, illuminated through the steady attention of your mind’s eye.

So, grab a notebook and head somewhere scenic to work on a hard problem. Give yourself enough time, and the enthusiastic clamor about a world of AI agents and super-charged productivity will dissipate to a quiet hum.


November 3, 2025

Why Are We Talking About Superintelligence?

A couple of weeks ago, Ezra Klein interviewed AI researcher Eliezer Yudkowsky about his new, cheerfully-titled book, If Anyone Builds It, Everyone Dies.

Yudkowsky is worried about so-called superintelligence, AI systems so much smarter than humans that we cannot hope to contain or control them. As Yudkowsky explained to Klein, once such systems exist, we’re all doomed. Not because the machines will intentionally seek to kill us, but because we’ll be so unimportant and puny to them that they won’t consider us at all.

“When we build a skyscraper on top of where there used to be an ant heap, we’re not trying to kill the ants; we’re trying to build a skyscraper,” Yudkowsky explains. In this analogy, we’re the ants.

In this week’s podcast episode, I go through Yudkowsky’s interview beat by beat and identify all the places where I think he’s falling into sloppy thinking or hyperbole. But here I want to emphasize what I believe is the most astonishing part of the conversation: Yudkowsky never makes the case for how he thinks we’ll succeed in creating something as speculative and outlandish as superintelligent machines. He just jumps right into analyzing why he thinks these superintelligences will be bad news.

The omission of this explanation is shocking.

Imagine walking into a bio-ethics conference and attempting to give an hour-long presentation about the best ways to build fences to contain a cloned Tyrannosaurus. Your fellow scientists would immediately interrupt you, demanding to know why, exactly, you’re so convinced that we’ll soon be able to bring dinosaurs back to life. And if you didn’t have a realistic and specific answer—something that went beyond wild extrapolations and a general vibe that genetics research is moving fast—they’d laugh you out of the room…

But in certain AI Safety circles (especially those emanating from Northern California), such conversations are now commonplace. Superintelligence as an inevitability is just taken as an article of faith.

Here’s how I think this happened…

In the early 2000s, a collection of overlapping subcultures emerged from tech circles, all loosely dedicated to applying hyper-rational thinking to improve oneself or the world.

One branch of these movements focused on existential risks to intelligent life on Earth. Using a concept from discrete mathematics called expected value, they argued that it can be worth spending significant resources now to mitigate an exceedingly rare future event, if the consequences of such an event would be sufficiently catastrophic. This might sound familiar, as it’s the logic that Elon Musk, who identifies with these communities, uses to justify his push toward us becoming a multi-planetary species.
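To make the expected-value logic concrete, here is a worked example. The numbers are purely illustrative assumptions, not figures from Musk, Bostrom, or anyone else mentioned here: a catastrophe with probability p and cost L carries an expected cost of p times L, so even a one-in-a-million event can loom large if L is taken to be roughly the entire human population.

```latex
\mathbb{E}[\text{loss}] = p \cdot L
  = 10^{-6} \times \left(8 \times 10^{9}\ \text{lives}\right)
  = 8 \times 10^{3}\ \text{lives}
```

Under these assumed numbers, the argument goes, any mitigation effort costing less than the equivalent of 8,000 lives counts as worthwhile, even though the event itself is exceedingly unlikely.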

As these rationalist existential risk conversations gained momentum, one of the big topics pursued was rogue AI that becomes too powerful to contain. Thinkers like Yudkowsky, along with Oxford’s Nick Bostrom, and many others, began systematically exploring all the awful things that could happen if an AI became sufficiently smart.

The key point about all of this philosophizing is that, until recently, it was all based on a hypothetical: What would happen if a rogue AI existed?

Then ChatGPT was released, triggering a general vibe of rapid advancement and diminishing technological barriers. As best I can tell, for many in these rationalist communities, this event caused a subtle, but massively consequential, shift in their thinking: they went from asking, “What will happen if we get superintelligence?” to asking, “What will happen when we get superintelligence?”

These rationalists had been thinking, writing, and obsessing over the consequences of rogue AI for so long that when a moment came in which suddenly anything seemed possible, they couldn’t help but latch onto a fervent belief that their warnings had been validated, a shift that made them, in their own minds, quite literally the potential saviors of humanity.

This is why those of us who think and write about these topics professionally so often encounter people who seem to have an evangelical conviction that the arrival of AI gods is imminent, and then dance around inconvenient information, falling back on dismissal or anger when questioned.

(In one of the more head-turning moments of their interview, when Klein asked Yudkowsky about critics–such as myself–who argue that AI progress is stalling well short of superintelligence, he retorted: “I had to tell these Johnny-come-lately kids to get off my lawn.” In other words, if you’re not one of the original true believers, you shouldn’t be allowed to participate in this discussion! It’s more about righteousness than truth.)

For the rest of us, however, the lesson here is clear. Don’t mistake conviction for correctness. AI is not magic; it’s a technology like any other. There are things it can do and things it can’t, and people with engineering experience can study the latest developments and make reasonable predictions, backed by genuine evidence, about what we can expect in the near future.

And indeed, if you push the rationalists long enough on superintelligence, they almost all fall back on the same answer: all we have to do is make an AI slightly smarter than ourselves (whatever that means), and then it will make an AI even smarter, and that AI will make an even smarter AI, and so on, until suddenly we have Skynet.

But this is just a rhetorical sleight-of-hand, a way to absolve themselves of any responsibility for explaining how to develop such a hyper-capable computer. In reality, we have no idea how to make our current AI systems anywhere near powerful enough to build whole new, cutting-edge computer systems on their own. At the moment, our best coding models seem to struggle with consistently producing programs more advanced than basic vibe coding demos.

I’ll start worrying about Tyrannosaurus paddocks once you convince me we’re actually close to cloning dinosaurs. In the meantime, we have real problems to tackle.


October 27, 2025

What If Lincoln Had a Smartphone?

Back in 2008, when I was still early in my writing career, I published an essay on my blog that posed a provocative question: Would Lincoln Have Been President if He Had Email? This was one of my early attempts to grapple with problems like digital distraction and focus that would eventually evolve into my books Deep Work and A World Without Email. And at its core was a troubling notion that occurred to me in response to watching a documentary about our sixteenth president:

If the Internet is robbing us of our ability to sit and concentrate, without distraction, in a Lincoln log cabin style of intense focus, we must ask the obvious question: Are we doomed to be a generation bereft of big ideas?

If Lincoln had access to the internet, in other words, would he have been too distracted to become the self-made man who ended up transforming our fledgling Republic?

In this early essay, I leaned toward the answer of “yes.” But in the years since, I’ve become a bit of a Lincoln obsessive, having read more than half a dozen biographies. This has led me to believe that my original instincts were flawed.

Lincoln, of course, didn’t have to contend with digital devices. Still, the rough frontier towns in Indiana and Illinois, where he spent much of his formative years, offered their own analog version of the same general things we fear about the modern internet.

They featured a relentless push toward numbing distraction, most notably in the form of alcohol. “Incredible quantities of whiskey were consumed,” wrote William Lee Miller in Lincoln’s Virtues, “the custom was for every man to drink it, on all occasions that offered.”

There was also the threat of “cancellation” embodied in actual violent mobs, and no shortage of efforts to radicalize or spread hate, such as the antipathy toward Native Americans, which Miller described as a “ubiquitous western presence” at the time.

And yet, Lincoln somehow avoided these traps and rose well above his initial station. There are many factors at play in this narrative, but one, in particular, is hard to ignore: he sharpened his mind with books.

Here are various quotes about young Lincoln, offered by his stepmother, Sarah Bush Lincoln, who encouraged this interest:

“Abe read all the books he could lay his hands on.”

“I induced my husband to permit Abe to read and study at home, as well as at school…we took particular care when he was reading not to disturb him–we would let him read on till he quit of his own accord.”

“While other boys were out hooking watermelons and trifling away their time, he was studying his books–thinking and reflecting.”

Lincoln used books to develop his brain in ways that opened his world, and enabled him to see new opportunities and imagine more meaningful futures, providing a compelling alternative to the forces conspiring to keep him down.

Lurking in here is advice for our current moment. To move beyond the distracted darkness of the online world, we might, in a literal sense, take a page from Lincoln and work toward growing our minds instead of pacifying them.


October 20, 2025

Is Sora the Beginning of the End for OpenAI?

On my podcast this week, I took a closer look at OpenAI’s new video generation model, Sora 2, which can turn simple text descriptions into impressively realistic videos. If you type in the prompt “a man rides a horse which is on another horse,” for example, you get, well, this:

AI video generation is both technically interesting and ethically worrisome in all the ways you might expect. But there’s another element of this story that’s worth highlighting: OpenAI accompanied the release of their new Sora 2 model with a new “social iOS app” called simply Sora.

This app, clearly inspired by TikTok, makes it easy for users to quickly generate short videos based on text descriptions and consume others’ creations through an algorithmically curated feed. The videos flying around this new platform are as outrageously stupid or morally suspect as you might have guessed; e.g.,

Or,

The Sora app, in other words, takes the already purified engagement that fuels TikTok and removes any last vestiges of human agency, resulting in artificial, high-octane slop.

It’s unclear whether this app will last. One major issue is the back-end expense of producing these videos. For now, OpenAI requires a paid ChatGPT Plus account to generate your own content. At the $20 tier, you can pump out up to 50 low-resolution videos per month. For a whopping $200 a month, you can generate more videos at higher resolutions. None of this compares favorably to competitors like TikTok, which are vastly cheaper to operate and can therefore not only remain truly free for all users, but actually pay their creators.

Whether Sora lasts or not, however, is somewhat beside the point. What catches my attention most is that OpenAI released this app in the first place.

It wasn’t that long ago that Sam Altman was still comparing the release of GPT-5 to the testing of the first atomic bomb, and many commentators took Dario Amodei at his word when he proclaimed that half of entry-level white-collar jobs might soon be automated by LLM-based tools.

A company that still believed its technology was imminently going to run large swathes of the economy, and would be so powerful as to reconfigure our experience of the world as we know it, wouldn’t be seeking to make a quick buck selling ads against deepfake videos of historical figures wrestling. They also wouldn’t be entertaining the idea, as Altman did last week, that they might soon start offering an age-gated version of ChatGPT so that adults could enjoy AI-generated “erotica.”

To me, these are the acts of a company that poured tens of billions of investment dollars into creating what they hoped would be the most consequential invention in modern history, only to finally realize that what they wrought, although very cool and powerful, isn’t powerful enough on its own to deliver a new world all at once.

In his famous 2021 essay, “Moore’s Law for Everything,” Altman made the following grandiose prediction:

“My work at OpenAI reminds me every day about the magnitude of the socioeconomic change that is coming sooner than most people believe. Software that can think and learn will do more and more of the work that people now do. Even more power will shift from labor to capital. If public policy doesn’t adapt accordingly, most people will end up worse off than they are today.”

Four years later, he’s betting his company on its ability to sell ads against AI slop and computer-generated pornography. Don’t be distracted by the hype. This shift matters.


October 13, 2025

What Neuroscience Teaches Us About Reducing Phone Use

This week on my podcast, I delved deep into the neural mechanisms involved in making your phone so irresistible. To summarize, there are bundles of neurons in your brain, associated with your short-term motivation system, that recognize different situations and then effectively vote for corresponding actions. If you’re hungry and see a plate of cookies, there’s a neuron bundle that will fire in response to this pattern, advocating for the action of eating a cookie.

The strength of these votes depends on an implicit calculation of expected reward, based on your past experiences. When multiple actions are possible in a given situation, then, in most cases, the action associated with the strongest vote will win out.
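The voting picture above can be sketched as a toy model in Python. This is a deliberate simplification, not a model of real neurons: the action names and reward values are made up for illustration, and where the brain picks the strongest vote only “in most cases,” this sketch always does.

```python
def choose_action(votes: dict[str, float]) -> str:
    """Return the action with the strongest vote (highest expected reward)."""
    return max(votes, key=votes.get)


# Hypothetical situation: bored on the couch. Each available action votes
# with a strength equal to its learned expected reward (made-up values).
votes = {
    "pick up phone": 0.9,
    "read a book": 0.5,
    "do the dishes": 0.2,
}

print(choose_action(votes))  # pick up phone

# If the phone is not within reach, its vote is never cast at all,
# so the strongest remaining option wins instead.
phone_away = {action: v for action, v in votes.items() if action != "pick up phone"}
print(choose_action(phone_away))  # read a book
```

The point of the toy is the comparison: an action only loses when a stronger vote exists or when its own vote is never registered.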

One way to understand why you struggle to put down your phone is that it overwhelms this short-term motivation system. One factor at play is the kind of reward these devices create. Because popular services like TikTok deploy machine learning algorithms to curate content based on observed engagement, they provide an artificially consistent and pure reward experience. Almost every time you tap on these apps, you’re going to be pleasantly surprised by a piece of content and/or find a negative state of boredom relieved, both of which are outcomes that our brains value.

Due to this techno-reality, the votes produced by the pick-up-the-phone neuron bundles are notably strong. Resisting them is difficult and often requires the recruitment of other parts of your brain, such as the long-term motivation system, to convince yourself that some less exciting activity in the current moment will lead to a more important reward in the future. But this is exhausting and often ineffective.

The second issue with how phones interact with your brain is the reality that they’re ubiquitous. Most activities associated with strong rewards are relatively rare—it’s hard to resist eating the fresh-baked cookie when I’m hungry, but it’s not that often that I come across such desserts. Your phone, by contrast, is almost always with you. This means that your brain’s vote to pick up your phone is constantly being registered. You might occasionally resist the pull, but its relentless presence means that it’s inevitably going to win many, many times as your day unfolds.

~~~

Understanding these neural mechanisms is important because they help explain why so many efforts to reduce phone use fail—they don’t go nearly far enough!

Consider, for example, the following popular tips that often fall short…

Increase Friction

This might mean moving the most appealing apps to an inconvenient folder on your phone, or using a physical locking device like a Brick that requires an extra step to open your phone. These often fail because, from the perspective of your short-term motivation systems, these mild amounts of friction only decrease your expected reward by a small amount, which ultimately has little impact on the strength of its vote for you to pick up your phone.

Make Your Phone Grayscale

There is an idea that eliminating bright colors from your phone’s screen will somehow disrupt the cues that lead you to pick it up. This also often fails because colors have very little to do with your brain’s expected reward calculation, which is based on more abstract benefits, such as pleasant surprise and the alleviation of boredom.

Moderate Your Use with Rules

It’s also common to declare clear rules about how much you will use each type of app; e.g., “only 30 minutes of Instagram per day.” The problem is that such rules are abstract and symbolic, and have limited interaction with your short-term motivation systems, which deal more with the physical world and immediate rewards.

Detox Regularly

Another common tactic is to “detox” by taking regular time away from your phone, such as a weekly Internet Shabbat, or an annual phone-free meditation retreat. These practices can boast many benefits, but they’re not nearly long enough to start diminishing the learned rewards that drive your motivation system. It would take many months away from your phone before your brain began to forget its benefits.

~~~

So what does work? Our new understanding of our brains points toward two obvious strategies that are both boringly basic and annoyingly hard to stick to.

First, remove the reward signals by deleting from your phone social media or any other app that monetizes your attention. If your phone no longer delivers artificially consistent rewards, your brain will rapidly reduce the expected reward of picking it up.

Second, minimize your phone’s ubiquity by keeping it charging in your kitchen when at home. If you need to look something up or check in on a messaging app, go to your kitchen. If you need to listen to a podcast while doing chores, use wireless earbuds or wireless speakers. If your phone isn’t immediately accessible, the corresponding neuronal bundles in your motivation system won’t fire as often or as strongly.

In the end, here’s what’s clear: Our brains aren’t well-suited for smartphones. We might not like this reality, but we cannot ignore it. Fixing the issues this causes requires more than some minor tweaks. We have to drastically change our relationship to our devices if we hope to control their impact.

The post What Neuroscience Teaches Us About Reducing Phone Use appeared first on Cal Newport.

October 6, 2025

The Great Alienation

Last week, I published an essay about the so-called Great Lock In of 2025, a TikTok challenge that asks participants to tackle self-improvement goals. I argued that this trend was positive, especially for Gen Z, because the more you take control of your real life, the easier it becomes to take control of your screens.

In response, I received an interesting note from a reader. “The biggest challenge with this useful goal Gen Z is pursuing,” he wrote, “is they don’t know what to do.”

He then elaborated:

“Most of them are chasing shiny objects that others are showing whether on social media or in real life. And when they (quickly) realize it’s not what they want, they leave and jump on to something else…this has been a common problem across generations. But Gen Z, and youngsters after it, are making things worse by scrolling through social media hoping to find their purpose by accident (or by someone telling them what they should do).”

Here we encounter one of the most insidious defense mechanisms that modern distraction technology deploys. By narrowing their users' world to ultra-purified engagement, these platforms present a fun-house mirror distortion of what self-improvement means: shredded gym dwellers, million-subscriber YouTube channels, pre-dawn morning routines. Because these "shiny" goals are largely unattainable or unsustainable, those motivated to make changes eventually give up and return to the numbing comfort of their screens.

By alienating their users from the real world, these technologies make it difficult for them to ever escape the digital. To succeed with the Great Lock In, we need to resolve the Great Alienation.

~~~

At the moment, I’m in the early stages of writing a book titled The Deep Life. It focuses on the practical mechanisms involved in discerning what you want your life to be like and how to make steady progress toward these visions.

At first glance, this might seem like an odd book for me to write, given that my work focuses primarily on technology’s impacts and how best to respond to them. When we observe something like Gen Z’s struggles with the Great Lock In, however, it becomes clear that this book’s topic actually has a lot to do with our devices. Figuring out how to push back on the digital will require more attention paid to improving the analog.

The post The Great Alienation appeared first on Cal Newport.

September 29, 2025

The Great Lock In of 2025

If there’s one thing that I’m always late to discover, it has to be online youth trends. True to form, I’m only now starting to hear about the so-called “Great Lock In of 2025.”

This idea began circulating on TikTok over the summer. Borrowing the term ‘lock in’, which is Gen Z slang for focusing without distraction on an important goal, this challenge asks people to spend the last four months of 2025 working on the types of personal improvement resolutions that they might otherwise defer until the New Year. “It’s just about hunkering down for the rest of the year and doing everything that you said you’re going to do,” explained one TikTok influencer, quoted recently in a Times article about the trend.

Listeners of my podcast know that I’m a fan of the strategy of dedicating the fall to making major changes in your life. My episode on this topic, How to Reinvent Your Life in 4 Months, which I originally aired in 2023 and re-aired this past summer, is among my most popular – boasting nearly 1.5 million views on YouTube.

To me, however, the more significant news contained in this trend is the generalized concept of ‘lock in’, which has become so popular among Gen Z that the American Dialect Society voted it the “most useful” term of 2024.

Critically, ‘lock in’ seems to have been defined in reaction to smartphones. “I think that we live in an era where it’s very easy to be distracted and that we’re on our phones a lot,” explained language science professor Kelly Elizabeth Wright, in the Times. “‘Lock in’ really came up in these last couple years, where people are saying, like, ‘I have to make myself focus. I have to get into a state where I am free from distraction to accomplish, essentially, anything.’”

This matters because defining positive alternatives to negative habits is an effective way to reduce them.

When I first published Deep Work, which centers on the importance of undistracted focus in your professional life, people already knew that spending their days frantically checking email probably wasn’t good. They only felt motivated to change, however, when presented with a positive alternative.

Ideas like ‘locking in’ might play a similar role in countering Gen Z's collective smartphone addiction. It's one thing to be told again and again that your devices are bad, but it's another to experience a clear vision of the good that's possible once you put them away. When you experience life in its full analog richness, the allure of the digital diminishes.

The irony of the Great Lock In of 2025, of course, is that it started on TikTok – the ultimate digital distractor. My hope is that by the New Year, this challenge will no longer be trending; not because people gave up, but because they’re not online enough anymore to talk about it.

The post The Great Lock In of 2025 appeared first on Cal Newport.
