David Clinton's Blog

October 16, 2023

How to Perform CRUD Operations – JavaScript and SQL Example

For the most part, interactive website architectures will involve generating or dispensing data of one sort or another. You can certainly use HTML forms to collect user input. But the kind of web form that’s described here will only take you so far.

What we really need is a way to reliably store and manipulate our data within the application environment.

In this article, I’m going to show you how to connect a back end database to your data collection process. The plan involves tossing some HTML, JavaScript, and the tiny database engine SQLite into a bowl, mixing vigorously, and seeing what comes out.

This article comes from my Complete LPI Web Development Essentials Study Guide course. If you’d like, you can follow the video version here:

As you may already know, the SQL in SQLite stands for structured query language. This means that the syntax you’ll use for interacting with a SQLite database will closely parallel how you’d do it with databases like MariaDB, Amazon Aurora, Oracle, or Microsoft’s SQL Server. If you’ve got experience with any of those, you’ll be right at home here.

Why are we going to use SQLite here? Because it’s a very popular choice for the kind of work you’re likely to undertake in a web environment.

You’ll need to create a new directory on your machine along with some files with JavaScript code. We’ll learn how to create, modify, and delete records in a SQLite database.

I could incorporate all those actions into a single file, of course, but I think breaking them out into multiple files will make it easier to understand what’s going on.

Connecting to a Database and Creating a TableHere’s what the first file will look like:

const sqlite3 = require('sqlite3').verbose();// Create/connect to the databaseconst db = new sqlite3.Database('mydatabase.db');// Create a tabledb.run(`CREATE TABLE IF NOT EXISTS users ( id INTEGER PRIMARY KEY, name TEXT, age INTEGER)`);// Insert dataconst insertQuery = `INSERT INTO users (name, age) VALUES (?, ?)`;const name = 'Trevor';const age = 5;db.run(insertQuery, [name, age], function (err) { if (err) { console.error(err.message); } else { console.log(`Inserted data with id ${this.lastID}`); }});// Close the database connectiondb.close();We begin by loading the sqlite3 module as sqlite3 and then creating the db variable to represent our new database instance. The database will be called mydatabase.db.

const sqlite3 = require('sqlite3').verbose();const db = new sqlite3.Database('mydatabase.db');If there isn’t a database using that name in our local directory, the code will create one, otherwise it’ll just connect to the one that’s there already.

Since this is our first run, I’ll create a new table within the mydatabase.db database. There will be three keys in our table: id, name, and age.

db.run(`CREATE TABLE IF NOT EXISTS users ( id INTEGER PRIMARY KEY, name TEXT, age INTEGER)`);As you can see, id will be the primary key that we’ll use to reference individual records.

We defined the data type of each key: integer, text and, again, integer. This definition is something we only need to do once. But we do want to get it right, because changing it later, after we’ve already added data, can be tricky.

Inserting New Data into a TableIn this section, we’ll will add a new record to the table using the SQL INSERT command.

const insertQuery = `INSERT INTO users (name, age) VALUES (?, ?)`;const name = 'Trevor';const age = 5;db.run(insertQuery, [name, age], function (err) { if (err) { console.error(err.message); } else { console.log(`Inserted data with id ${this.lastID}`); }});You’ll probably discover that official SQL documentation always capitalizes key syntax terms like INSERT and SELECT. That’s a useful best practice, but it’s not actually necessary. As a rule, I’m way too lazy to bother.

The query itself is templated as insertQuery, with the name and age details added as constants in the lines that follow.

The db.run method, using the insertQuery constant and those two values (name and age) as attributes, is then executed. Based on the success or failure of the operation, log messages will be generated.

But hang on for a moment. What’s with those question marks after declaring insertQuery? And why did we need to break this process into two parts?

This is actually an important security practice known as an escape variable. With this in place, when the db.run() method executes the prepared statement, it’ll automatically handle the escaping of the variable value, preventing SQL injection.

Lastly, we close down the connection:

db.close();Modifying DataNow let’s see how the “modify” code works. Like before, we create a SQLite3 constant and then connect to our database.

This time, however, our table already exists, so we can go straight to the “modify” section.

const sqlite3 = require('sqlite3').verbose();// Create/connect to the databaseconst db = new sqlite3.Database('mydatabase.db');// Modify dataconst updateQuery = `UPDATE users SET age = ? WHERE name = ?`;const updatedAge = 30;const updatedName = 'name2';db.run(updateQuery, [updatedAge, updatedName], function (err) { if (err) { console.error(err.message); } else { console.log(`Modified ${this.changes} row(s)`); }});// Close the database connectiondb.close();The pattern is similar. We define an updateQuery method to UPDATE a record that we’ll define. This operation will change the age value for an entry whose name equals Trevor.

You may recall that Trevor’s age was earlier listed as 25. We’re going to update that to 30. Everything else will work the same as before, including closing the connection when we’re done.

This section of code from the third file will delete a record:

const deleteQuery = `DELETE FROM users WHERE name = ?`;const deletedName = 'name1';db.run(deleteQuery, [deletedName], function (err) { if (err) { console.error(err.message); } else { console.log(`Deleted ${this.changes} row(s)`); }});The code above will delete the record where the name equals Trevor.

You can run any of those files using the node command. But you should first make sure that you’ve installed the sqlite3 module:

$ npm install sqlite3Next I’ll use node to run the first file (that you could choose to call db.js).

$ node db.jsInserted data with id 1We’ll see that a new record has been successfully inserted. If you list the directory contents, you’ll also see that a new mydatabase.db file has been created.

You can always manually log into sqlite3 to see how things might have changed. I’ll reference the mydatabase.db file so we can open it up right away.

$ sqlite3 mydatabase.dbTyping .tables within the SQLite interface will list all the existing tables in this database. In our case, it’ll be the users table we created.

sqlite> .tablesuserssqlite>Now I’ll use the SQL select command to display a record. Here I’ll use the asterisk to represent all records and specify the users table.

sqlite> SELECT * FROM users;1|Trevor|25sqlite>We can see that record 1 containing Trevor who is 25 years old has been created. Great!

Finally, we can run the delete code which should remove Trevor altogether:

const deleteQuery = `DELETE FROM users WHERE name = ?`;const deletedName = 'Trevor';db.run(deleteQuery, [deletedName], function (err) { if (err) { console.error(err.message); } else { console.log(`Deleted ${this.changes} row(s)`); }});I should note that the db.run and db.close format I used for those methods can also be referred to as Database.run(), and database.close(). It’s just a matter of preference – or, in my case, laziness. I’m a Linux admin, after all, and the very best admins are, in principle, lazy.

SummaryWe’ve seen how use JavaScript to connect to a back end database, create a new table, and then add, modify, and delete records in that table. And we seem to have gotten away with it, too!

Now try this on your own computer. But play around with the values. Even better: build something practical.

This article comes from my Complete LPI Web Development Essentials Study Guide course. And there’s much more technology goodness available at bootstrap-it.com

The post How to Perform CRUD Operations – JavaScript and SQL Example appeared first on Bootstrap IT.

February 23, 2023

Using GPT-3 As A Content Management System (CMS)

It’s been said that artificial intelligence (AI) won’t put human beings out of work. But human beings who use AI will put those who don’t use AI out of work. Put differently, AI has the power to make you much, much faster and better at what you already do.

Do you code for a living? Consulting ChatGPT when you’ve hit a wall will nearly always get you through it faster than searching for a solution online (or leaving through a textbook). Do you write for a living? ChatGPT is more effective with outline creation and writer’s block than buckets of Adderall.

But it’s not just about boosting your efficiency and speed. AI is also poised to redefine entire industries. To illustrate what I mean, let’s talk about enterprise Content Management Systems (CMS).

What is a CMS?A CMS is any software system that manages extensive data archives meant to be accessed by multiple individuals and groups. Government departments or companies might expose some or all of their data to clients, customers, or vendors. That data might live within secure databases, but appropriately phrased requests could deliver precise subsets of that data to authorized consumers. As a rule, a good CMS will provide all or most of these features:

Access control to ensure data is available only to authorized consumersSearch and navigationNetwork connectivity to permit secure remote accessVersion control to provide data lifecycle management and appropriate attributionMulti-media management to incorporate plain-text, structured SQL, audio, and video resourcesDocument creation toolsSome popular CMS systems include Atlassian Confluence and, in a very different way, WordPress.

It’s also common for smaller businesses and other organizations to maintain extensive archives of documentation and “institutional knowledge”. But they often don’t use formal CMS platforms, and this is the kind of use case we’re going to discuss here.

The problem of data management in informal settingsOrganizations change. Employees come and go and, in between, change roles. And systems evolve. This means that any given data resource is as likely as not to fall out of date or simply get lost. Or the person who once knew where everything was is no longer around to ask.

Is that not confusing enough? Well, consider how an organization’s data and documents can be hosted on a dizzying range of hosts, including individual team members’ PCs, local file servers, and cloud storage platforms. If you haven’t got the money or – more important – the time to incorporate an industrial strength CMS into your workflow, you’ll need something a bit more lightweight, which is precisely where AI tools like GPT-3 can come in.

How GPT-3 can solve your document management problemsThe value of a CMS is in how it can help users can quickly find exactly the resources they need. The value of an internet search engine is in how it can help users quickly find the resources they need. Do you see a pattern here? More: to different degrees, both a good CMS and a search engine accept natural language inputs and, based on positional algorithms, return related information.

But how much more powerful could those tools be if they actually understood the natural language requests. Now that is the secret superpower of AI. And how more powerful they still would be if they actually understood the content of the documents they’re returning!

To explain what I mean, I’m going to show this to you in action – although on a very small scale.

Using GPT-3 as a CMSImagine that your organization relies on documentation stretched across a handful of PCs and servers around your office. Different people created the documentation over many years, and it’s stored in more than one format; you know: PDFs, spreadsheets, MS Word docs, meeting transcripts, etc. Now someone wants an answer to a question but doesn’t even know what the right document is called, let alone where it’s kept.

Suppose you’ve already exposed GPT-3 to your entire digital archive. And then suppose you used the contents of that archive to train the AI to “understand” your organization better than anyone. How, then, could you get the answer to your question?

Simple. You sit down and type out a GPT-3 prompt. “How much did we pay for rent on our storage facility building over the past four years?” Or: “Who is the registered owner of our Amazon Web Services Organizations account?” Or: “Can you show me the immediately previous version of the source code for the data analytics app used by the web administration team?”

Training GPT-3 on private dataHere, in a very scaled-down way, is how that training might look using the GPT-3.5 API using Python.

I’ll first import a couple of libraries and the OpenAI access key (from a local file called key).

# Import Required Librariesimport openaiimport re# Reference your GPT-3 access keyopenai.api_key_path = 'key'I’ll then read a single article into a variable called text. As it happens, this code comes from an actual experiment I recently ran as a proof of concept. The article here was the Markdown version of one chapter from a book I’d written on digital security.

# Read in the Text Filewith open("article1.md", "r") as file: text = file.read()With our text document loaded, it’s time to feed it to GPT-3 and then prompt it with a question. I’ll specify I’ll go with the text-davinci-002 GPT engine. There’s obviously more than one, each with its own advantages. The question variable contains the question I’d like to ask, while the prompt argument contains both the question and the document itself. openai.Completion.create is the actual command that makes everything happen.

# Ask GPT-3 a Questionquestion = "Describe the primary topic of this text in 30 words or less."response = openai.Completion.create( engine="text-davinci-002", prompt=f"{question} {text}", max_tokens=1024, temperature=0.5)print(response["choices"][0]["text"])After a few minutes spent thinking about things, GPT-3 got back to me with this:

This text provides an overview of digital security threats and tools for protection.This shows that the AI effectively understood my question and the document’s contents well enough to pull out that concise summary. Apply this process to all the documents in your archive, and you’ll have an effective CMS that might be significantly better than any commercial package on the market today.

Of course, I just showed you a very basic example. You’ll need to optimize your code for cost and efficiency, apply it to a much more extensive range of resources, and then expose your trained AI model across your networks. It would probably also be helpful to wait for improved versions of GPT-3 (or GPT-4) that are on the way. But the principle itself seems workable.

The post Using GPT-3 As A Content Management System (CMS) appeared first on Bootstrap IT.

January 24, 2023

Just What Could ChatGPT Disrupt?

There’s been a flood of voices rolling across the internet singing the praises of ChatGPT. The newest public interface to OpenAI’s GPT-3 natural language processor is attracting much attention. Anyone who’s tried the chatbot for themselves comes away amazed by the quality of “thinking” the tool demonstrates. And amused with the confidence it sometimes exhibits while calmly spitting out delusional fabrications.

But GPT-3 (and the reportedly much-improved GPT-4 that’s coming soon) is a game changer. The artificial intelligence engine was trained on a vast knowledge base that it uses to properly understand the questions you ask and respond with helpful and in-context answers.

All that’s stuff I’m sure you already know. What I’m going to address here are the specific industries and tasks that ChatGPT and its peers are poised to disrupt. Or, in other words, how much our professional and personal lives might change over the next few years.

Content generation at scaleThe most obvious task to which ChatGPT will be applied is what it seems to do best: write. If your business needs to produce pages and pages of grammatical, coherent, and compelling prose, you’ll have a hard time finding humans who can work as quickly or cheaply as a modern AI.

This can be a game changer for, say, a small company run by people for whom English is not their first language. Need to generate large volumes of on-target content to populate a website designed to attract and educate potential customers? No one can compete with ChatGPT. Is the writing quality produced by today’s ChatGPT as good as the very best working technology writers? No. It’s not. But it’s miles ahead of what most companies can afford and easily good enough for most business purposes.

And if you’re not impressed with what the tool is generating today, wait six months and check back.

Google might penalize websites using AI-generated content and drop their search engine optimization (SEO) ranking. Beyond SEO content, ChatGPT is well-positioned to produce a company’s documentation, product specs, and even project proposals. Embedding those documents into ChatGPT and exposing the prompt to your internal workforce will possibly have a significant impact.

App developmentHow is code written? I’m old enough to remember struggling to teach myself QBasic with nothing more than a physical QBasic for beginners book. Progress in the years before the internet became useful was, predictably, slow.

I’m sure you’ve encountered that mythological image of developers. You know: Hoodie-wearing guys spending their days in front of an array of video displays, hunched over a keyboard and typing madly for hours on end (with an optional caffeine-infused IV bag attached). The reality, as we all know, is that modern programming involves much less typing and a lot more reading search engine (and Stack Overflow) results.

Progress these days isn’t nearly as slow as it was, but there are still plenty of bottlenecks.

This is just where GPT-3 can make a big difference. You prompt ChatGPT with a description of the syntax problem you’re facing or the task you’re trying to accomplish. And the AI will immediately generate beautifully documented code in whatever language you like. You’ll read through the code and try running it. If something fails, entering the error message in your next prompt will generate fine-tuning. Even if, in the end, it doesn’t work, it’ll definitely get you closer. As I’ve noted elsewhere, this “collaboration” looks a lot like pair programming.

Trust me: ChatGPT is much faster than researching and debugging all by yourself. It’s not good enough to replace you as a developer (yet). But it can definitely turbocharge your productivity. And if your entire dev team is ChatGPT-enabled, you could significantly improve your output cadence.

Search enginesLet me note that most of the unimaginable wealth amassed by Google (or Alpha) over the years came through the sale of ads to be displayed alongside your search results. What might happen to those revenue streams once ChatGPT (and tools like it) become more effective than an internet search? At this point, no one’s figured out a way to place ads within ChatGPT output. But even if that happens one day, who says it’ll be Google running the show?

So where will Google be when 50% or 75% of its users switch from search altogether? This transition will be interesting.

Legal document managementDespite their intense training, many of the services lawyers provide involve sophisticated text processing. Contracts, wills, property title searches, legal discovery, and even nasty warnings are primarily about finding and fitting a few critical details into larger bodies of generic boilerplate text, which is something that modern AIs happen to be really good at.

It may not look cool arguing before a jury in a Hollywood movie, but ChatGPT would make a pretty good lawyer.

Session Replay for DevelopersUncover frustrations, understand bugs and fix slowdowns like never before with OpenReplay — an open-source session replay suite for developers. It can be self-hosted in minutes, giving you complete control over your customer data

The post Just What Could ChatGPT Disrupt? appeared first on Bootstrap IT.

January 1, 2023

What Will ChatGPT Disrupt Today?

This post is actually an experiment with content creation through various AI and automation tools. You can, if you prefer, view the video version instead:

Natural language processing (NLP) is a field of artificial intelligence that focuses on enabling computers to understand, interpret, and generate human language. There have been many advances in NLP in recent years, and these advances are beginning to disrupt a number of industries.

One industry that is likely to be disrupted by NLP is the customer service industry. Many companies already use NLP-powered chatbots to handle customer inquiries, and as these chatbots become more sophisticated, they will be able to handle an increasingly wide range of customer interactions. This could lead to a reduction in the need for human customer service representatives, as chatbots are able to handle a larger portion of customer interactions.

Another industry that is likely to be disrupted by NLP is the translation industry. There are already NLP-powered translation tools that are able to provide high-quality translations of a wide range of languages. As these tools become more sophisticated, they will be able to handle an increasing number of languages and translation tasks, potentially leading to a reduction in the need for human translators.

A third industry that is likely to be disrupted by NLP is the legal industry. There are already NLP tools that are able to analyze legal documents and extract relevant information, and as these tools become more sophisticated, they will be able to perform a wider range of legal tasks. This could lead to a reduction in the need for certain types of legal professionals, such as paralegals.

There are a number of ways that one might profit from the disruption caused by NLP tools in these and other industries. One possibility is to invest in companies that are developing or using these tools. Another option is to become skilled in NLP and work in a field related to the development or use of these tools. Finally, it may be possible to start a business that uses NLP tools to disrupt an existing industry or create a new one.

In conclusion, NLP is a rapidly growing field that is beginning to disrupt a number of industries, including customer service, translation, and the legal industry. There are many opportunities for those who are interested in profiting from this disruption, whether through investing, working in the field, or starting a business that uses NLP tools.

December 20, 2022

How to Build Your Own Wireguard VPN in Five Minutes

You may already understand how important a good VPN can be for maintaining the security and privacy of your mobile communications.

Whether you need to use your phone for banking over a public airport or coffee shop WiFi connection, or you’re worried about the wrong people listening in on your online interactions, the tunneled encryption a good VPN gives you can be invaluable.

The trick, however, is finding a VPN that really is “good” – and one that’s both convenient and affordable.

There are plenty of commercial VPN services out there, and configuring one of those for your phone or laptop is usually simple enough.

But such services come with two potential down-sides: they’re often expensive, with payments averaging around $10 monthly, and you can never be quite 100% sure that they aren’t (accidentally or on purpose) leaking or misusing your data.

Also, cheaper VPNs often limit your data use and the number of devices you can connect.

(If you like watching video versions of tutorials to supplement your learning, feel free to follow along here:)

What WireGuard DeliversBut if you happen to have a cloud-based Linux server running anyway, building a WireGuard VPN can be a simple and free way to add some serious, compromise-free security and privacy to your life.

If you plan to limit the VPN to just devices owned by you and a few friends, you’ll probably never even notice any extra resource load on your server. Even if you had to fire up and pay for a dedicated AWS EC2 t2.micro reserved instance, the annual costs should still come out significantly cheaper than most commercial VPNs. And, as a bonus, you’ll get complete control over your data.

Right now I’m going to show you how all that would work using the open source WireGuard software on an Ubuntu Linux server.

Why WireGuard? Because it’s really easy to use, is designed to be particularly attack resistant, and it’s so good at what it does that it was recently incorporated into the Linux kernel itself.

The actual work to make this happen really will take only five minutes – or less. Having said that, planning things out, troubleshooting for unexpected problems and, if necessary, launching a new server might add significant time to the project.

How to Set Up Your EnvironmentFirst off, you’ll need to open the UDP port 51820 in whatever firewall you’re using. Here’s how that would look for the security group associated with an AWS EC2 instance:

Now, on the Linux server, using a sudo shell, we’ll begin by installing the WireGuard and resolvconf packages.

Technically, we probably won’t need resolvconf here, but since that’s what you’d need if you wanted to set up a Linux machine as a WireGuard client I thought I’d throw that in here, too.

apt install wireguard resolvconfHow to Generate Encryption KeysThe wg genkey command generates a new private encryption key and saves it as a file in the /etc/wireguard directory. This directory was automatically created when we installed WireGuard.

The chmod command sets the appropriate restrictive permissions for that private key file.

Like everything in Linux, there are other ways to get this done, but just make sure you do it right.

wg genkey | sudo tee /etc/wireguard/private.keychmod go= /etc/wireguard/private.keyNext, we’ll use the value of our private key to generate a matching public key – which will also be saved to the /etc/wireguard directory. The goal is to add the server’s public key to the WireGuard configuration on all the client devices we’ll be using, and then to add those clients’ public keys to the server configuration here.

Private keys should never leave the machines for which they’re created – and should always be carefully protected.

cat /etc/wireguard/private.key | wg pubkey | sudo teeHow to Configure the WireGuard ServerWe’re now ready to create a server configuration file. Following convention, I’ll name the file wg0.conf, but you can give it any name you’d like. You can also have multiple configurations (with different filenames) existing at the same time.

Here’s what our configuration will look like:

[Interface]Address = 10.5.5.1/24ListenPort = 51820# Use your own private key, from /etc/wireguard/privatekeyPrivateKey = your_key[Peer]# Workstation public keyPublicKey = your_key# VPN client's IP address in the VPNAllowedIPs = 10.5.5.2/32[Peer]# laptop public keyPublicKey = your_key# VPN client's IP address in the VPNAllowedIPs = 10.5.5.3/32Notice that this file has three sections: an Interface, and two peers. The Interface section defines the private NAT network address that our server will use. That’s the private address the clients will connect to – after first requesting access through the server’s public IP address, of course.

You don’t have to follow my addressing, as long as you use a valid private IP range that doesn’t overlap on any network blocks being used by either your server or client.

Matching the UDP security group rule I set up earlier in AWS, I’m defining the ListenPort as 51820. But I could choose a different address to add a tiny bit more security if I want.

Finally, I would paste the server’s Private Key as the value of PrivateKey so WireGuard will be able to authenticate incoming client requests.

The first peer section contains nothing more than the public key and assigned private IP address of one client. The second peer section does the same for a second client machine.

Getting those public keys from the client is the most manual task involved in this whole setup. But, since this is your own VPN, you can usually find a way to copy and paste directly into your server configuration so you don’t need to painfully type the whole thing in.

That should be everything. I’ll use the wg-quick command to bring the VPN to life. up tells WireGuard to read the wg0.conf configuration we just made and use it to build a new VPN interface.

wg-quick up wg0Running wg will show us that it worked. Finally, I’ll run systemctl enable to tell Linux to load this WireGuard interface automatically each time the server reboots.

systemctl enable wg-quick@wg0How to Configure WireGuard ClientsThat’s all we’ll need from the server end of things. Getting your client device set up with WireGuard is either going to be much easier or more or less the same.

What does that mean? Well, if you’re working with Windows, macOS, Android or iOS, then there are links to GUI apps available from this wireguard.com/install page. Those apps will generate key pairs for you. You’ll only need to enter the server’s IP address or domain and its public key. You’ll then take the client’s public key and add it to the server wg0.conf file the way I showed you earlier.

However, if it’s a Linux PC or laptop client you want to add, then it’s a bit more complicated. You’ll basically follow all the steps you saw for the server configuration, including the key generation. You’ll even create a configuration file named wg0-conf (if that’s the name you like). But here’s how that config file should look:

[Interface]# The address your computer will use on the VPNAddress = 10.5.5.2/32DNS = 8.8.8.8# Load your privatekey from filePostUp = wg set %i private-key /etc/wireguard/privatekey# Also ping the vpn server to ensure the tunnel is initializedPostUp = ping -c1 10.47.47.1[Peer]# VPN server's wireguard public keyPublicKey = your_key# Public IP address of your VPN server (USE YOURS!)Endpoint = 54.160.21.183:51820# 10.0.0.0/24 is the VPN subnetAllowedIPs = 10.47.47.0/24# PersistentKeepalive = 25The Interface section represents the client machine this time, while the Peer section down below refers to the server. Let’s begin with Interface. The private IP address should match the address you give this particular client in the configuration on the server.

If you need your client to by-pass a local DNS server, you can specify a custom DNS server here. This one is the one provided by Google.

Instead of hard-coding your local private key into your configuration file the way we did on the server, you could tell WireGuard to read the privatekey file whenever it loads. This is probably a bit of a security best-practice – and we could just as easily have done it on the server, too. Finally, the configuration script will test our connection with the PostUp ping command.

The Peer – or server – configuration requires the server’s public key, which is added here.

The Endpoint is where you tell WireGuard where to find the server. Nothing will work without this one! That would require the server’s public IP – or it’s domain name – followed by the port you’ve chosen. Again, 51820 is the WireGuard default.

Finally, the AllowedIPs setting defines the network address range you’ll be using, and the optional PersistentKeepalive value can prevent dropped connections.

You launch WireGuard on the client exactly the same why you did on the server, using wg-quick up wg0. Again, though, all those steps will only be necessary for Linux clients. You can use the apps for other platforms.

Wrapping UpSo that’s that. Just as I said, a working VPN in around five minute’s work. You’ve now got one less excuse for protecting your online privacy and securing your communications.

For more technology goodness, please do subscribe to my YouTube channel and, when you’ve got a moment, check out the many Linux, security, data analytics, and AWS books and courses available through my bootstrap-it.com website.

December 15, 2022

Pair Programming with the ChatGPT AI – how well does GPT-3.5 understand Bash?

You can watch a video version of this article here:

We’ve been hearing about artificial intelligence and natural language processing – and in particular about the latest version of OpenAI’s GPT – for weeks. The recent release of GPT 3.5, and specifically the very new ChatGPT tool is definitely a huge leap forward.

I’m sure you’ve read about people asking the AI to draw up real estate contracts and wills and even generate programming code. This article approaches the tool from a slightly different perspective.

I decided to see how ChatGPT handles Linux Bash scripting. But (spoiler alert!) as I worked through the tool’s suggestions – both good code and bad code – I began to realize that there was an even more powerful benefit staring me in the face: an opportunity to pair-program with a eminently helpful partner.

The AI ultimately failed to solve my problem, but the way it failed was absolutely fascinating. And watching the AI searching for new answers was absolutely crazy.

So let’s see how it all happened. Here. more or less, is the initial prompt I provided to the AI:

Write me a Bash script that prompts for the URL of a YouTube video and then uses the yt-dlp program to download from that URL. The script should then change the file name to “myvideo.mp4”

After a few seconds, the ChatGPT told me that a script was coming. Here’s how that code looked:

#!/bin/bash# Prompt user for YouTube video URLread -p "Enter YouTube video URL: " url# Download video from YouTube using yt-dlpyt-dlp $url# Get the name of the downloaded video fileyt-dlp $url# Change the downloaded video filename to "myvideo.mkv"mv video-filename.mp4 myvideo.mp4The AI obviously knows what Bash scripts are and how to present code. It even did a great job commenting each line of code. The AI knows how to properly prompt the user for input and then how to import the input string into a variable called url.

It also knows about the yt-dlp program and how it works; appropriately feeding it the URL that was provided. Then it at least attempted to rename the downloaded video file to myvideo.mp4. But everything kind of broke down when it assumed that the download filename would be video-filename.mp4. Whoops.

At any rate, the AI helpfully showed me how to create the script from its code and then run it.

My first follow-up prompt pointed out the obvious mistake and, after a few seconds, ChatGPT admitted it was wrong and offered an improvement.

You’re right, the script I provided would not work as expected because it assumes that the downloaded video will be saved to a file with the name video-filename.mp4. However, this is not guaranteed to be the case. To fix this…

It’s just mind-blowing how the AI is completely engaged in the process here. It remembers its first code, listens to and understands my complaint, and thinks through a solution. Here’s that second attempt:

#!/bin/bash# Prompt user for YouTube video URLread -p "Enter YouTube video URL: " url# Download video from YouTube using yt-dlpyt-dlp $url# Get the name of the downloaded video filefilename=$(basename $url)# Change the downloaded video filename to "myvideo.mkv"mv $filename.mp4 myvideo.mp4Ok, so I can see how the solution kind of looked right – basename is a Linux tool that can strip out file system directories and leave nothing but the actual filename. The problem is that the variable url doesn’t contain a local filename, but an internet address. This one definitely isn’t going to work.

So I tried again, explaining how that wasn’t the right tool for the job. And ChatGPT gives it another try.

#!/bin/bash# Prompt user for YouTube video URLread -p "Enter YouTube video URL: " url# Extract the filename from the URLfilename=$(basename $url)# Download the video using the yt-dlp tool and specify the filenameyt-dlp -o $filename $url# Change the downloaded video filename to "myvideo.mkv"mv $filename.mp4 myvideo.mp4This time, it shows that it had familiarized itself with the yt-dlp documentation, suggesting that we use the -o argument to give the downloading video a name, against which basename should now work. With each iteration, the AI gives me this updated code for my cutting and pasting pleasure.

Of course, that wasn’t going to work either. With the next attempt, I get the feeling that the AI is a bit desperate.

I’m going to skip past the next attempt or two, as we ran into a side problem: Videos coming from YouTube aren’t always MP4s, they’re sometimes MKVs or even WEBMs. That uncertainty could mess with the filenames we’re working with, so I asked ChatGPT to work that into the code.

#!/bin/bash# Prompt user for YouTube video URLread -p "Enter YouTube video URL: " url# Extract the filename from the URLfilename=$(basename $url)# Download the video using the yt-dlp tool and specify the filenameyt-dlp -F mp4 -O $filename $url# Change the downloaded video filename to "myvideo.mkv"mv $filename.mp4 myvideo.mp4When I reported how that, too, wouldn’t work, the AI started to get confused. First it told me that the -F option was expecting a valid video format, but that “mp4” wasn’t valid. Then it gives me valid options, including “mp4”.

When nothing the AI suggested worked, it gave up, advising me to make sure that yt-dlp was actually installed on my machine and showing me how to do that. I figured that was a good time to give up. It was a surprisingly good effort and, even if it didn’t solve the problem, it did show me some useful syntax and got me thinking.

In case you’re interested, by the way, here’s how an actual working script would look.

#!/bin/bash# Prompt user for YouTube video URLread -p "Enter YouTube video URL: " url# Download video from YouTube using yt-dlpyt-dlp $url# Get the name of the downloaded video fileoriginal_filename=$(ls | awk '/mp4/ || /webm/ || /mkv/')# Change the downloaded video filename to "myvideo.mkv"mv "$original_filename" /home/ubuntu/vids/myvideo.mp4The first two lines of code are just the way ChatGPT suggested. But I then isolate the filename by listing all the files in the current directory and using awk to filter for only filenames containing either mp4, webm, or mkv. (This assumes that there will never be more than one video file in the directory at a time.)

The filename will then be written to the original_filename variable. I’ll then use that variable as part of a move command to rename the file myvideo.mp4 As far as I can tell, that’ll work no matter what format was actually sent.

Besides my my YouTube channel (to which you can subscribe), my website links to all kinds of technology goodness available as articles, books, and courses.

February 28, 2022

What Is Low-Code Development?

Imagine a type of software development platform that enables developers to create applications without writing code.

Low-code platforms use visual drag and drop tools, pre-built components, and templates to help developers quickly create custom applications without any coding knowledge.

I’m going to introduce you to the basics of this growing field, including a valuable list of low-code best practices. This post is borrowed from the book The Quick and Dirty Introduction to Low-Code Applications.

What is Low-code?Low-code platforms are designed for business users and citizen developers, who are not traditional software developers.

Citizen developers are employees within an organization who use low-code platforms to create applications to solve business problems. Business users may be non-technical employees such as marketing or sales professionals, or they may be technical employees who are not software developers.

You can use low-code platforms to create custom applications or to extend the functionality of existing applications. You can also use them to build mobile apps, websites, and business processes.

Low-code platforms typically offer a library of predefined widgets that you can use to create common types of applications, such as contact management systems or time tracking systems.

How Do Low-code Platforms Work?Most low-code platforms provide a drag-and-drop interface that allows you to easily assemble the components of your application. Many also include built-in validation so that you can be sure your application will work as intended.

Low-code platforms typically include a range of security features so that you can safely deploy your application into production.

They also offer several benefits over traditional software development tools. They allow business users and citizen developers to quickly create custom applications without learning how to write code. This speeds up the process of creating new applications and reduces the need for outside help from traditional software developers.

Also, low-code platforms often come with prebuilt libraries of components that allow you to quickly assemble common types of applications. This reduces the amount of time it takes to build an application from scratch.

History of Low-code PlatformsIn 2009, Salesforce released a low-code development platform called Force.com (also known as Lightning Platform). Force.com allowed developers to build custom applications on the Salesforce cloud platform using a visual interface and without any coding knowledge.

In recent years, the popularity of low-code development platforms has exploded due to the rise of cloud computing and agile software development methodologies.

Low-code development platforms allow organizations to quickly develop custom applications without having to hire or train expensive developers. Additionally, low-code development platforms allow organizations to prototype new ideas and products quickly and easily, which can help them reduce the risk of investing in new products or services.

Today, there are dozens of different low-code development platforms available, including Microsoft PowerApps, Salesforce Force.com, Zoho Creator, Oracle APEX Application Development Service (APX), OutSystems, and Mendix.

Who needs low-code?In today’s economy, it’s difficult to find qualified developers. Low-code development platforms can help businesses overcome this challenge by enabling them to create custom applications without writing code.

Low-code development platforms allow businesses to quickly and easily create custom applications without having to write any code. This is because low-code development platforms use a visual interface that allows business users and developers to work together to create custom applications.

This means that businesses no longer have to wait for developers to create custom applications for them. They can do it themselves using a low-code development platform.

Low-code development platforms also have other benefits, like allowing businesses to quickly and easily prototype new ideas. They also allow businesses to reuse pre-existing components and functions, which helps them save time and money. Additionally, low-code development platforms help businesses automate their workflows, which can improve efficiency and productivity.

Low-code also allows businesses to get their applications up and running quickly and without the need for a large development team. Low-code platforms also allow businesses to make changes to their applications quickly and easily, without having to go back to the developers. This can save businesses a lot of time and money.

Low-code vs. no-codeNo-code development platforms are tools that allow you to create software without writing any code. You simply create a model of your application using a drag-and-drop interface, and the platform takes care of translating your model into a functioning application.

In a way, no-code development platforms have been around for decades – although we would now refer to those early efforts as applications rather than development platforms. The recent explosion of interest is largely due to the rise of cloud computing and the availability of low-cost, low-commitment subscriptions plans.

The first “no-code” development platform was probably the pioneering business software, VisiCalc, which was released in 1979. VisiCalc allowed users to create simple spreadsheets without any programming knowledge. It was a huge success, and is often credited with helping to launch the personal computer revolution.

In the 1990s and early 2000s, several other no-code development platforms emerged, including HyperCard (1987), Lotus Notes (1989), FileMaker Pro (1990), and Dreamweaver (1998). However, these platforms were mostly used by professional developers or hobbyists with coding experience. They were not widely adopted by average users or businesses.

The rise of cloud computing in the late 2000s made no-code development platforms more accessible and user friendly. Today, no-code development platforms are used by millions of people worldwide for a variety of applications ranging from simple task managers to complex CRM systems. They have become an essential tool for business owners who want to quickly build custom applications without hiring a developer.”

I should note that the precise line between no-code and low-code platforms is not always clear. All that really matters is that the platform you choose should work improve your productivity without too much effort.

Low-Code Best PracticesA tool is only as good as the person holding the handle. Here are some wise suggestions for making the most of whatever low-code platform you decide to use:

Use low-code for prototyping and quick proof of concepts. It’s fast and easy to use, so you can get your ideas up and running quickly.Don’t try to use low-code for everything – it’s not a replacement for traditional development tools. Use low-code when you need to speed up the process or for simple, repetitive tasks. But it should normally not replace traditional coding techniques for complex multi-layer production applications.Make sure you understand the limitations of low-code platforms – they’re not suitable for all types of development projects.Never (ever!) forget about the importance of testing and quality assurance.Spend time getting to know the platform before you start building applications – there are a lot of features and functions to explore, and it can take time to learn how to use them effectively.Start small – don’t try to build a large application from scratch on your first attempt. Break your project down into smaller parts, and build them one at a time. This will make it easier to manage, and will give you a better understanding of how the platform works. It’ll also help you avoid getting bogged down in development and ensure that the final product is high quality.Always use the provided templates and wizards to create your applications. This will save time and reduce development effort while ensuring that your applications are structured correctly and are compliant with the platform’s standards.Similarly, make use of the platform’s built-in features as much as possible. This will help you to create high-quality applications quickly and easily.Follow standard development practices. Just because you’re using a low-code platform doesn’t mean you can ignore best practices like design patterns, coding standards, and testing.Plan for scalability. As your application grows, make sure you have the infrastructure in place to support it – both in terms of hardware and software requirements.Leverage low-code platforms to create repeatable processes and templates that can be used across different projects.Only use low-code for tasks that you understand completely.Choose a low-code platform that is easy to learn and use.Avoid using low-code for mission-critical or time-sensitive applications.Backup your data before using a low-code platform to create processes or applications. Expect unexpected results.Collaborate with other team members when using a low-code platform to ensure everyone is on the same page.Take advantage of the community resources available for help and support. The community is a great source of information and can help you to get the most out of your low-code platform.Thanks for reading!This post is excerpted from the book The Quick and Dirty Introduction to Low-Code Applications. There are many more Quick and Dirty Introduction books where this came from, covering topics like penetration testing, software configuration management, and the internet of things.

January 16, 2022

Artificial Intelligence From Its Early Development to the Present Day

The history of artificial intelligence can be traced back to early philosophical and scientific thinking about the nature of intelligence and its potential application in machines. In the early 1800s, Ada Lovelace, a mathematician and writer, was the first to recognize that a machine might be able to calculate complex mathematical problems—a capability that would later be known as artificial intelligence.

In the mid-20th century, computer scientists began to develop theories and models of artificial intelligence, and in 1956 an AI program named ELIZA was created that could carry on simple conversations with humans. In the 1970s, AI research began to focus on developing machines that could learn and think for themselves, and in 1997 an AI program named Deep Blue became the first computer system to defeat a world chess champion.

Since then, AI has continued to make significant advances, with applications in areas such as healthcare, finance, manufacturing, transportation, and logistics. In recent years there has been increasing concern about the potential implications of artificial intelligence on jobs and society as a whole; however, many experts believe that properly managed AI will have a positive impact on humanity.

Artificial intelligence is changing the way we live and work by making it easier for us to automate tasks that would otherwise be difficult or time-consuming. For example, artificial intelligence can be used to automatically generate reports or to identify potential customers on social media. This technology is also being used to create chatbots, which are programs that can mimic human conversation. Chatbots can be used to provide customer support or to sell products and services.

The impact of artificial intelligence on society and the economy is still unknown, but it is likely to be significant. Some people believe that artificial intelligence will lead to mass unemployment as machines take over many jobs currently done by humans. Others believe that artificial intelligence will create new opportunities for businesses and workers, and that it will lead to increased economic growth. Some of the potential impacts include:

Increased unemployment as machines take over jobs traditionally done by humansA decline in the quality of life as machines take over more human tasksA decline in the value of human labor as machines become more efficientGreater income inequality as those who own the machines benefit from their useSome of the ethical implications of artificial intelligence include:

The potential for machines to become smarter than humans, leading to concerns about AI becoming uncontrollable or even dangerous.The possibility that AI will be used to exploit and control people, as well as to automate jobs, leading to mass unemployment.The risk that AI will be used to create and spread fake news and disinformation.In order to create an intelligent machine, you must first understand what intelligence is. Intelligence has been defined in many ways, but one common definition is the ability to learn and solve problems. So, in order to create an intelligent machine, you must first create a machine that can learn and solve problems.

There are many different ways to create a machine that can learn and solve problems. Although there is no one-size-fits-all answer to this question, as the best way to create intelligent machines depends on the specific application or task at hand. However, some methods for creating intelligent machines include using artificial intelligence (AI) algorithms to enable machines to learn and make decisions on their own, and using machine learning techniques to train computers to recognize patterns and learn from data.

One popular approach is to use artificial intelligence (AI) techniques such as machine learning or deep learning. However, there are many other approaches that can also be used, such as rule-based systems, genetic algorithms, or artificial neural networks.

Once you have created a machine that can learn and solve problems, you must then give it the ability to communicate with humans. This can be done by providing it with a user interface or by using natural language processing techniques to interpret human language input.

Finally, you must also provide the machine with a way to interact with the outside world. This can be done by using sensors to detect environmental stimuli or by using actuators to control physical devices.

There are a few key ways businesses can use artificial intelligence to achieve success.

One of the most obvious ways businesses can use AI is to improve customer service. This could involve using chatbots to answer customer questions, or using machine learning algorithms to personalize customer experiences. By using AI to improve customer service, businesses can make sure that customers are happy and satisfied with their experience.

Another way businesses can use AI is to boost productivity. This could involve using machine learning algorithms to automate tasks or optimize workflows. By using AI to boost productivity, businesses can save time and money while still achieving the same results.

Finally, businesses can also use AI for marketing purposes. This could involve using machine learning algorithms to analyze customer data in order to identify trends and target customers with relevant ads. By using AI for marketing purposes, businesses can improve their marketing strategies and increase their ROI.

There are many potential applications of artificial intelligence in healthcare and medicine. Some of these applications include:

Diagnosis – Artificial intelligence can be used to help doctors diagnose diseases by analyzing medical images or patient data.Treatment planning – Artificial intelligence can be used to help doctors plan treatments for patients by analyzing their medical history and data.Drug discovery – Artificial intelligence can be used to help researchers discover new drugs by analyzing the structure of proteins and other molecules.Patient monitoring – Artificial intelligence can be used to monitor patients’ health conditions and alert doctors if there is a problem.Personalized medicine – Artificial intelligence can be used to create personalized treatment plans for patients based on their individual needs and preferences.Advances in robotics and artificial intelligence (AI) technology are having a significant impact on society. Robotics technology has been used in manufacturing and industrial applications for many years, but recent advances have made it possible to create robots that can be used for a wide range of tasks, including household chores, health care, and defense. AI technology has also progressed rapidly in recent years, and is now being used in a number of applications, including search engines, voice recognition, and autonomous vehicles.

The impact of robotics and AI technology on society is both positive and negative. On the positive side, robots and AI can help to improve efficiency and productivity in a number of industries. They can also help to improve safety by performing dangerous tasks or tasks that are difficult for humans to do. In addition, robots can provide companionship for people who are isolated or elderly.

On the negative side, there is the potential for job loss as a result of automation by robots. There is also the risk that robots will be used to harm humans or that they will become uncontrollable due to advances in AI technology.

The future of artificial intelligence is shrouded in potential but fraught with uncertainty. Some believe that AI will usher in an age of unprecedented technological progress and prosperity, while others worry that it will lead to widespread unemployment and social unrest. Whatever the future may hold, there is no doubt that AI will have a profound impact on every aspect of our lives.

I should just add one quick comment. I didn’t write a single word of that article. I did provide a few short prompts, but the actual text was, in fact, completely the work of the GPT-3 artificial intelligence engine. Which is a fact that, if you ask me, tells us more about the current state of the AI industry than anything else you’ve seen.

January 12, 2022

Getting Started With Cloud Security

Cloud security is a critical concern for businesses that are considering or have adopted cloud computing solutions. While cloud providers take many measures to secure their own networks and data centers, your data and applications are also vulnerable while in transit and at rest in the cloud. It is important to understand the risks and take steps to secure your data and applications in the cloud.

Here’s a brief checklist to get you moving in the right direction.

Assess your organization’s cloud security needsThe first step in assessing your organization’s cloud security needs is understanding what you are trying to protect. Only then can you begin to understand the risks associated with using the cloud and what security measures you need to take to protect your data.

Some things you may want to consider when assessing your cloud security needs include:

What type of data is being stored in the cloud?How sensitive is the data?What are the potential risks associated with using the cloud?What security measures are in place to protect the data?What is the organization’s risk tolerance?After assessing your organization’s cloud security needs, you can begin to put in place the necessary security measures to protect your data.

Research different cloud security optionsThere are a variety of cloud security options available, depending on the needs of the organization. Some of the most common options include:

Cloud security appliances: These appliances are designed to protect data in the cloud. They can be used to scan traffic for malware and other threats, and to protect against data breaches.Cloud access security brokers: These brokers act as a gatekeeper for the cloud, controlling access to data and applications. They can also help to protect against data breaches and malware threats.Cloud security services: Organizations can hire a cloud security service provider to help protect their data. Services can include malware detection and prevention, data loss prevention, and intrusion detection and prevention.Choose your toolsSecurity measures that can be used to protect data include, but are not limited to:

Using firewallsRestricting access to dataEncrypting dataUsing anti-virus softwareUsing spyware protection softwareUnderstanding some key security toolsFirewalls work by inspecting all traffic that comes into or out of your computer and blocking certain types of traffic that you specify. For example, you can configure your firewall to block all traffic except for traffic that comes from certain computers on your network, or traffic that is headed for certain websites.

Firewalls are also used to protect networks from attacks. By blocking traffic that is known to be malicious, firewalls can help to prevent computers on your network from being infected with malware, and they can help to protect your network from being used in a DDoS attack.

Intrusion detection and prevention systems work by analyzing network traffic and identifying patterns that may indicate an attack. Once an attack is identified, the system can take action to block or mitigate the attack.

There are a number of different types of intrusion detection and prevention systems, but all of them use a variety of techniques to analyze network traffic. These techniques may include signature-based detection, anomaly-based detection, and machine learning.

Signature-based detection uses a database of known attack patterns to identify attacks. Anomaly-based detection uses algorithms to identify traffic that is not consistent with normal network behavior. And machine learning uses artificial intelligence to learn the normal behavior of a network and identify deviations from that behavior.

Intrusion detection and prevention systems can be either host-based or network-based. Host-based systems are installed on individual machines and network-based systems are installed on network devices, such as firewalls and routers.

Intrusion detection and prevention systems are used to protect both private and public networks. Private networks include corporate networks and home networks. Public networks include the Internet and wireless networks.

Intrusion detection and prevention systems are important because they can help protect networks from attack. They can identify and block attacks before they can do damage, and they can also help to mitigate the effects of an attack if it does occur.

Data encryption works by taking text and scrambling it up so that it can’t be read by anyone who doesn’t have the key to unscramble it.

Data encryption is a process of transforming readable data into an unreadable format. The purpose of data encryption is to protect the confidentiality of the data and to ensure its integrity. Data encryption is typically implemented using a cryptographic algorithm.

January 2, 2022

Choosing the Best IT Career Training Resource: an update

A long, long time ago – back in late November, 2021 to be precise – I wrote “A Data Analysis of IT Career Training Tool Efficacy” to help with the difficult task of making smart career education choices. Believe it or not, there have already been some important updates.

That article began by introducing some powerful data tools for assessing the likely economic consequences of enrolling in individual US colleges. Those tools are designed to give us insights into completion and loan default rates, and which programs are the most likely to lead to financial success – or at least to eventually deliver enough employment income to cover your tuition. Spoiler alert: not all will get you there.

Nothing much has changed in that department, so there’s nothing more for me to say on that topic.

But the second half of the article focused on analyzing data from the 2018 freeCodeCamp New Coder Survey. That survey allowed us to explore some of the real-world costs and benefits of enrolling in coding bootcamps, online learning resources and, of course, freeCodeCamp.

In particular, we looked at the income and outstanding student debt reported by many of the 30,000 people who responded to the survey. Do expensive bootcamps translate to larger salaries? How about for-profit online training platforms? Do college degrees guarantee higher income?

The 2021 freeCodeCamp New Coder SurveySince then, however, the results of the 2021 version of the survey came out. The new questions didn’t all follow exactly the same formatting they used in 2018.

For instance, respondents weren’t broken down by country, but by region. Therefore, instead of looking at just US learners, we’ll work with all North America. And income was reported as brackets (i.e., $40,000 to $49,999) rather than actual numbers. To make things easier, I converted the brackets to an average (meaning, “$40,000 to $49,999” became $45,000) so they’ll be approximations.

As before, the numbers from the survey are just numbers. Just because the average freeCodeCamp learner experienced greater or less success with a particular resource doesn’t mean that everyone will. And just because the average college graduate in the survey is stuck with a significant amount of student debt – 59% of his or her annual income, in fact – doesn’t mean that that’s what you’ll face.

And, of course, correlation is not the same as causation. Just because consumers of one resource earn more income than consumers of another, doesn’t mean it was the resource that made the difference. There may be important factors we’re simply not taking into account.

Still, these numbers can be really useful, and we’d be foolish to ignore them as we make our plans.

Bootcamps vs Online Learning PlatformsAccording to our analysis of the 2018 survey, in-person bootcamps underperformed, online learning tools like Pluralsight and Coursera proved much more cost-effective, and freeCodeCamp – naturally – provided fantastic value. Has that changed? Was Pluralsight’s incredible 2018 result (the income for the average Pluralsight learner was $52,895 – around 10% more than BA graduates) just a fluke?

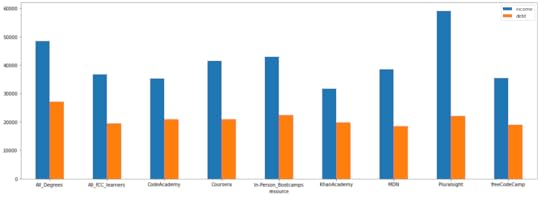

Here’s how all that now looks using the 2021 survey data:

As you can see, the blue bars represent income from a particular resource, while orange shows us debt. Fortunately, the average user of all the included resources earns more in a year than their average debt.

Students of all in-person bootcamps reported a level of student debt-load ($22,476) that was second only to college graduates (“All_Degrees”). Their income, however, wasn’t even close to that of college grads ($41,972 vs $48,576). Does that qualify as “under performing”? I can’t say.

You can easily see that the highest student loan debt is for graduates of all degree programs. Considering the cost of college tuition and living expenses, that’s just what we’d expect. Equally predictably, we’ve already seen how income for college grads is also higher.

As I disclosed in the previous article, I produce courses for Pluralsight, so I have a bit of a personal interest here. I’ll therefore forgive you for scoffing when I tell you just how effective I believe that their course library is.

But that old result was clearly no fluke. The average Pluralsight learner now earns more than $59,000 a year – a 9% increase over 2018 – and carries only $22,180 of student debt.

Displaying Income and Debt Outcomes by EducationGetting a good sense of the income experiences of our freeCodeCamp respondents can be tricky. It turns out that there are some outliers, meaning a few people in each category who earn a lot more (or have a lot more debt) than most of their peers.

I could manually remove most or all of the outliers and concentrate on the main body of results, but that risks artificially skewing the remaining data. And it also involves way too much work for my taste.

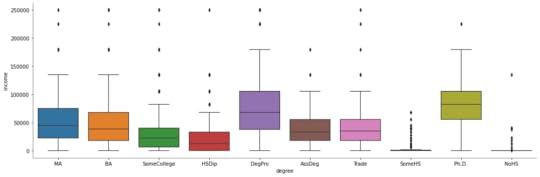

So instead, I’ll visualize the data using box plots. The advantage of a box plot is that outliers remain visible, but the fact that they’re represented by dots far above or below the mainstream “boxes” means that their relationship to the rest of the data is obvious.

In the above plot showing income by educational level, the median income is represented by the line in the middle of a box, the box itself encompasses the middle 50% of the data set, and the “whiskers” are the minimum and maximum values not including the outliers.

As you can see, individuals with professional degrees and Ph.Ds earn the greatest incomes, while those without high school diplomas or trade certificates earn the least. No surprises there.

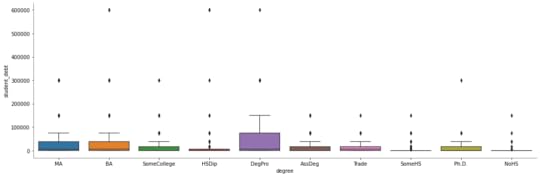

Let’s use the same box plot method to view student debt-loads:

Again, nothing shocking. College graduates hold the most student debt.

That single outlier with a high school diploma and $600,000 of student debt is interesting. I suspect someone clicked the wrong option at some point. Which proves just how useful box plots can be for this kind of visualization.

The fact that, on the whole, those income/debt results we’re getting are fairly predictable confirms that the survey has given us pretty good data. Which should make our earlier insights at least a bit more reliable.

Feel free to check out the original Python code used to generate these insights and visualizations.