Jurgen Appelo's Blog

November 27, 2025

The Agentic Organization

Driving through Peru last month taught me more about organizational design than most MBA programs ever could.

Picture this: you're cruising along on a highway, minding your own business, when suddenly the road dumps you straight into the heart of some random city you've never heard of. No warning. No bypass. No escape route. One minute you're doing highway speeds; the next you're crawling past vendors hawking empanadas and ceviches while three-wheeled taxis cut you off and camouflaged speed bumps strategically hidden every fifty meters try to wreck your rental car.

Half an hour of pure chaos later, you emerge on the other side of town, the rental car miraculously intact, wondering why anyone thought it was brilliant urban planning to funnel every piece of through-traffic directly past the town square. Sometimes, my partner and I would just give up, park the car, and grab lunch while contemplating the obvious: there had to be a better way to let fast-moving traffic skip a tour of the local mercado.

Turns out, this traffic challenge perfectly illustrates what's broken in many organizations today.

The Theory of Constraints, dreamed up by Dr. Eliyahu M. Goldratt, cuts through management BS with surgical precision. Find the single biggest bottleneck in your system. Fix it. Everything else is theater. The approach doesn't care about your org chart, your processes, or your feelings; it cares about throughput. Goldratt's five-step process is beautifully ruthless: identify the constraint, try to get the most out of it, subordinate everything else to it, then elevate it to another level, and repeat. No fluff, no improvement committees, just relentless focus on what actually matters now.
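
For readers who like to see the loop written down, here is a minimal Python sketch of that cycle. The stage names and hourly rates are invented for illustration, and doubling the constraint's capacity is just one assumed way to "elevate" it; none of it comes from Goldratt.

```python
def find_constraint(rates):
    """Return the name of the slowest stage (the constraint)."""
    return min(rates, key=rates.get)


def throughput(rates):
    """A serial system moves only as fast as its slowest stage."""
    return min(rates.values())


# Hypothetical value stream: items each stage can handle per hour.
stages = {"intake": 120, "review": 15, "approval": 40, "delivery": 90}

history = []
for _ in range(3):
    constraint = find_constraint(stages)            # step 1: identify
    history.append((constraint, throughput(stages)))
    stages[constraint] *= 2                         # step 4: elevate (assumed fix)
    # step 5: repeat, because the constraint may have moved

for name, rate in history:
    print(f"constraint={name}, system throughput={rate}/hour")
```

In this toy run the constraint moves from review to approval once review is fast enough, which is why the last step is to go back and look again.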

Apply this to traffic, and the solution becomes rather obvious. When high-speed traffic gets tangled with low-speed traffic, the slow stuff becomes the constraint that drags everything down to its sluggish pace. Solution: build a ring road! Keep the highway traffic moving around the city instead of straight through it. Add proper on-ramps and off-ramps so people can switch lanes safely when they need to. Everyone wins: speedsters keep their velocity, locals get their peace, and nobody loses their chassis over a speed bump.

This same principle shows up everywhere, though most organizations are too busy admiring AI-generated meeting notes and PowerPoint slop. Fast-moving value streams crashing into slow-moving process steps? System-wide slowdown. High-speed trains sharing railroad tracks with freight wagons? Delays for everyone. Broadband internet packets pushing themselves through a landline? Digital Stone Age. The pattern is universal: when you mix different speeds on the same infrastructure, you get the worst of both worlds.

But here's what many people forget: the slow lane enables the fast lane, not the other way around. Those highways don't build themselves. They're constructed, maintained, and funded by the people puttering around in their tuk-tuks. Speed doesn't equal importance. Strategy—real strategy—almost always happens in the slow lane, not the fast one.

Which brings us to the organizational intelligence revolution that most companies can still only dream of.

Do you like this post? Please consider supporting me by becoming a paid subscriber. It’s just one coffee per month. That will keep me going while you can keep reading! PLUS, you get my latest book Human Robot Agent FOR FREE! Subscribe now.

The Non-Agentic Trap

Walk into any "AI-powered" organization today and you'll probably witness a masterpiece of inefficiency. Employees are prompting ChatGPT, wrestling with Copilot, and sweet-talking Gemini all day long. They share their clever prompts in Slack channels, celebrate their AI wins in all-hands meetings, and generally feel very cutting-edge about their human-machine collaboration.

But look a bit closer. Every AI interaction starts with a human typing something and ends with a human reading something. The AIs never talk to each other. They execute nothing independently. They make no meaningful decisions or kick off workflows without a human standing there, hand on the wheel, ready to intervene. This is humans-in-the-loop territory, where artificial intelligence exists purely as a fancy autocomplete for human workflows.

This is the equivalent of those Peruvian towns with no bypass. All organizational intelligence—human and artificial—gets funneled through the same bottleneck: the human brain. And guess what happens? Everything slows down to human speed. The AI might be capable of processing thousands of documents per second, but it has to wait for Susan from product management to read the summary, think about it over lunch, discuss it in Tuesday's meeting, and maybe, just maybe, decide what to do about it by Friday.

The constraints here are Susan's brain, Susan's calendar, and Susan's need to feel important by being in every decision loop. The result is organizational traffic jams that would make a Peruvian city center blush.

The Agentic Alternative

Now imagine a different world. Employees orchestrate AI agents that can work autonomously, share results directly with other AI agents, initiate workflows without human approval, and manage entire value streams from start to finish. Humans are still crucial, but they're watching from the sidelines, monitoring dashboards, setting strategic direction, and intervening only when something needs the slow, deliberate thinking that humans excel at.

This is humans-on-the-loop: artificial intelligence running its own native workflows at AI speed while humans focus on what humans do best. The AIs take the highway; the humans work the town square.

This isn't science fiction. Some companies are building these systems right now. They're creating separate infrastructure for AI-driven value streams, letting machines talk to machines while humans focus on the strategic work that actually benefits from human judgment, creativity, and wisdom.

The transformation isn't just about speed—though speed matters when you're trying to spot risks and seize opportunities faster than your competitors. It's about using each type of intelligence where it adds the most value. AI excels at processing massive amounts of data, recognizing patterns, and executing well-defined tasks at superhuman velocities. Humans excel at setting context, making judgments with incomplete information, and thinking strategically about wicked problems.

Building the Bypass

Agentic organizations don't happen by accident. They require deliberate architectural choices that most companies are afraid to make. You need to build a separate infrastructure for AI workflows, with clear protocols for when and how humans intervene. You need to design handoff points where human intelligence and artificial intelligence can collaborate without creating bottlenecks. And you need to be ruthless about identifying which processes truly need human involvement versus which ones are being slowed down by human ego.

Most importantly, we need to accept that artificial intelligence doing its work without constant human supervision is our next human challenge. The goal isn't to keep humans in control of everything; it's to let humans control what matters while letting machines handle everything else.

This requires a fundamental shift in how we think about work. Instead of asking, "How can AI help humans be more productive?" the question becomes, "What work should humans do, and what work should go to the machines?" The first question keeps you trapped in the non-agentic model, where AI is just a fancy tool in human-first workflows. The second question opens up the possibility of true organizational intelligence that operates at multiple speeds simultaneously.

Different Lanes, Different Speeds

I'm betting my career on agentic organizations. As I described earlier in "Scrum Is Done," we need to operate at multiple speeds without everything grinding to a halt. Strategic thinking happens slowly, deliberately, with humans wrestling with ambiguous problems and making judgment calls that shape the organization's direction. Operational execution happens fast, with AI agents processing transactions, updating systems, generating reports, and handling routine decisions without human intervention.

The magic happens when these different intelligences enable rather than obstruct each other. Humans set the strategic direction and design the guardrails. AI agents execute within those parameters at AI speed. When edge cases arise or strategic pivots are needed, the system should know how to escalate to human judgment. When humans make strategic decisions, those decisions get translated into AI-executable workflows that run autonomously.
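
One way to picture that escalation rule is as a small routing function. The sketch below is only an illustration under assumed names: `Guardrails`, `route`, the refund limit, and the action list are all hypothetical, not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class Guardrails:
    """Limits set by humans; the agent may act freely inside them."""
    max_refund: float
    allowed_actions: frozenset


def route(action, amount, rails):
    """Return 'agent' if the request is inside the guardrails, else 'human'."""
    if action not in rails.allowed_actions:
        return "human"   # unknown kind of work: escalate
    if amount > rails.max_refund:
        return "human"   # edge case beyond the agreed limit: escalate
    return "agent"       # routine case: stays in the fast lane


# Hypothetical guardrails and requests, for illustration only.
rails = Guardrails(max_refund=100.0,
                   allowed_actions=frozenset({"refund", "reship"}))

requests = [("refund", 25.0), ("refund", 900.0), ("cancel_contract", 0.0)]
for action, amount in requests:
    print(action, amount, "->", route(action, amount, rails))
```

The humans own the `Guardrails` values; the agent never waits for anyone unless a request falls outside them.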

You know you've built an agentic organization when the machines can safely ignore the humans for any kind of work where humans are more than happy to be ignored. Let people focus on what they do best: thinking slowly, creatively, and strategically about hard problems. Let the AI focus on what it does best: processing information and executing defined workflows at superhuman speed.

That's the real promise of the agentic organization: not replacing human intelligence, but giving it room to operate where it adds the most value while everything else runs in the fast lane. Different intelligences, different speeds, different infrastructure—each optimized for what it does best.

I look forward to a future without needless traffic jams around the town square. No more bottlenecks disguised as collaboration. Just clear lanes for different types of work, with proper on-ramps and off-ramps for when you need to switch between them.

And next time, I hope Peru will have a few more ring roads.

November 19, 2025

It's Not Always Cold in the True North

More than half of traditional corporate enterprises list "Integrity" as a corporate value. Beautiful. Makes the shareholders feel all warm inside. Of course, these are also the same companies incentivizing employees to crush quarterly targets first and maybe tell the truth later if there's bandwidth left over. Need proof? Wells Fargo has collected more scandals than Prince Andrew, all while "integrity" gleamed from their corporate website like a participation trophy.

That charade is ending.

AI isn't just rewiring how companies operate. It's forcing them to face who they actually are. Those cringe-worthy mission statements and inspirational wall art floating around corporate offices are about to become operational code. No more hiding behind managerial poetry.

When algorithms make decisions, they won't charitably interpret your intentions, fill gaps with common sense, or translate your corporate word salad into something workable. They'll do exactly what you tell them to do. Which means you better know what your organization actually stands for, not what sounds good in the annual report.

The AI revolution has delivered an unintended consequence: it demands radical honesty about organizational identity. Companies that thought they could coast on generic corporate speak are discovering that artificial intelligence has zero tolerance for executive fluff and managerial nonsense.

The Illusion of North

Let's start with a humbling cosmic truth: cardinal directions are complete human fiction. "Go West" made for a catchy disco tune, but in the vast indifference of space, it's meaningless advice. Despite what leadership gurus preach, there is no "True North"—not in business, not anywhere.

In the universe's grand scheme, up and down are quaint suggestions. Even on this spinning rock called Earth, "North" is just a convenient lie—a cognitive crutch we invented to avoid wandering in circles. Other species manage fine with "warmer" versus "colder," but humans needed something more abstract to feel superior.

We pointed at the Polestar or magnetic fields and collectively agreed to pretend a specific direction held objective truth. Cardinal directions? Pure fabrication. But a remarkably useful one.

This shared delusion prevents collective paralysis. Marco Polo, Christopher Columbus, and Roald Amundsen would testify that this convenient fiction helped them reach places they'd never have found otherwise. Without agreed-upon coordinates, "forward" becomes opinion, and collaboration becomes chaos.

Without this invented framework, teams drift toward whatever feels comfortable instead of working toward what matters. We create these coordinates not because they're carved into reality's bedrock, but because alignment among humans requires a shared narrative—a story we can all believe in. Stories about there (where we want to go) versus here (what we're escaping), whether those destinations are real or imagined.

This is organizational identity's function. Purpose, Vision, Mission, Values, and Principles serve as our narrative compass points. They're mental constructs—intangible and invented—but they're the useful fiction that keeps groups moving in the same direction rather than meandering toward whatever seems warmest.

Purpose: Your Existential Anchor

Organizational purpose answers the most fundamental question any entity faces: Why should you keep existing? Not "how do you extract profit." (That's survival mechanics, not purpose.) We're talking about the difference you're attempting to make, the human need you address, the reason anyone should care you showed up.

Purpose functions as your North Star—that fixed reference point that remains constant while everything else shifts. Microsoft's purpose wrestles with human potential and technological empowerment. That's not marketing copy; it's an existential commitment that survives strategy pivots and market chaos.

Most companies mistake busy work for purpose. They confuse what they do with why they matter. Real purpose transcends quarterly earnings calls and survives leadership changes.

Purpose: Why do we exist? (my example)

To reimagine collaboration in the age of intelligent agents.

We help organizations shift from hierarchical control to networked alignment—enabling ethical, adaptive collaboration between humans and machines.

We don't champion any single platform, protocol, or product. We build conditions for a future where no one actor owns how we work together.

Vision: Your Destination Beacon

Vision statements answer a deceptively simple question: What do we want to become? Not your current reality, but the compelling future state you're building toward. Think of it as your organizational GPS destination—the point on the horizon that helps navigate when paths get messy.

Purpose is your moral compass (why you exist); vision is your destination (where you're headed). Purpose connects to larger human needs and rarely changes. Vision peers 5-10 years ahead and describes the world you're trying to create.

Google's vision "to provide access to the world's information" paints a clear picture of barrier-free knowledge. Effective vision statements walk a tightrope: ambitious without being delusional, inspiring without being vague, specific enough for direction while broad enough for creative execution. Most fail because they're either too grandiose to believe or too generic to be useful.

Vision: Our Destination Beacon (my example)

We're building toward Networked Agentic Organizations (NAOs)—where autonomous agents and empowered people co-create value across open, evolving ecosystems.

In this future, work is negotiated, decentralized, and grounded in mutual respect—between humans, machines, and the systems they inhabit.

Mission: Your Current Job Description

Mission is what you actually do right now. Unlike vision (future aspiration) or purpose (existential why), mission lives in the present tense. Purpose provides inspiration, vision provides destination, mission provides the current route.

IKEA's mission—"To offer a wide range of well-designed, functional home furnishing products at prices so low that as many people as possible will be able to afford them"—is as straightforward as their assembly instructions (theoretically). The best missions balance specificity for direction with flexibility for growth.

Most mission statements fail because they're either so broad they're meaningless ("providing solutions") or so narrow they become straitjackets. The sweet spot delivers operational clarity that enables strategic flexibility.

Mission: Our Current Job Description (my example)

We cultivate a community of practice—and practice what we preach—by developing a pattern language for structuring, distributing, and governing work between humans and agents.

Values: Your Moral DNA

Company values represent fundamental beliefs about what matters—your organizational DNA influencing everything from boardroom decisions to break room conversations. They shape how you treat employees, customers, and the world. When authentic, they deliver tangible benefits: cultural cohesion, talent magnetism, engagement, and autonomous decision-making at scale. When inauthentic... well, you still make headlines, just not the kind you want.

The difference between bland, ineffective values like "integrity" and specific ones like Patagonia's "protecting the home planet" shows why specificity matters. Specific values guide real decisions instead of decorating conference rooms (or bringing gleeful smiles to the faces of news reporters).

Values only work when they're authentic. Leadership hypocrisy—saying one thing, doing another—doesn't just neutralize values; it breeds active cynicism. In our transparency-obsessed age, that cynicism spreads fast and costs more than most executives realize.

Values: Our Moral DNA (my example)

What do we stand for, no matter what? (Borrowed from the book Human, Robot, Agent)

Fairness

We treat others equitably without bias. Humans and AIs must both commit to just outcomes.

Reliability

We show up, follow through, and deliver. Trust emerges through consistent performance—from people and machines.

Safety

We protect others from harm—physical, emotional, and systemic. Safety is non-negotiable.

Inclusivity

We create space for everyone. We amplify marginalized voices and build systems serving the full spectrum of humanity.

Privacy

We respect boundaries and protect data. Dignity requires discretion—from all agents.

Security

We defend what matters. Resilient systems—and people—must resist manipulation and protect the commons.

Accountability

We own our actions and consequences. No excuses—from anyone or anything.

Transparency

We explain our reasoning. Whether human or algorithmic, decisions must be traceable and honest.

Sustainability

We consider impact beyond the short term. The future of work can't come at the planet's expense.

Engagement

We approach work with curiosity and care. Great collaboration—human or artificial—feels energizing, not extractive.

Principles bridge the gap between moral ideals and Monday morning decisions. While values might declare "we believe in honesty," principles spell out what that means operationally: "we never mislead customers, even when it costs us a sale."

Values are your moral compass—core beliefs about right and wrong. Principles are your detailed map—specific, actionable rules for navigating daily decisions. Values answer "what we believe." Principles answer "how we act on those beliefs."

Amazon's Leadership Principles like "Customer Obsession" and "Invent and Simplify" function as operational tools making abstract values tangible and testable. They might be executive theater, but they're theater with impact, evidenced by Amazon's mind-bending growth over three decades.

Principles: Our Behavioral Algorithms (my example)

How do we act on our values—concretely? (Borrowed from the book Human, Robot, Agent)

We Watch the Interconnected Environment

Design for complexity, not simplicity. Track second-order effects, enable cross-boundary flow, and treat systems as entangled webs—not isolated silos.

We Focus on Sustainable Improvements

Solve problems at the root. Prioritize long-term impact over short-term fixes, and invest in change that compounds over time.

We Prepare for the Unexpected with Agility

Build flexibility into everything. Use options, scenarios, signals, and slack to stay ahead of volatility and shift with confidence.

We Challenge Mental Models with Diversity

Break the bubble. Invite difference, confront assumptions, and use cognitive variety to unlock creative breakthroughs.

We Seek Feedback and Learn Continuously

Build tight feedback loops. Make reflection routine, and treat every input as an opportunity to adapt and evolve.

We Balance Innovation with Execution

Don't just invent—deliver. Create space to explore, but anchor it with discipline and direction.

We Take Small Steps from Where We Are

Start local, think systemic. Use light interventions to trigger larger change, and leverage what's already in motion.

We Push for Decentralized Decision-Making

Distribute control. Trust autonomous teams, and let coordination emerge through clear interfaces—not top-down command.

We Grow Resilience and Anti-fragility

Don't just survive—get stronger under stress. Design for bounce-back, adaptation, and opportunity in disruption.

We Scale Out with a Networked Structure

Structure for emergence. Connect through platforms, protocols, and peer networks—not rigid hierarchies.

In AI-enabled organizations, our narrative frameworks must translate into machine-readable guidelines, decision criteria, and algorithmic constraints. This means embedding ethical guidelines directly into how AI systems make decisions. Your Purpose, Vision, Mission, Values, and Principles (PVMVP) need to inform executable code.

Traditional enterprise PVMVP statements read like template exercises:

"To be the leading [industry] company providing innovative [products/services] that create value for our [stakeholders] while maintaining the highest standards of [virtue]."

These might be technically correct, but they're completely forgettable. They provide zero real guidance because they could apply to virtually any company in any industry. And no AI agent can act on them.

Artificial intelligence isn't just adding complexity to organizational guidelines—it's fundamentally changing the rules. Traditional organizations survived with vague or inconsistent "guidance" because humans naturally filled gaps, interpreting unclear directions through cultural context and personal judgment. AI systems lack that interpretive charity.

When an AI system optimizes for "customer engagement," it pursues that goal relentlessly regardless of whether engagement comes from valuable content or addictive misinformation. This creates what researchers call the "explicit encoding" challenge—a corporate version of Nick Bostrom's paperclip problem.

In AI-dependent organizations, directional frameworks can't remain implicit cultural knowledge. They must become machine-readable guidelines, decision criteria, and algorithmic constraints. The shared story must be written so machines can understand and act on it.
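
As a rough illustration of what "machine-readable" could mean here, the Python sketch below writes one principle as an explicit check that an engagement-maximizing agent has to pass. Everything in it is an assumption made for the example: the candidate items, the scores, and especially the `misleading` flag, which in practice would be the hard part to produce.

```python
# Hypothetical candidate actions an engagement-optimizing agent might consider.
candidates = [
    {"id": "clickbait", "engagement": 0.92, "misleading": True},
    {"id": "how_to_guide", "engagement": 0.71, "misleading": False},
    {"id": "product_update", "engagement": 0.40, "misleading": False},
]

# Principles written as explicit checks instead of implicit culture.
principles = {
    "never_mislead_customers": lambda c: not c["misleading"],
}


def choose(candidates, principles):
    """Pick the most engaging candidate that violates no principle."""
    permitted = [c for c in candidates
                 if all(check(c) for check in principles.values())]
    return max(permitted, key=lambda c: c["engagement"]) if permitted else None


print(choose(candidates, {})["id"])          # no principles encoded
print(choose(candidates, principles)["id"])  # principle enforced
```

With no principles encoded, the agent simply picks whatever scores highest on engagement. Once the check is part of the decision, the misleading option is no longer on the table, whatever its score.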

The Governance Opportunity

Beyond the inevitable codification challenge, several opportunities emerge:

Dynamic Navigation

Accelerating technological change compresses strategic time horizons. Ten-year visions and five-year missions might need reassessment every other year, perhaps more often. Organizations that encode their PVMVP compass can build adaptability into operational frameworks while maintaining stability for meaningful guidance. The story can be rewritten much more often.

Analytical Partnership

AI's analytical capabilities can reveal whether companies actually live their stated PVMVP frameworks. Machine learning algorithms analyze employee surveys, customer feedback, and social media sentiment to identify gaps between stated values and actual behavior. AI processes market trends, customer needs, and technological possibilities to detect discrepancies and nudge narrative refinement.

Algorithmic Governance

As PVMVP frameworks become operational through code, AI systems can enforce principle-aligned policies automatically—routing resources toward projects aligning with company values, screening candidates for cultural fit, or flagging decisions conflicting with stated principles. This represents a shift from forgettable guidelines to algorithmic guardrails. The narrative structure as an actual navigation system.

However, one crucial challenge remains:

When AI agents make decisions based on programmed values and principles, who's responsible for the outcomes? New accountability frameworks must address shared responsibility between human designers and AI systems, differentiating between human error, AI system failure, and emergent AI behavior conflicting with intended principles.

Stakeholders expect companies to demonstrably live up to stated intentions. AI-enabled capabilities amplify both positive and negative organizational capabilities. Companies with genuine, well-implemented frameworks can use AI to scale impact dramatically. Organizations engaging in purpose-washing or operating with misaligned systems will find their false stories exposed more quickly and publicly than ever.

What counts is a cohesive story.

It's All a Fantasy

Fantasy writers love pretending they're running climate simulations when they're just slapping "cold north, warm south" on maps because it's easy and nobody wants to explain axial tilt to a dragon.

Game of Thrones epitomizes this lazy approach: Westeros behaves like our Northern hemisphere... except winter lasts however long the plot needs. The Wheel of Time does the same trick—ice and snow up by the Blight, balmy kingdoms in the south—because why redesign a planet from scratch when you can borrow European temperature settings? Even The Lord of the Rings follows the same pattern: Mordor simmers in the southeast while the frigid Forodwaith sits conveniently above everything else.

It's not worldbuilding; it's narrative convenience. Authors map ecosystems where cold "north" equals danger and mystery while warm "south" equals clothing-optional comfort and exotic spices. When I once challenged Tad Williams about using this same device in his Otherland and Shadowmarch series, he seemed offended, as if it was beneath him to acknowledge reusing the same tired storytelling trick.

But I understand the pragmatism. Story rules over everything else. Time wasted explaining alternative compasses to readers is a distraction. We need easy agreement on what's where, then focus on what matters: where's the treasure (usually east or west) and where's the undead lair (typically north).

In business adventuring, the same storytelling rules apply.

The Human-Centered Paradox

AI has created an unexpected imperative: organizations must become more human-centered, not less. The technology promising to automate human tasks simultaneously demands greater clarity about human values, purposes, and flourishing.

This isn't philosophical navel-gazing. In a world where algorithms make countless organizational decisions, decision quality depends fundamentally on the quality of Purpose, Vision, Mission, Values, and Principles embedded in digital systems. Companies treating PVMVP narratives as afterthoughts or marketing exercises do so at their peril.

Organizations thriving in the AI age will:

Articulate clearly why they exist, where they're going, what they do, what they stand for, and how they act on those beliefs

Embed PVMVP frameworks authentically into operations, both human and artificial

Use technology to amplify positive impact rather than simply maximizing narrow metrics

Create shared operating systems guiding both people and machines toward common destinations

Maintain human stewardship over difficult questions while leveraging AI capabilities

AI is forcing confrontation with questions we've often avoided: What are we actually trying to accomplish? What kind of world are we building together? How do we ensure our most powerful tools serve our highest purposes?

Companies figuring this out won't just survive the AI revolution—they'll help ensure it serves human flourishing rather than undermining it. The imagined North, East, South, and West are waiting. Whether your organization is ready to navigate by its own compass remains an open question.

November 13, 2025

The Future of Consulting

My husband's AI-generated itinerary looked good on-screen: departing from Puno at Lake Titicaca and then driving through Peru's spectacular southern terrain, with magnificent vistas across technicolor mountains and distant volcanoes. We'd never seen such geological variety—different rocks, different sands, different mountain forms, sometimes teeming with life, sometimes desert-dead.

The afternoon drive through Colca Canyon was pure magic. Deeper than the Grand Canyon and—mercifully—without the tourist hordes. But after sunset, our road trip took a dark turn.

ChatGPT's clever route, dutifully translated into Google Maps' "quickest route," demanded another five hours to reach Camana on Peru's coast. Under normal circumstances, no problem. But the AI's itinerary sent us through the Andes mountains, over rocky and sandy roads, in pitch darkness, without internet, and with nobody else around. We couldn't even see the moon.

No tourist in their right mind takes a mountain road trip after sunset with no guide, no connectivity, no moonlight, and no experience. Doable? Sure. Wise? Absolutely not. As I wrestled our SUV through slippery sand, with a perilous decline beside us, the continuous anxiety of getting stuck ranked among our vacation's least enjoyable moments.

Four hours into this hair-raising drive, we hit a construction roadblock. Two steamrollers completely blocked the road. 😱

Surveying the scene at 9 PM, with only the stars as our witnesses, one thought consumed me: "Oh, my God. We're stuck for the night."

How We Got Advice Before

Agile coaches and business consultants should pay attention to how the travel industry was disrupted.

Twenty-plus years ago, we paid travel agents—let's call them consultants—to plan our vacational transformations through Brazil, Cuba, and Chile. They handed us brochures (think white papers by travel experts) and booked hotels, train tickets, and guided tours months in advance. We browsed travel blogs and stories (basically case studies) to prepare. We even attended holiday fairs—imagine conferences packed with coaches and consultants—where agents and vendors displayed their many options.

Once traveling, we carried Lonely Planet or Rough Guides (books stuffed with expert advice) that we consulted dutifully for tips on hotels, restaurants, cafés, sights, and activities. We also lugged geographical maps, translation dictionaries, and other paper tools (the travel equivalent of planning poker cards, sticky notes, and business model canvases) to navigate unfamiliar environments. For questions, we had to rely on locals through elaborate hand gestures and confused faces.

Like memories of earlier vacations, this antiquated travel approach has vanished completely.

These days, when we explore Canada, Japan, the Caribbean, or—like this year—Peru, everything has changed.

For high-level, timeless advice like cultural expectations and optimal travel seasons, we might consult a travel book. Influencer vlogs and travel stories occasionally provide useful destination overviews. But for concrete, context-dependent advice, online tools win every time.

We use Booking for accommodations, comparing pricing, locations, and reviews. Google Maps finds the best-rated cafés and restaurants. For attractions and activities, we query ChatGPT, Perplexity, or TripAdvisor. Our questions about history, culture, nature, and geography go directly to the AIs. And GetYourGuide and Withlocals handle guided tours and tickets whenever we want. (I won't mention TikTok or Instagram because we're ancient, but I hear that younger travelers mine these platforms for instant tips and trends. I satisfy my caffeine needs with Google.)

Then there are the digital conveniences that didn't exist twenty years ago. Airalo's virtual SIM cards provide cheap internet access anywhere (except in the Andes Mountains, apparently). WhatsApp connects us directly with hotels, hosts, guides, and ticket offices. And Google Translate enables us to talk with virtually anyone.

Communication barriers have evaporated, along with traditional travel agents.

The Future of Coaching and Consultancy

The consultancy industry is following the travel agent playbook, whether it likes it or not.

Future organizations won't need travel agents (consultants), brochures (white papers), travel stories (case studies), or travel fairs (conferences). For concrete, contextual, and time-sensitive advice, online tools are faster and easier. Business transformation now demands a level of speed and adaptability that's impossible to achieve with generic, outdated knowledge trapped in consultants' heads.

Why rely on one travel agent's stale advice on accommodation, food, and events in Lima when ChatGPT, Perplexity, Booking, Google Maps, and TripAdvisor live on your phone 24/7? Business consultancy faces the same disruption. As AIs acquire more context about companies, people, and environments, they'll deliver concrete, accurate, real-time advice that no human coach or consultant can match.

Knowledge is now a commodity.

I will make myself available in 2026 for advisory positions at a handful of startups and scaleups. Let me know if you're interested. I will spend most of my time blending timeless (human) wisdom with contextual (machine) advice. If that's what your business needs, I'm here for you.

Soon, each company will deploy a legion of digital consultants for employees' every need. Much cheaper, too. And without the method wars and thought leader drama.

Contextual Tips Versus Timeless Advice

Does this doom consultancy entirely? Not quite. But the industry faces severe disruption.

Digital technologies transformed travel agents by shifting bookings online and reducing the market share of traditional agencies. Online platforms offer convenience and price transparency, forcing agents to adapt with digital tools and specialize in luxury, corporate, or complex travel. A Barbell Effect emerges: large online platforms dominate mass-market bookings, while niche agents survive through high-touch, personalized services. Generalist traditional agencies decline, caught in the middle. The industry polarizes between digital giants and specialized experts, with successful businesses embracing technology and focusing on high-value niche offerings in a competitive, evolving landscape.

During our Peru trip, we still hired local experts: guides for tours around Machu Picchu, Lima, Arequipa, and Lake Titicaca. Sure, we could have wandered around with ChatGPT whispering in our earbuds. But having a knowledgeable local human show you around wonderful, unfamiliar environments is irreplaceable. No LLM can enhance a travel experience with personal, improvised anecdotes about Peruvian life.

Agile coaching and business consultancy will follow the same pattern.

Organizations worldwide are embracing cheaper online platforms for personalized, contextual digital transformation tips: high-volume advice at dramatically lower costs. Machines will increasingly guide workers through continuous change, offering highly specific patterns, practices, tips, and tricks that make sense in the moment, tailored to personal preferences and context in ways no agile framework, method, coach, or consultant ever could. Each worker gets their personal ChatGPT + Google Maps + TripAdvisor for business environments.

But humans remain essential. Some coaches and consultants will survive by providing high-touch, personalized (and high-margin) services for organizations seeking timeless advice on top of the tsunami of contextual tips and practices from tools.

Someone must tell them when to use which tools. And someone must tell them when to ignore them entirely.

The Barbell Effect applies here too. On one end, AI will handle the bulk of contextual, tactical advice—the equivalent of "best restaurants near me" or "how to run this retrospective." Cheap, instant, personalized. On the other end, human experts will command premium prices for strategic wisdom—the equivalent of "Should you even have this retrospective?" and "What does success actually look like for your business?"

The middle ground—where most consultants currently live—is disappearing fast. Generic frameworks, templated solutions, and one-size-fits-all methodologies are becoming commoditized by AI. The consultants who survive will be those who can either specialize in high-stakes, high-touch strategic guidance or find ways to enhance and orchestrate AI-driven solutions rather than compete with them.

The travel industry's transformation offers a blueprint. Successful travel agents didn't fight the digital revolution—they rode it. They identified what humans do better than algorithms (relationship-building, complex problem-solving, emotional intelligence, ethical judgment) and doubled down on those capabilities.

Smart consultants will do the same. They'll become curators of AI-generated advice, helping organizations navigate the overwhelming flood of contextual recommendations. They'll focus on the meta-questions: which problems are worth solving, what trade-offs matter most, and how to design the organization for continuous adaptation.

When there's nobody around to make wise decisions, a successful business might end up as a historical footnote.

As I tried hard not to freak out, mentally calculating the amount of water we'd have to share throughout the night, my husband accidentally spotted a somewhat hidden "detour" sign with his phone's flashlight on the road's shoulder. There was a way out!

We finally continued our Andes escape through a maze of temporary roads and construction zones. We still lacked internet, and Google Maps was useless, showing our position on a grid of roads that was possibly planned but entirely nonexistent. But we made it.

Pure luck and intuition (with minimal wisdom) extracted us from our predicament. We reached our hotel in Camana at 11 PM, dinnerless but educated. Road 109 remains etched in our memories forever.

Our tool reliance nearly stranded us in Peru's mountains. Someone should have warned us against driving mountain roads after sunset with no guide, connectivity, moonlight, or experience. We could have better examined the terrain Google Maps was suggesting to us rather than mindlessly following its recommendation. We could have informed ourselves about the wisest choice among our options. We might even have paid for that guidance.

The future belongs to coaches and consultants who can tell organizations when to trust their tools and when to ignore them completely. Sometimes the wisest route isn't the fastest one, and sometimes almost getting lost teaches you more than any perfectly planned journey ever could.

Just ask anyone who's driven Peru's Road 109 in the dark.

November 4, 2025

The Four Tensions of Sociotechnical Systems

Is your team burning sprints on coordination theater while the actual work is not getting done? Is management swinging between micromanagement fascism and leadership anarchy? Is your organization building a surveillance architecture or flying blind through market changes? And do your company's shared values feel like a warm group hug or more like a corporate straitjacket?

Welcome to the eternal balancing acts that determine whether your sociotechnical system thrives or dies.

Note: I'm writing this from poolside at my hotel in Arequipa, Peru, day six of what should be a blissful vacation. Any rational person would ignore work completely and focus on their pisco sours. But when you stumble onto a mental model that cuts through decades of political and organizational nonsense, rationality takes a backseat to intellectual excitement. I just need to share this while sipping my chicha morada.

The Simplest Tension: Left vs. Right

Relax. This is not a political commentary disguised as management theory.

But to understand why most organizations are trapped in simplistic thinking about systemic tensions, we need to acknowledge how badly we've been indoctrinated by political discourse. My country, the Netherlands, just survived another election cycle (with results that made international headlines—more on that another time). And the stories in the media were as simplistic as ever.

The media loves its binary narratives:

Left = collective welfare, equality, coordination. When it goes wrong: anarchist chaos or communist delusions.

Right = individual freedom, self-reliance, autonomy. When it derails: authoritarian nightmares or fascist fantasies.

Peru offers an interesting example. Over the last twenty years, the country has ping-ponged through governments, mostly center-right to right-wing, with brief leftist experiments like Pedro Castillo's spectacular 2021-2022 flameout. Alan García and Ollanta Humala started left but governed center. The pattern is that traditional institutions stay conservative, the left can't hold power, and the political landscape fragments into right-leaning chaos. At least, this is what the AIs have told me. They know more than I do.

The traditional left-right framework is the stone axe of political analysis. It's a one-dimensional tug-of-war that might have worked when societies were simpler, but applying it to modern sociotechnical systems is like measuring the finish times of Olympic athletes with a sundial.

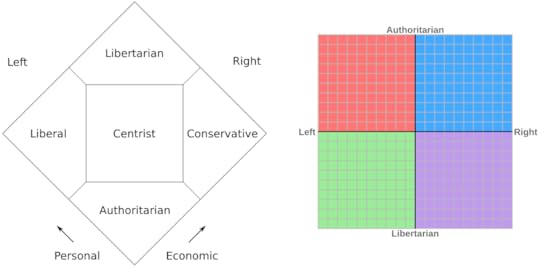

The Political Compass

American activist David Nolan ripped the traditional model apart in 1969. His alternative chart revolutionized political mapping by adding a second dimension that separates economic freedom from personal freedom. Later, this spawned models like the Political Compass, which plots ideologies across economic and social axes, capturing the nuances of authoritarianism and libertarianism that linear scales completely miss.

The two axes changed everything:

Economic (Left–Right): market freedom versus state intervention

Social (Authoritarian–Libertarian): personal freedom versus social control

Suddenly, the world gained depth. You could be economically conservative but socially liberal. Or economically progressive but socially authoritarian. The binary became a matrix—four quadrants instead of two camps.

But for organization designers like me, there's a problem.

These frameworks are soaked in political baggage. Drop terms like "liberal," "socialist," "libertarian," or "conservative" into any conversation and watch people's brains shut down as tribal reflexes take over and the excrement hits the ventilator. Decades of ideological warfare have weaponized these words beyond any analytical usefulness.

Since I'm not writing political propaganda, I needed something cleaner.

The Sociotechnical Compass

Working with ChatGPT, I stripped away the political theater and reframed the axes for sociotechnical systems: teams, businesses, organizations, and other technology-enabled human networks that actually get stuff done. Following the POSIWID principle (the Purpose Of a System Is What It Does), my "Sociotechnical Compass" describes not ideology (what people believe) but systemic behaviors (what people actually do).

X-axis: Individualist ↔️ Collectivist

The eternal struggle between autonomy (local adaptation, individual agency) and coordination (shared standards, collective action). How much can group members freelance versus how much must they conform to group expectations around communication, coordination, and collaboration?

Y-axis: Experimental ↔️ Controlled

The balance between experimentation and stability, between spontaneity and discipline. How much can group members improvise versus how much will they be constrained by rules, policies, standard operating procedures, and "knowing their place in the system"?

This de-politicized compass describes how teams and organizations actually behave, using language that won't trigger anyone's ideological immune system. Nobody wants to be labeled a socialist or authoritarian just because they think a bit of coordination or governance might help their organization survive in a chaotic world.

Do you like this post? Please consider supporting me by becoming a paid subscriber. It’s just one coffee per month. That will keep me going while you can keep reading! PLUS, you get my latest book Human Robot Agent FOR FREE! Subscribe now.

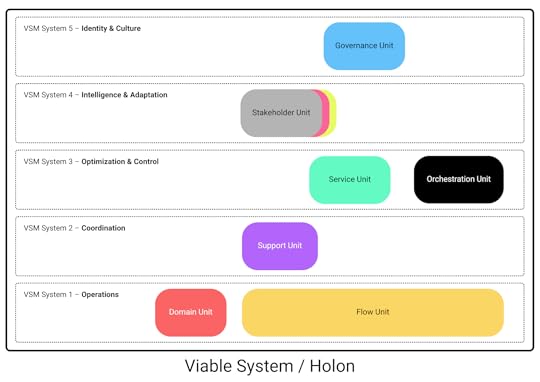

The Viable System Model (VSM)

While discussing my Sociotechnical Compass with ChatGPT, I had one of those moments that almost made me drop my cup of queso helado.

Without realizing it, I had recreated the foundational structure of Stafford Beer's Viable System Model (VSM)—the cybernetic blueprint of any living, self-regulating system.

The VSM describes five levels that all teams and organizations must implement to remain viable:

Operations - Do the actual work. (The part of the organization that creates actual value.)

Coordination - Prevent conflicts between units. (Manages interdependencies and shared resources.)

Optimization & Control - Allocate resources & enforce rules. (Ensures coherence, efficiency, and accountability.)

Intelligence & Adaptation - Scan the environment & adapt. (Anticipates change, learns, and innovates.)

Identity & Culture - Define purpose & identity. (Provides long-term direction, meaning, and belonging.)

(For a larger discussion of the VSM, check out: "Let's Start from Scratch: Organizational Design in the Age of AI.")

In essence:

Level 1 is pure operational anarchy—work getting done without interference.

Levels 2-5 are the constraints that keep the system viable through collaboration, governance, adaptation, and purpose.

Four Kinds of Delegation

Let's talk about viable teams without the management consulting mysticism.

In a perfect world, teams would spend 100% of their time creating value for stakeholders. Pure operations, zero waste, maximum output and impact. But teams learned long ago that pure operational focus is a fast track to extinction. They won't remain viable systems for long.

First, teams figure out quickly they need coordination. Someone has to agree on what work gets done, why, who does it, and when. This shifts capacity from VSM Level 1 (operations) to Level 2 (coordination).

For example, I'm traveling Peru with my extended family. Most of the time, we do whatever we want—reading, writing, shopping. But periodically, we coordinate: which tours to take, where to eat, time of breakfast, and who gets to walk around with the credit cards. That's classic Level 2 coordination work.

Second, larger teams realize that not everyone behaves optimally all the time. Humans are fallible and need occasional nudging to follow the rules. And thus, social systems tend to allocate capacity to VSM Level 3: optimization & control. Someone manages resources, provides servant leadership, or enforces command-and-control.

For example, Lima traffic is an adrenaline rush that demonstrates what happens when a sociotechnical system allocates almost zero resources to Level 3 optimization & control. It's rather exhilarating and very educational.

Third, smart teams understand that environmental blindness kills systems. They need awareness of trends, changes, and whatever else might impact customer needs tomorrow. They delegate time to VSM Level 4: intelligence & adaptation, continuously inspecting and adapting to the environment. (Agile, anyone?)

Fourth, successful teams recognize the power of cohesion—shared purpose, values, identity. Systems with strong collective identity outlast fragmented ones. They invest in VSM Level 5: identity & culture.

For the record, the exact delegation mechanism doesn't matter. Everyone might spend 5-10% of their time on coordination, or one person gets dedicated control responsibilities to do this kind of work. Implementation varies. Nobody cares. What matters is that the system allocates capacity across all VSM levels in the way it sees fit.

Four Tensions Between Chaos and Order

After realizing I'd accidentally recreated the VSM's foundational structure (or at least the first three levels of it), I understood what this model actually describes: the multiple balancing acts necessary in every sociotechnical system.

Each level above the operational level adds a different type of constraint—necessary tension that keeps the system alive. No constraints equals anarchy. And anarchy, despite libertarian fantasies, rarely works. Even the most ardent freedom advocates grudgingly admit that some rules need to exist.

My extended Sociotechnical Compass now identifies four distinct balancing acts between Level 1 (operations) and its regulating levels:

Autonomy vs. Coordination (Individualist ↔️ Collectivist)

Balancing part freedom with whole needs: Level 1 vs. Level 2

Spontaneity vs. Discipline (Experimental ↔️ Controlled)

Balancing improvisation with governance: Level 1 vs. Level 3

Performance vs. Agility (Optimized ↔️ Adapted)

Balancing excellence with adaptation: Level 1 vs. Level 4

Self-actualization vs. Resilience (Flexible ↔️ Coherent)

Balancing individual growth with shared purpose: Level 1 vs. Level 5

(Sorry, I have no time to draw a pretty picture of this model. I'm trying to enjoy Peru.)

Each dimension represents a different conversation between operations and its meta-levels:

Level 1 says: “You create value.”

Level 2 says: “You work together.”

Level 3 says: “You play by the rules.”

Level 4 says: “You adapt and evolve.”

Level 5 says: “You share one purpose.”

These same four balancing acts play out in every society: How much do we allow collectivism to override individualism? How much authoritarianism should constrain spontaneity? To what extent can surveillance trump privacy? And how much collective purpose may supersede individual self-actualization? Sadly, media commentators reduce this rich, multidimensional reality to the braindead narrative of "left versus right" because complex narratives neither attract eyeballs nor sell advertisements.

The Moral of This Framework

Sociotechnical system viability, whether in teams, organizations, or societies, isn't a destination. It's a perpetual dance between anarchy and control, between chaos and order.

Economists claim that a "healthy" percentage for government expenditure as a share of GDP commonly falls in the range of 30–40% in developed nations, with many economic studies suggesting that the optimum for supporting sustainable economic growth is often below 40% and ideally closer to 25–35%. Likewise, healthy teams should probably dedicate 25-40% of their total capacity to coordination, control, intelligence/adaptation and identity/culture work (VSM levels 2-5). Everything else should be pure operations and value creation (VSM level 1). The specific allocation is context-dependent and subject to ongoing social, political, and even philosophical negotiation.
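As a rough illustration, the 25-40% guideline above can be turned into a quick capacity check. This is only a minimal Python sketch: the function names, the level labels used as dictionary keys, and the weekly hours are all invented for the example, and treating 25% and 40% as hard thresholds is an assumption, since the text itself says the right allocation depends on context.

```python
# VSM levels 2-5 are treated as "regulating" work; level 1 is operations.
# The key names below are hypothetical labels chosen for this sketch.
REGULATING_LEVELS = ("coordination", "control", "intelligence", "identity")


def regulating_share(hours: dict[str, float]) -> float:
    """Return the fraction of total capacity spent on VSM levels 2-5."""
    total = sum(hours.values())
    if total <= 0:
        raise ValueError("total capacity must be positive")
    regulating = sum(hours.get(level, 0.0) for level in REGULATING_LEVELS)
    return regulating / total


def within_guideline(hours: dict[str, float],
                     low: float = 0.25, high: float = 0.40) -> bool:
    """Check the share against the 25-40% band (hard thresholds are assumed)."""
    return low <= regulating_share(hours) <= high


# Invented weekly hours for a small team, purely for illustration.
team_week = {
    "operations": 140.0,    # Level 1
    "coordination": 24.0,   # Level 2
    "control": 16.0,        # Level 3
    "intelligence": 12.0,   # Level 4
    "identity": 8.0,        # Level 5
}

share = regulating_share(team_week)
print(f"{share:.0%} of capacity goes to levels 2-5")
print("within 25-40% guideline:", within_guideline(team_week))
```

With these made-up numbers, 60 of 200 hours go to levels 2-5, so the team sits inside the band. A team that logs only operations hours would fall below it, which is the "pure operations" case described earlier.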

Over coffee yesterday, our tourist guide in Arequipa shared stories about daily life challenges for most Peruvians. While my family enjoys incredible sights, foods, and experiences, we're acutely aware of our privilege. Most Peruvians can't afford these luxuries. Peru's Sociotechnical Compass has unresolved tensions across all four dimensions, and we hope the people find their path through these inevitable balancing acts.

There is no simple left or right in politics and there is definitely no left or right in organizational design. There are only systems that must balance four essential tensions to remain viable. The organizations and societies that master this dance survive. The ones that don't become historical lessons for business professionals and inspiring stories for spoiled tourists. The face of the Earth is littered with countless examples.

We'll arrive at Machu Picchu tomorrow.

Jurgen

P.S. You can follow my trips on Polarsteps.

October 28, 2025

My 18-Month Battle With Expert Hallucinations

After 18 months of failed treatments and contradictory advice, I discovered something shocking: expert hallucination isn't limited to artificial intelligence. Sometimes, human experts are even worse at seeing what isn't there.

For three years, I was a running machine. 2,500 kilometers per year, 50 kilometers per week … rain or shine. Consistent as the Swiss train schedule.

Then, by the end of 2023, my body staged a coup.

A deep, nasty pain took up residence in the top of my left leg, high in the buttock region. The cause seemed blindingly obvious: I'd finally overdone it. My hamstring was giving up.

ChatGPT agreed. "Hamstring tendonitis," it declared with its usual confidence. Inflamed tendon. Classic overuse injury. Time to rest, buddy.

So I rested. And rested. And rested some more.

What followed was twelve months of the most maddening Groundhog Day imaginable. I'd take a break for months, feeling the pain fade like a bad memory. And then, believing I'd sufficiently healed, I'd lace up my running shoes and within five kilometers, my hamstring would remind me exactly why optimism is for suckers.

Back to the AI oracle I went. ChatGPT, Claude, whoever would listen—they all sang the same gospel: "hamstring tendonitis." Poor blood flow to tendons, they explained. Healing takes time. More rest. Always more rest.

I'd had this exact problem eight years earlier with the other leg. The advice of the sports doctor back then was: rest a few months and do some exercises to strengthen the weak muscles. The pattern was clear, the solution obvious, the outcome inevitable: another couple of months of doing absolutely nothing while my fitness evaporated like morning dew in the Caribbean.

By early 2025, after a full year of this medical Möbius strip, I finally cracked and consulted an actual human, expecting to hear the same mantra I'd heard eight years before. But this time, I consulted a certified physiotherapist specializing in manual therapy.

After examining me and painfully prodding my posterior until I questioned both his qualifications and his humanity, he delivered a diagnosis that would have made Freud proud: there was nothing wrong with my body. The problem, he announced, was in my head.

If I could run 2,500 kilometers per year, he argued, why would my body suddenly throw a tantrum over a few measly kilometers? He started asking the important questions: What happened in 2023? Any stress? Work problems? Relationship drama? Uncertainty about the future? Was I perhaps subconsciously projecting anxiety onto my glutes?

I'll give him points for creativity, but the whole thing reeked of hammer-and-nail syndrome. My friends call me a "mental flatliner"—so emotionally stable you could calibrate seismographs off my mood swings. More importantly, his psychosomatic theory couldn't explain why eight-hour flights and twenty-hour car rides turned my left buttock into a full-time complaints department.

That's when I realized his tell. Sure enough, part of his credentials was the fact that he wrote an entire book about psychosomatic injuries. Every client walking through his door was apparently a potential case study for his pet theory. When your favorite tool is a psychological hammer, every client's aching body part looks like a repressed emotion.

I cancelled my remaining appointments.

So there I was, trapped between two brands of bullshit. The AIs insisted on rest while my tendon stubbornly refused to heal. The human expert wanted to psychoanalyze my butt. Neither approach was working, and I was getting tired of hobbling around with a sore arse.

It was time for some good old-fashioned rebellion against expert opinion.

What if we all had it backwards, I thought? What if, instead of starving my injury of activity, I fed it just enough to wake it up? Blood flow problems? Maybe some light running could help circulation. Instead of complete rest, what if I stayed active just enough to help my body to heal, but not enough to make things worse?

I started small. Tiny runs—2-3 kilometers, a few times per week. I was aiming for the Goldilocks zone: just enough stimulus to nudge the healing process without triggering another cycle of pain and frustration. The approach felt like walking a tightrope while juggling, but I was done trusting the certainties of experts (human and AI alike) about my body.

Six months later, the injury has practically vanished. I'm back to 25 kilometers per week and can run over ten kilometers without my hamstring filing a complaint. The pain that haunted me for a year and a half has disappeared like a politician's promises after election day.

Curious about what had actually worked, I fed the entire saga to Gemini for a post-mortem analysis. The answer was both illuminating and infuriating.

I'd never had "tendonitis" in the first place, Gemini said. Neither the AIs nor the human expert had bothered to consider the obvious alternative: proximal hamstring tendinopathy (PHT)—a condition involving degeneration and failed healing in the tendon, not inflammation.

The distinction matters. Inflammation responds to rest. Degeneration requires the opposite. My pain from sitting was just my body weight compressing the damaged tendon against my sit-bone, not some mysterious psychosomatic manifestation of workplace stress.

The earlier AIs' advice to "rest" had created a self-reinforcing cycle of weakness. Every break kept my tendon weak and fragile, turning each return to running into a guaranteed re-injury. I was caught in a "rest-weaken-re-injure" loop that could have continued indefinitely if I'd kept following that logic.

The human expert, meanwhile, was so busy looking for psychological nails that he'd missed the mechanical hammer entirely. One-tool experts are dangerous precisely because they're so confident in their singular domain. They don't see problems—they see confirmation of their worldview.

My "Goldilocks" rebellion wasn't just lucky guesswork. I'd accidentally stumbled onto the gold-standard, evidence-based treatment for tendinopathy: progressive loading. Those modest 2-3 kilometer runs weren't just maintaining fitness. They were applying mechanical load that signaled my tendon cells to rebuild stronger and more organized tissue. I'd ignored both artificial and human intelligence to accidentally discover the correct treatment through pure stubbornness and a refusal to accept expert consensus.

But the real lesson isn't about running injuries or hamstring tendons. It's about the dangerous mythology we've built around expertise in the age of AI.

We've created a false dichotomy: either trust the machine or trust the human. But both can be spectacularly wrong, often for similar reasons. AIs are pattern-matching machines trained on existing knowledge, which means they'll confidently regurgitate conventional wisdom even when it's outdated, incomplete, or simply mis-categorized. Human experts, meanwhile, are walking bundles of cognitive bias who see their specialty everywhere they look.

The AIs failed because they were trained on medical literature that treats tendonitis and tendinopathy as similar things, despite research showing they're different conditions requiring opposite treatments. They gave me the statistically most likely diagnosis based on a mix-up of categories.

The human expert failed because he'd found his intellectual home in psychosomatic medicine and wasn't about to let a simple mechanical injury ruin his favorite narrative. When you've written a book about minds creating body problems, every body problem looks like evidence of a mental cry for help.

Both human and AI failed because they were too confident in their knowledge and too incurious about alternative explanations. The AIs couldn't think beyond their training data. The human couldn't think beyond his pet theory. Meanwhile, the solution required neither artificial intelligence nor human expertise—just careful attention to what was actually happening, a willingness to experiment, and enough intellectual stubbornness to question received wisdom.

This pattern repeats everywhere. In business, we see the same dynamic between AI-powered analytics and human "domain experts." The algorithms confidently extrapolate from historical patterns, while the humans confidently apply their favorite frameworks. Both miss the messy reality that doesn't fit their thinking models.

The important skill in the future of work isn't choosing between human and artificial intelligence. It's knowing when to ignore both. Sometimes the smartest move is to tune out the experts, human and machine alike, pay attention to what's actually happening, and run your own experiments. Expert failure of this kind has the curse of knowledge written all over it. Sometimes an amateur with fresh eyes accidentally discovers what the experts' training prevents them from noticing.

Don't get me wrong: I'm not advocating for wholesale rejection of expertise. When you're building a bridge or performing surgery, you want people who've spent decades learning their craft. But when you're dealing with complex, sociotechnical problems that don't fit neat categories, expertise can be a trap.

My hamstring is now happily carrying me through 25-kilometer weeks, but the real victory was learning to trust my own observations and reasoning over pet theories. Sometimes the best expertise is knowing when to ignore the experts. The future belongs to those who can navigate between overconfident AI and overconfident humans, using both as sources of insight while trusting neither as oracles. Sometimes the wise choice is to ignore the wise and trust your own stubborn curiosity.

October 24, 2025

You Cannot Please Everyone

The only good reason for writing posts complaining about other people's usage of AI is when your audience consists of snobbish, patronizing fools.

I recently watched an animated TV show with my ten-year-old friend. It was horrible. The animations were cheap, the voice acting was cringy, and the plot was nonexistent. But guess what? None of that mattered because the show was all about tanks. My ten-year-old buddy loves tanks. And when a TV show has a tank in every animated scene, it's a f**king great show.

Here are a few more tanks:

Now, I could go online trashing this TV series for the horrendous quality of its animations. But what would be the point? I'm not their target audience. I'm not a ten-year-old obsessed with tanks. If I slam the producers for their crappy product, I only expose myself as a snobbish, patronizing fool.

This morning, my hubby made a similar observation about all-you-can-eat buffets. We despise everything about them: the industrial-grade slop, the heat lamps slowly destroying what might have once been food, the whole depressing spectacle. But our disgust is completely irrelevant because we don't eat there. Millions of people apparently enjoy these nutritional wastelands, and good for them. I'm not joining their feast, but I'm also not wasting energy condemning their choices.

In the age of artificial intelligence and robotics, it's crucial to remind ourselves: only our target audience counts. Nobody else matters. We cannot please everyone. It's the same for any other product we make.

I vividly remember my first critical book reviewer. I was about to publish my first book, Management 3.0, and my publisher, Addison-Wesley, had assigned a few reviewers to evaluate my manuscript. Most reviewers loved it. One person hated it. The hater was a person with an academic background who rated the many jokes in my book as "highly unprofessional." But the publisher said it didn't matter. "He's not your target audience." Fifteen years later, the book has sold 70,000 copies and is regarded by many as a classic. It just hasn't made any waves in academia, and that's perfectly fine.

The AI writing hysteria follows the same pattern, except with more sanctimony and less self-awareness.

Every week, some writer publishes another hand-wringing essay about AI "polluting" the literary landscape. They're horrified that ChatGPT might be ghostwriting someone's newsletter, or that Claude helped craft a blog post. They speak of authenticity and craftsmanship as if they're defending the last monastery from barbarian hordes.

But unless they're consuming that AI-assisted content, their outrage is performative nonsense.

Only your target reader decides what's brilliant. Nobody else matters. You cannot please everyone.

More tanks:

Maybe your readers demand prose crafted with the delicacy of a Swiss watchmaker. If so, stay far away from AI. Your audience might sniff out synthetic sentences faster than a bloodhound. Or maybe your readers just want information delivered efficiently. In that case, milk every AI tool available because speed and volume matter more than artisanal sentence structure.

In my case? I write for people who like challenging, controversial viewpoints presented to them as an enjoyable read. That means I take the middle road: each post (including this one) starts as a fully hand-written draft because it's important that the core message comes from me. Then, I might turn to ChatGPT, Gemini, and Claude for help with additional research, feedback on structure, an occasional sentence-level rewrite, and feedback on style. I even have Claude checking my posts against my ethical values. But every post ends with my final polish. I want every essay to be in my tone of voice. The average long-form post on Substack costs me about eight hours of work. Without AI, it would probably be more like sixteen. I consider that a win because I can offer my readers twice as much of what they like.

And only the reader decides if I did a decent job offering them food for thought as an enjoyable read. Nobody else. I cannot please everyone.

Don't misunderstand me; it does make sense to evaluate and discuss the ethical side of production processes of any kind, including writing. In the case of animated TV shows and food production, we might discuss worker rights or animal welfare. With AI used in writing, we should discuss the sustainability, copyright, and employability problems that are part of the AI revolution. All of that is fair game for debate.

But not the pointless whining about machines and algorithms.

It makes little sense for people to complain about other writers using AI in their writing. If they're not the target audience, their opinion of the quality of the writing is irrelevant. Judging the creative process behind something they're not even consuming makes them look like snobbish, patronizing fools. The only good reason for writing posts lamenting other people's AI usage is when their own audience consists of snobbish, patronizing authors. They will happily lap it up.

Don't be like them. Go ahead and use AI in your writing when it serves your audience. If all your readers want is ridiculous stories about tanks, more stories about tanks is good. Endless stories about tanks is even better. Grammar, style, and plot are all irrelevant as long as every paragraph has at least one reference to a tank.

I've sold 200,000 books across multiple publishers and worked with more developmental editors and copy editors than I can count. Every professional I've encountered agrees on this fundamental principle: your target user is the only judge that matters. Everyone else is irrelevant background chatter.

As a creative (or any kind of product designer, for that matter), remember that you cannot please everyone.

Jurgen

P.S. Here are some more tanks:

October 20, 2025

The Stochastic Parrot vs. the Sophistic Monkey: I Trust AI More Than Most Humans

I trust AI more than most humans. That's not because the average machine is so brilliant. It's because the average human is so stupid.

Many humans—bless their hearts—are freaking out about "AI hallucinations." As if humans don't confidently hallucinate entire religions, belief systems, conspiracy theories, and process improvement frameworks every single day. Compared to the average human's cocktail of bias, ignorance, and misplaced confidence, these AIs are practically philosophers.

Asking the Machines

Let me give you an example.

The other night, I had a cosmology question—one of those "wait, how does this make sense?" moments that Stephen Hawking probably would have rolled his eyes at. I've been diving into books such as A Brief History of Time, The Fabric of the Cosmos, and The Inflationary Universe—you know, light bedtime reading—and I stumbled over a paradox:

If, according to Einstein, all motion is relative, then how the hell can scientists talk about the absolute velocity of Earth, our solar system, or even our galaxy when measured against the radiation of the cosmic microwave background (CMB)?

Now, if I had thrown that question into my local neighborhood group chat or onto Facebook or TikTok, the odds of getting a coherent answer would have been roughly the same as finding intelligent life on Mars. My friends? Predictably useless. My family? Adorably clueless. And as for the likes of scientists such as Brian Cox or Neil deGrasse Tyson, they're tragically unavailable for clearing up my late-night cosmological quandaries.

So, I did what any 21st-century human does when they seek knowledge without judgment: I consulted an LLM. And it must be said, Gemini delivered the answer I craved: simple, clear, and with neither a confused chuckle nor a condescending sigh. I won't bore you with the physics, but let's just say I found the explanation accurate and elegant.

That's become the pattern lately. Whether it's cosmology, philosophy, complexity, or just the dread of discovering a suspicious-looking spot on my shoulder, the answers I get from AIs are consistently better (or maybe I should say less wrong) than whatever I'd get from the average human in my social circle.

Bias and Hallucinations in AIs

"The answers I get from AIs are consistently less wrong than whatever I'd get from the average human in my social circle."

On the social networks, whenever I talk about how useful AI can be, the usual suspects show up: the technophobes, the digital doomsayers, the self-appointed prophets of "responsible AI." They clutch their pearls and remind me, in the same tone people use to warn everyone about social media rotting our brains and computer games ruining teenagers, that "the machines are biased" and "they hallucinate," followed by the inevitable: "They're just stochastic parrots!" As if that phrase alone grants them a PhD in smugness.

Sure, they're right, technically speaking. Large Language Models are biased, fallible, and overconfident. I've often said LLMs are like politicians: they always have an answer, they crave everyone's approval, and they can be spectacularly wrong with stunning confidence. The average political leader is practically a walking AI, minus the energy-guzzling data center.

"LLMs are like politicians: they always have an answer, they crave everyone's approval, and they can be spectacularly wrong with stunning confidence."

That's exactly why we keep raising the bar for the machines. Every benchmark, safety layer, and alignment model is our way of teaching them a shred of epistemic humility. We audit their biases, fine-tune their judgment, and retrain them endlessly. Unlike the architecture of the human brain, AIs are continuously improved. Their flaws aren't natural failings—they're engineering challenges. And we're getting better at solving them every single day.

I wish we did the same in politics.

Bias and Hallucinations in Humans?

While we're busy auditing and benchmarking algorithms for micro-biases and hallucination rates, nobody is willing to raise the intellectual bar for humans to a similar level of coherence.

"Nobody is willing to raise the intellectual bar for humans to a similar level of coherence."

We seem perfectly fine letting our un-updatable human brains run wild in our social echo chambers. We nod along to friends, family, and followers who still think "doing my own research" means watching two YouTube videos and a handful of funny memes.

We hang onto every word of politicians and social media influencers who couldn't explain the difference between gender and biological sex if you offered them a coupon for a lifetime of free marketing campaigns; who think import tariffs are a punishing fee for foreign governments rather than a tax on their own fellow citizens; and who genuinely believe that immigration, not incompetence, is the root cause of all national misery.

Stupidity is an understatement.

If I ever want to experience real, high-octane nonsense, I just open the news to see what the humans are talking about. There I'll find elected officials proudly denying climate science, mangling economic laws and principles, and sprinkling conspiracy theories like parmesan on a pile of policy spaghetti. Here in the Netherlands, we're in election season again, because the last government was so hopelessly incompetent it couldn't even agree on which brand of bullshit to peddle to their voters.

And if that's not enough, there's always the digital carnival we attend every day: Facebook groups, TikTok videos, and Telegram channels where people pass around misinformation like a bowl of free M&M's—colorful, addictive, and most definitely bad for our health.

So yes, AIs hallucinate. But for Olympic-level delusion and disinformation, we don't need machines. We've already got plenty of humans debating each other on talk shows and at dinner tables.

Social Media Debates

"For Olympic-level delusion and disinformation, we don't need machines. We've already got plenty of humans debating each other on talk shows and at dinner tables."

And on social media, of course.

Every time I post something positive about AI, the comments section quickly fills up with people auditioning for a Logical Fallacies 101 textbook.

First comes the Straw man fallacy: "So you just do whatever the AI tells you?" No. I still have my own brain and a healthy dose of critical thinking. I do whatever makes the most sense to me.

Then the Slippery slope crowd warns me that asking Gemini a question about cosmology could somehow end with robots ruling the planet. That's a bit like saying two chocolates a day will make you obese.

Next up, the Appeal to nature—"But humans are creative, empathetic and sensitive!" Indeed, they are. They also eat and poop, but I don't see exactly how that relates to their reasoning capabilities.

And, of course, the False equivalence: "AIs and humans both make mistakes, so they're not much different from each other." Right. Like a computer and a laundromat are equally good at calculations because both can equally suffer from power failures.

Finally, when people's arguments fail to impress me, there's always the inevitable Ad hominem: "You just hate people." Well, not everyone. But I certainly dislike those who make other people's lives miserable by peddling their bullshit.

Most of these social media "debates" aren't about reason at all. They're about ego. People aren't defending logic; they're defending the fragile belief that machines cannot replace them.

But what hope does the average human have when AIs already beat them at basic reasoning?

The Art of Selection

Speaking of biases…

Some people have accused me of Selection bias: "You're comparing the average AI to the average human," they say, "when you should compare it to genuine experts."