Brian Potter's Blog

November 22, 2025

Reading List 11/22/25

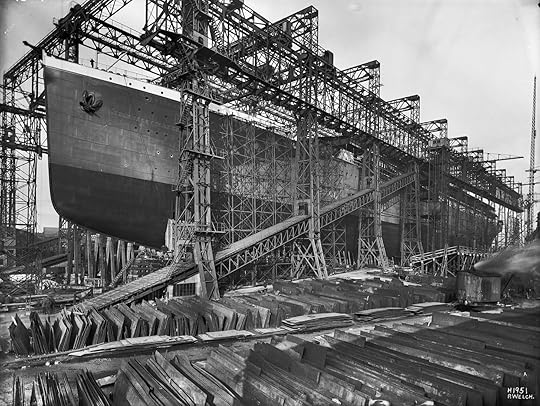

USS George HW Bush under construction at Newport News shipyard.

Welcome to the reading list, a weekly roundup of news and links related to buildings, infrastructure, and industrial technology. This week we look at the ship failure that caused the Francis Scott Key Bridge collapse, the boring part of Bell Labs, a more efficient way of making antimatter, underground nuclear reactors, and more. Roughly two-thirds of the reading list is paywalled, so for full access become a paid subscriber.

Francis Scott Key Bridge Collapse

I normally think of extreme sensitivity to small failures as a property of very high performance engineered objects, things like a jet engine catastrophically failing due to a pipe wall being a few fractions of a millimeter too thin. But other complex engineered systems can also be susceptible to the right (or wrong) sort of very small failure. The National Transportation Safety Board has a report out on what caused the MV Dali container ship to lose power and crash into the Francis Scott Key Bridge in Baltimore in 2024. The culprit? The label on a single wire was in slightly the wrong position, which prevented the wire from being firmly connected. When the wire came loose, the ship lost power. Via the NTSB:

At Tuesday’s public meeting at NTSB headquarters, investigators said the loose wire in the ship’s electrical system caused a breaker to unexpectedly open -- beginning a sequence of events that led to two vessel blackouts and a loss of both propulsion and steering near the 2.37-mile-long Key Bridge on March 26, 2024. Investigators found that wire-label banding prevented the wire from being fully inserted into a terminal block spring-clamp gate, causing an inadequate connection.

The NTSB also has a video on its YouTube channel showing exactly what went wrong with the wire.

Apple and 3D printing titanium

Apple has an interesting piece on their use of 3D printing for their titanium-bodied watches. It’s rare to use 3D printing for large-volume production, due to its higher unit costs compared to other fabrication technologies. Apple seems to be using 3D printing on its watch bodies for two reasons: one is that because 3D printing is additive rather than subtractive (machining down a titanium forging), there’s less material waste, which they consider beneficial for decarbonization reasons. The other is that 3D printing makes it possible to fabricate part geometries that wouldn’t be possible using other fabrication methods.

Apple 2030 is the company’s ambitious goal to be carbon neutral across its entire footprint by the end of this decade, which includes the manufacturing supply chain and lifetime use of its products. Already, all of the electricity used to manufacture Apple Watch comes from renewable energy sources like wind and solar.

Using the additive process of 3D printing, layer after layer gets printed until an object is as close to the final shape needed as possible. Historically, machining forged parts is subtractive, requiring large portions of material to be shaved off. This shift enables Ultra 3 and titanium cases of Series 11 to use just half the raw material compared to their previous generations.

“A 50 percent drop is a massive achievement — you’re getting two watches out of the same amount of material used for one,” Chandler explains. “When you start mapping that back, the savings to the planet are tremendous.”

In total, Apple estimates more than 400 metric tons of raw titanium will be saved this year alone thanks to this new process.

The boring part of Bell Labs

Bell Labs, as I’ve noted several times, is famous for the number of world-changing inventions and scientific discoveries it generated over its history. It’s the birthplace of the transistor, the solar PV cell, and information theory, and it has accumulated more Nobel Prizes than any other industrial research lab. But the scientific breakthroughs and world-changing inventions were a small part of what Bell Labs did. Most people who worked there were engaged in the more prosaic work of making the telephone system work better and more efficiently. Elizabeth Van Nostrand has an interesting interview with her father, who worked in this “boring” part of Bell Labs:

Most calls went through automatically e.g. if you knew the number. But some would need an operator. Naturally, the companies didn’t want to hire more operators than they needed to. The operating company would do load measurements and, if the number of calls that needed an operator followed a Poisson distribution (so the inter-arrival times were exponential).

The length of time an operator took to service the call followed an exponential distribution. In theory, one could use queuing theory to get an analytical answer to how many operators you needed to provide to get reasonable service. However, there was some feeling that real phone traffic had rare but lengthy tasks (the company’s president wanted the operator to call around a number of shops to find his wife so he could make plans for dinner (this is 1970)) that would be added on top of the regular Poisson/exponential traffic and these special calls might significantly degrade overall operator service.

I turned this into my Master’s thesis. Using a simulation package called GPSS (General Purpose Simulation System, which I was pleasantly surprised to find still exists) I ran simulations for a number of phone lines and added different numbers of rare phone calls that called for considerable amounts of operator time. What we found was that occasional high-demand tasks did not disrupt the system and did not need to be planned for.
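The analytical answer mentioned in the interview can be sketched with the Erlang C formula from queueing theory, which gives the probability that a caller has to wait when calls arrive as a Poisson process and service times are exponential. This is a minimal illustration, not the method used in the thesis (which relied on GPSS simulation); the function names and the example traffic figures are assumptions chosen for illustration.

```python
def erlang_c(operators: int, offered_load: float) -> float:
    """Probability that an arriving call must wait (M/M/c queue).

    offered_load is arrival rate x mean service time, in erlangs.
    """
    if offered_load >= operators:
        return 1.0  # unstable queue: effectively every call waits
    # Erlang B via the standard recursion, which avoids large factorials.
    blocking = 1.0
    for n in range(1, operators + 1):
        blocking = offered_load * blocking / (n + offered_load * blocking)
    # Convert Erlang B (calls lost) to Erlang C (calls delayed).
    return operators * blocking / (operators - offered_load * (1.0 - blocking))


def min_operators(calls_per_hour: float, mean_call_minutes: float,
                  max_wait_probability: float) -> int:
    """Smallest number of operators keeping P(wait) at or below the target."""
    offered_load = calls_per_hour * mean_call_minutes / 60.0
    operators = 1
    while erlang_c(operators, offered_load) > max_wait_probability:
        operators += 1
    return operators


if __name__ == "__main__":
    # Hypothetical traffic: 120 operator-assisted calls per hour, 2 minutes each.
    print(min_operators(120, 2.0, 0.10))
```

A model like this covers only the regular Poisson/exponential traffic; the rare, lengthy tasks described in the interview fall outside its assumptions, which is why simulation was a natural tool for testing their effect.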

Transit timelines

Transit timelines is a very cool website that has transit system maps for over 300 different cities, going back to the 19th century. For each city you can step through time in five-year increments to look at the extent of the transit system, and compare the transit systems of multiple cities for a given period of time.

November 20, 2025

How ASML Got EUV

I am pleased to cross-post this piece with Factory Settings, the new Substack from IFP. Factory Settings will feature essays from the inaugural CHIPS team about why CHIPS succeeded, where it stumbled, and its lessons for state capacity and industrial policy. You can subscribe here.

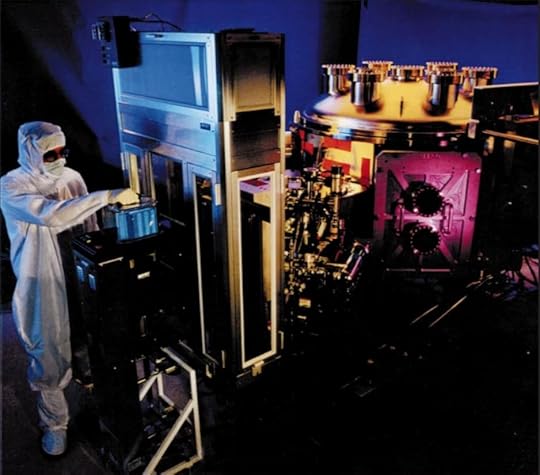

An EUV tool at Lawrence Livermore National Lab in the 1990s.

Moore’s Law, the observation that the number of transistors on an integrated circuit tends to double every two years, has progressed in large part thanks to advances in lithography: techniques for creating microscopic patterns on silicon wafers. The steadily shrinking size of transistors (from around 10,000 nanometers in the early 1970s to around 20-60 nanometers today) has been made possible by developing lithography methods capable of patterning smaller and smaller features.[1] The most recent advance in lithography is the adoption of Extreme Ultraviolet (EUV) lithography, which uses light at a wavelength of 13.5 nanometers to create patterns on chips.

EUV lithography machines are famously made by just a single firm, ASML in the Netherlands, and determining who has access to the machines has become a major geopolitical concern. However, though they’re built by ASML, much of the research that made the machines possible was done in the US. Some of the most storied names in US research and development — DARPA, Bell Labs, IBM Research, Intel, the US National Laboratories — spent decades of research and hundreds of millions of dollars to make EUV possible.

So why, after all that effort by the US, did EUV end up being commercialized by a single firm in the Netherlands?

How semiconductor lithography works

Briefly, semiconductor lithography works by selectively projecting light onto a silicon wafer using a mask. When light shines through the mask (or reflects off the mask in EUV), the patterns on that mask are projected onto the silicon wafer, which is covered with a chemical called photoresist. When the light strikes the photoresist, it either hardens or softens the photoresist (depending on the type). The wafer is then washed, removing any softened photoresist and leaving behind hardened photoresist in the pattern that needs to be applied. The wafer will then be exposed to a corrosive chemical, typically plasma, removing material from the wafer in the places where the photoresist has been washed away. The remaining hardened photoresist is then removed, leaving only an etched pattern in the silicon wafer. The silicon wafer will then be coated with another layer of material, and the process will repeat with the next mask. This process will be repeated dozens of times as the structure of the integrated circuit is built up, layer by layer.

Early semiconductor lithography was done using mercury lamps that emitted light of 436 nanometers wavelength, at the low end of the visible range. But as early as the 1960s, it was recognized that as semiconductor devices continued to shrink, the wavelength of light would eventually become a binding constraint due to a phenomenon known as diffraction. Diffraction is when light spreads out after passing through a hole, such as the openings in a semiconductor mask. Because of diffraction, the edges of an image projected through a semiconductor mask will be blurry and indistinct; as semiconductor features get smaller and smaller, this blurriness eventually makes it impossible to distinguish them at all.
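The link between wavelength and the smallest printable feature is commonly summarized by the Rayleigh criterion, where the minimum feature size is roughly k1 · λ / NA. The sketch below applies it to wavelengths mentioned in this piece; the k1 and numerical aperture (NA) values are illustrative assumptions held constant to isolate the effect of wavelength, not the parameters of any real tool.

```python
def min_feature_nm(wavelength_nm: float, numerical_aperture: float, k1: float) -> float:
    """Rayleigh estimate of the smallest printable feature, in nanometers."""
    return k1 * wavelength_nm / numerical_aperture


# Assumed, illustrative optics held fixed so only the wavelength changes.
ASSUMED_K1 = 0.4
ASSUMED_NA = 0.5

WAVELENGTHS_NM = {
    "mercury lamp (436 nm)": 436.0,
    "deep ultraviolet (193 nm)": 193.0,
    "extreme ultraviolet (13.5 nm)": 13.5,
}

for name, wavelength in WAVELENGTHS_NM.items():
    feature = min_feature_nm(wavelength, ASSUMED_NA, ASSUMED_K1)
    print(f"{name}: ~{feature:.1f} nm minimum feature")
```

With the optics held fixed, the estimate scales linearly with wavelength, which is why shorter wavelengths were one of the main levers for shrinking features.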

The search for better lithography

The longer the wavelength of light, the greater the amount of diffraction. To avoid eventually running into diffraction limiting semiconductor feature sizes, in the 1960s researchers began to investigate alternative lithography techniques.

One method considered was to use a beam of electrons, rather than light, to pattern semiconductor features. This is known as electron-beam lithography (or e-beam lithography). Just as an electron microscope uses a beam of electrons to resolve features much smaller than a microscope that uses visible light can, electron-beam lithography can pattern features much smaller than light-based lithography (“optical lithography”) can. The first successful electron lithography experiment was performed in 1960, and IBM extensively developed the technology from the 1960s through the 1990s. IBM introduced its first e-beam lithography tool, the EL-1, in 1975, and by the 1980s had 30 e-beam systems installed.

E-beam lithography has the advantage of not requiring a mask to create patterns on a wafer. However, the drawback is that it’s very slow, at least “three orders of magnitude slower than optical lithography”: a single 300mm wafer takes “many tens of hours” to expose using e-beam lithography. Because of this, while e-beam lithography is used today for things like prototyping (where not having to make a mask first makes iterative testing much easier) and for making masks, it never displaced optical lithography for large-volume wafer production.

Another lithography method considered by semiconductor researchers was the use of X-rays. X-rays have a wavelength range of just 10 to 0.01 nanometers, allowing for extremely small feature sizes. As with e-beam lithography, IBM extensively developed X-ray lithography (XRL) from the 1960s through the 1990s, though they were far from the only ones. Bell Labs, Hughes Aircraft, Hewlett Packard, and Westinghouse all worked on XRL, and work on it was funded by DARPA and the US Naval Research Lab.

For many years X-ray lithography was considered the clear successor technology to optical lithography. In the late 1980s there was concern that the US was falling behind Europe and Japan in developing X-ray lithography, and by the 1990s IBM alone is estimated to have invested more than a billion dollars in the technology. But as with e-beam lithography, XRL never displaced optical lithography for large-volume production, and it’s only been used for relatively niche applications. One challenge was creating a source of X-rays. This largely had to be done using particle accelerators called synchrotrons: large, complex pieces of equipment which were typically only built by government labs. IBM, committed to developing X-ray lithography, ended up commissioning its own synchrotron (which cost on the order of $25 million) in the late 1980s.

Part of the reason that technologies like e-beam and X-ray lithography never displaced optical lithography is that optical lithography kept improving, surpassing its predicted limits again and again. Researchers had been forecasting the end of optical lithography since the 1970s, but through various techniques, such as immersion lithography (using water between the lens and the wafer), phase-shift masking (designing the mask to deliberately create interference in the light waves to increase the contrast), multiple patterning (using multiple exposures for a single layer), and advances in lens design, the performance of optical lithography kept getting pushed higher and higher, repeatedly pushing back the need to transition to a new lithography technology. The unexpectedly long life for optical lithography is captured by Sturtevant’s Law: “the end of optical lithography is 6 – 7 years away. Always has been, always will be.”

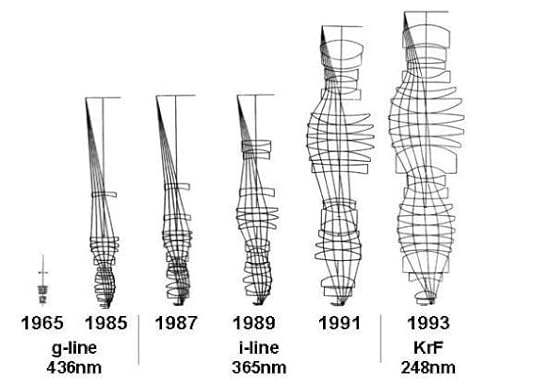

Advances in optical lithography lenses over time, via Bruning 2007. In addition to more complex lenses, shorter wavelengths of light were used.

The rise of EUV

In the early 1980s, Hiroo Kinoshita, a researcher at Japan’s Nippon Telegraph and Telephone (NTT), was researching X-ray lithography, but was becoming disillusioned by its numerous difficulties. The X-ray lithography technology being used was known as “X-ray proximity lithography” or XPL. Whereas in optical lithography light passed through a lens to reduce the image size projected onto the silicon wafer, because no known materials could make a reduction lens for X-rays, X-rays were projected directly onto the wafers without any sort of lens reduction. In part because of the lack of reduction (which meant that any imperfections in the mask wouldn’t be scaled down when projected onto the wafer), making masks for XPL proved exceptionally difficult.

However, while it’s not possible to focus X-rays with a lens, it is possible to reflect certain X-ray wavelengths with a mirror. A normal mirror will only reflect X-rays at very shallow angles, making it very hard to use them for a practical lithography system (the requirement of a shallow angle would make such a system gigantic); at steeper angles, X-rays will simply pass through the mirror. However, by constructing a special mirror from alternating layers of different materials, known as a “multilayer mirror”, light near the X-ray region of the spectrum can be reflected at much steeper angles. Multilayer mirrors use layers of different materials with different indices of refraction (how much light bends when entering a material) to create constructive interference: each layer boundary reflects a small amount of light, which (when properly designed) adds together with the reflection from the other layers. (Anti-reflective coatings use a similar principle, but instead use multiple layers to create destructive interference to eliminate reflections.)
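The constructive-interference condition can be made concrete with an idealized calculation. Assuming refraction inside the layers is negligible (a simplification; real mirror designs correct for it), reflections from successive layer pairs add in phase when the pair thickness d satisfies 2 · d · cos(θ) = m · λ, where θ is measured from the mirror normal. The function name and example angles below are illustrative assumptions.

```python
import math


def bilayer_period_nm(wavelength_nm: float, angle_from_normal_deg: float = 0.0,
                      order: int = 1) -> float:
    """Layer-pair thickness giving in-phase reflections (idealized, no refraction)."""
    cos_theta = math.cos(math.radians(angle_from_normal_deg))
    return order * wavelength_nm / (2.0 * cos_theta)


# At normal incidence, 13.5 nm light calls for layer pairs half a wavelength thick.
print(bilayer_period_nm(13.5))        # 6.75
print(bilayer_period_nm(13.5, 10.0))  # slightly thicker for off-normal incidence
```

Under these assumptions each layer pair is only a few nanometers thick, which hints at why fabricating such mirrors was so demanding.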

The first multilayer mirrors that could reflect X-rays were built in the 1940s, but they were impractical because the mirrors were made from gold and copper, which quickly diffused into each other, degrading the mirror. But by the 1970s and 1980s, the technology for making these constructive interference-creating mirrors had dramatically improved. In 1972 researchers at IBM successfully built a 10-layer multilayer mirror that reflected a significant fraction of light in the 5 to 50 nanometer region, and in 1981 researchers at Stanford and the Jet Propulsion Laboratory built a 76-layer mirror from alternating layers of tungsten and carbon. A few years later researchers at NTT also successfully built a multilayer tungsten and carbon film, and based on their success Kinoshita, the researcher at NTT, began a project to leverage these multilayer mirrors to create a lithography system. In 1985 his team successfully projected an image using what were then called “soft X-rays” (light in roughly the 2 nanometer to 20 nanometer range) reflected off of multilayer mirrors for the first time.[2] That same year, researchers at Stanford and Berkeley published work showing that a multilayer mirror made from molybdenum and silicon could reflect a very large fraction of light near the 13 nanometer wavelength. Because X-rays in a lithography tool will bounce off of multiple mirrors (a modern EUV tool might have 10 mirrors), reflecting a large portion of them is key to making a lithography tool practical; too little reflection and the light will be too weak by the time it reaches the wafer.
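A quick calculation shows why per-mirror reflectivity matters so much: the light surviving a chain of mirrors falls off as the reflectivity raised to the number of bounces. The reflectivity values below are assumptions for illustration, not measured figures; the 10-mirror count is the rough figure quoted above.

```python
def surviving_fraction(reflectivity: float, mirrors: int) -> float:
    """Fraction of light left after reflecting off each mirror once."""
    return reflectivity ** mirrors


MIRRORS = 10  # rough count for a modern EUV tool, per the text

for assumed_reflectivity in (0.3, 0.5, 0.7):
    fraction = surviving_fraction(assumed_reflectivity, MIRRORS)
    print(f"R = {assumed_reflectivity:.0%}: {fraction:.4%} of the light reaches the wafer")
```

Even with an assumed 70% reflectivity per mirror, only a few percent of the light survives ten bounces, and at 30% essentially none does.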

Initially people in the field were skeptical about the prospects of a reflective X-ray lithography system. When presenting this research in Japan, Kinoshita noted that his audience was “highly skeptical of his talk” and that they were “unwilling to believe that an image had actually been made by bending X-rays”. The same year, when Bell Labs researchers suggested to the American government that soft X-rays with multilayer mirrors could be used to create a lithography system, they received an “extremely negative reaction”; reviewers argued that “even if each of the components and subsystems could be fabricated, the complete lithography system would be so complex that its uptime would be negligible.” When researchers at Lawrence Livermore National Lab, after learning of Kinoshita’s work, presented a paper on their own soft X-ray lithography work in 1988, reception was similarly negative. One paper author noted that “You can’t imagine the negative reception I got at that presentation. Everybody in the audience was about to skewer me. I went home with my tail between my legs…”

Despite the negative reactions, work on soft X-ray lithography continued to advance at NTT, Bell Labs, and Livermore. Kinoshita’s research group at NTT designed a new two-mirror soft X-ray lithography system, and used it to successfully print patterns with features 500 nanometers wide. When Kinoshita presented this work at a 1989 conference in California, a Bell Labs researcher named Tania Jewell became extremely interested, and “deluged” him with questions. The next year, Bell Labs successfully printed a 50 nanometer pattern using soft X-rays. The 1989 conference, and the meeting between NTT and Bell Labs, has been called the “dawn of EUV”.

Work on soft X-ray lithography continued in the 1990s. Early soft X-ray experiments had been done with synchrotron radiation, but a synchrotron would be difficult to make into a practical light source for high-volume production, so researchers looked for alternative ways to generate soft X-rays. One strategy for doing this is to heat certain materials, such as xenon or tin, enough to turn them into a plasma. This can be done using either lasers (creating laser produced plasma, or LPP) or electrical currents (creating discharge produced plasma, or DPP). Development of LPP power sources began in the 1990s, but creating such a system was enormously difficult. Turning material into a plasma generated debris which reduced the life of the extremely sensitive multilayer mirrors, and a “great deal of effort [was] put into designing and testing a variety of debris minimization schemes”. One strategy that proved to be very successful was to minimize the amount of debris by creating a “mass limited target”: minimizing the amount of material to be heated into plasma by emitting it as a series of microscopic droplets. Over time, these and other strategies allowed for longer and longer mirror life.

Another major challenge was manufacturing sufficiently precise multilayer mirrors. In 1990, mirrors could be fabricated with at most around 8 nanometers of precision, but a practical soft X-ray lithography system demanded 0.5 nanometer precision or better. NTT had obtained its first multilayer mirrors from Tinsley (the US firm that had built the ultra-precise mirrors for the Hubble Space Telescope), and with NTT’s encouragement Tinsley was able to fabricate mirrors of 1.5 to 1.8 nanometer accuracy in 1993. Similar work on mirror accuracy was done at Bell Labs (with assistance from researchers at the National Institute of Standards and Technology), and during the 1990s the precision of multilayer mirrors continued to improve.

As work on it was proceeding, a change in name for soft X-ray technology was suggested. “Soft X-ray” was thought to be too close to X-ray proximity lithography, which worked on different principles (i.e., it had no mirrors) and had developed a negative reputation thanks to its difficult development history. So in 1993 the name was changed to Extreme Ultraviolet Lithography, or EUV. The wavelengths being used were at the very bottom of the ultraviolet spectrum, and the name created associations with “Deep Ultraviolet Lithography” (DUV), a lithography technique based on 193-nanometer light, which was then being used successfully.

Organizational momentum behind EUV continued to build. In the early 1990s Sandia National Labs, using technology developed for the Strategic Defense Initiative, partnered with Bell Labs to demonstrate a soft X-ray lithography system using a laser produced plasma. In 1991, Japanese corporations Nikon and Hitachi also began to research EUV technology. That same year, the Defense Advanced Research Projects Agency (DARPA) began to fund lithography development via its Advanced Lithography Program, and by 1996 Sandia National Labs and Lawrence Livermore lab had committed around $30 million to EUV development (with a similar amount contributed by several private companies). In 1992, Intel committed $200 million to the development of EUV, most of which funded research work at Sandia, Livermore and Bell Labs. In 1994, the US formed the National EUV Lithography Program, made up of researchers from the national labs (Livermore, Berkeley, and Sandia), and led by DARPA and the DOE.

EUV-LLC

In 1996, Congress voted to terminate DOE funding for EUV research. Without funding to keep the research community together, the national lab researchers would be reassigned to other tasks, and much of the knowledge around EUV might dissipate. At the time, there were still numerous difficulties with EUV, and it was far from obvious it would be the successor lithography technology: a 1997 lithography task force convened by SEMATECH (a US semiconductor industrial consortium) ranked EUV last of four possible technologies behind XPL, e-beam lithography, and ion projection lithography.

Despite the uncertainty, Intel placed a bold bet on the future of EUV, and stepped in with around $250 million in funding to keep the EUV research program alive. It formed a consortium known as EUV-LLC, which contracted with the Department of Energy to fund EUV work at Sandia, Berkeley, and Livermore national labs. Other major US firms, including Motorola, AMD, IBM, and Micron, also joined the consortium, but Intel remained the largest and most influential shareholder, the “95% gorilla”. Following the creation of EUV-LLC, Europe and Japan formed their own EUV research consortiums: EUCLIDES in Europe and ASET in Japan.

When EUV-LLC was formed, US lithography companies had been almost completely forced out of the global marketplace. Japanese firms Nikon and Canon held a 40% and 30% share of the market, respectively, and third place was held by an up-and-coming Dutch firm called ASML, which held 20% market share. The members of EUV-LLC, not least Intel, wanted a major foreign lithography firm to join the consortium to help ensure EUV became a global standard. However, the prospect of funding the development of advanced semiconductor technology, only to hand that technology over to a national competitor (especially a Japanese competitor who had so recently been responsible for decimating the US semiconductor industry), wasn’t an easy sell. Nikon declined to participate in EUV-LLC in part due to the resulting controversy, and Canon was ultimately prevented from joining by the US government.

ASML, however, was different. Being located in the Netherlands, it was considered “neutral ground” in the semiconductor wars between the US and Japan. Intel, whose main concern was that it could itself get access to the next generation of lithography tools regardless of who produced them, strongly advocated that ASML be allowed to procure a license. (One executive at the US lithography company Ultratech Stepper complained that Intel had “done everything in their power to give the technology to ASML on a silver platter.”) In 1999, ASML was allowed to join EUV-LLC and gain a license for its technology, provided that it used a sufficient quantity of US components in the machines it built and opened a US factory — conditions that it never met.

Left outside of the EUV-LLC consortium, Nikon and Canon never successfully developed EUV technology. And neither did any US firms. Silicon Valley Group, a US lithography tool maker which had licensed EUV technology, was bought by ASML in 2001, and Ultratech Stepper, another US licensee, opted not to pursue it. ASML, in partnership with German optics firm Carl Zeiss, became the only lithography firm to take EUV technology across the finish line.

Conclusion

Over the next several years, EUV-LLC proved to be a huge success, and when the program ended in 2003, it had met all of its technical goals. EUV-LLC had successfully built a test EUV lithography tool, made progress on both LPP and DPP light sources, developed masks that would work with EUV, created better multilayer mirrors, and filed for over 150 patents. Thanks in large part to Intel’s wager, EUV would ultimately become the lithography technology of the future, a technology that would entirely be in the hands of ASML.

This future took much longer to arrive than expected. When the EUV-LLC program concluded in 2003, US semiconductor industry organization SEMATECH stepped in to continue funding work on commercialization. ASML shipped its first prototype EUV lithography tool in 2006, but with very weak DPP power sources. A US company, Cymer (later acquired by ASML), was developing a better power source using a laser-produced plasma, but working out the problems with it took years and required further investment from Intel. Making defect-free EUV masks proved to be similarly difficult. EUV development proved to be so difficult that ASML ultimately required billions of dollars in investment from TSMC, Samsung, and Intel to fund its completion: the three companies invested $1 billion, $1 billion, and $4 billion, respectively, in ASML in 2012 in exchange for shares of the company. ASML didn’t ship its first production EUV tool until 2013, but development work on things like the power source (often funded by the US) continued for years afterwards. Intel, worried about the difficulties of getting EUV into high-volume production, made the ultimately disastrous decision to try to push optical lithography technology one more step for its 10 nanometer process.

But today, after decades of development, EUV has arrived. TSMC, Intel, and Samsung, the world’s leading semiconductor fabricators, are all using EUV in production. And they are all using lithography tools built by ASML for it.

An important takeaway from the story of EUV is that developing a technology that works, and successfully competing with that technology in the marketplace, are two different things. Thanks to contributions from researchers around the world, including a who’s who of major US research organizations — DARPA, Bell Labs, the US National Labs, IBM Research — EUV went from unpromising speculation to the next generation of lithography technology. But by the time it was ready, US firms had been almost entirely forced out of the lithography tools market, leaving EUV in the hands of a single European firm to take it across the finish line and commercialize.

[1] Modern semiconductor processes often have names that imply smaller sizes (TSMC’s 7 nm node, Intel’s 10 nm node), but these are essentially just names that don’t correspond with actual feature sizes.

[2] Definitions for what light is considered to be “soft X-ray” don’t seem especially consistent. One article notes that “the terms soft X-ray and extreme ultraviolet aren’t well defined.”

November 15, 2025

Reading List 11/15/25

Bristol 188 supersonic research aircraft.

Welcome to the reading list, a weekly roundup of news and links related to buildings, infrastructure, and industrial technology. This week we look at Israel refilling a lake with desalinated seawater, South Korean nuclear subs, ways to make titanium cheap, a “new Bell Labs”, and more. Roughly two-thirds of the reading list is paywalled, so for full access become a paid subscriber.

Housekeeping items this week:

IFP has started a new Substack, Factory Settings, about the CHIPS Act and how it succeeded.

Sea of Galilee

The inaptly named Sea of Galilee is a large lake in Israel, and the supposed location of many of Jesus’ miracles (including him walking on water). The lake supplies around 10% of Israel’s drinking water, but water levels in the lake have declined in recent years.

Via Giame and Artzy 2022.

To try to prevent declining water levels, Israel is now pumping large amounts of desalinated seawater into the lake. Via the Times of Israel:

The Water Authority has started channeling desalinated water to the Sea of Galilee, marking the first ever attempt anywhere in the world to top up a freshwater lake with processed seawater.

The groundbreaking project, years in the making and a sign of both Israel’s success in converting previously unusable water into a vital resource and the rapidly dropping water levels in the country’s largest freshwater reservoir, was quietly inaugurated on October 23.

The desalinated water enters the Sea of Galilee via the seasonal Tsalmon Stream, entering at the Ein Ravid spring, some four kilometers (2.5 miles) northwest of what is Israel’s emergency drinking source.

Firas Talhami, who is in charge of the rehabilitation of water sources in northern Israel for the Water Authority, told The Times of Israel that he expected the project to raise the lake’s level by around 0.5 centimeters (0.2 inches) per month.

The move has also reactivated the previously dried-out spring, allowing visitors to once again paddle down the Tsalmon, which now flows with desalinated water.

Iran drought

Israel isn’t the only Middle Eastern country facing water problems. The capital of Iran, Tehran, is facing an acute water shortage. This crisis has been brewing for months, and has now gotten so bad that the city may become “uninhabitable” if the current drought continues. Via Reuters:

President Masoud Pezeshkian has cautioned that if rainfall does not arrive by December, the government must start rationing water in Tehran.

“Even if we do ration and it still does not rain, then we will have no water at all. They (citizens) have to evacuate Tehran,” Pezeshkian said on November 6.

The stakes are high for Iran’s clerical rulers. In 2021, water shortages sparked violent protests in the southern Khuzestan province. Sporadic protests also broke out in 2018, with farmers in particular accusing the government of water mismanagement.

The water crisis in Iran after a scorching hot summer is not solely the result of low rainfall.

Decades of mismanagement, including overbuilding of dams, illegal well drilling, and inefficient agricultural practices, have depleted reserves, dozens of critics and water experts have told state media in the past days as the crisis dominates the airwaves with panel discussions and debates.

Pezeshkian’s government has blamed the crisis on various factors such as the “policies of past governments, climate change and over-consumption”.

Colorado River negotiations

In US water news, the Colorado River Compact is an agreement that determines how seven southwest states (California, Nevada, Arizona, New Mexico, Utah, Colorado, and Wyoming) divide up water from the Colorado River. The compact allocates a specific amount of water to each state. However, the total amount allocated to the various states exceeds the typical flow of the Colorado River, possibly because allocations were decided during a period of unusually high flow. New agreements have been needed to determine how water is allocated in these conditions, and states don’t always have an easy time coming to an agreement. From the Colorado Sun:

The rules that govern how key reservoirs store and release water supplies expire Dec. 31. They’ll guide reservoir operations until fall 2026, and federal and state officials plan to use the winter months to nail down a new set of replacement rules. But negotiating those new rules raises questions about everything from when the new agreement will expire to who has to cut back on water use in the basin’s driest years.

And those questions have stymied the seven state negotiators for months. In March 2024, four Upper Basin states — Colorado, New Mexico, Utah and Wyoming — shared their vision for what future management should look like. Three Lower Basin states — Arizona, California and Nevada — released a competing vision at the same time. The negotiators have suggested and shot down ideas in the time since, but they have made no firm decisions.

…The Department of the Interior is managing the process to replace the set of rules, established in 2007, that guide how key reservoirs — lakes Mead and Powell — store and release water.

The federal agency plans to release a draft of its plans in December and have a final decision signed by May or June. If the seven states can come to agreement by March, the Department of the Interior can parachute it into its planning process, said Scott Cameron, acting head of the Bureau of Reclamation, during a meeting in Arizona in June.

If they cannot agree, the feds will decide how the basin’s water is managed.

South Korean nuclear submarines

Given South Korea’s proficiency in shipbuilding and in nuclear reactor construction, it’s always been somewhat surprising to me that South Korea doesn’t build nuclear submarines. Apparently this is due to non-proliferation restrictions that prevent Korea from producing enriched uranium for submarine reactors, as uranium enrichment could also be used to produce nuclear weapons.

Now though, the Trump administration has approved South Korean nuclear submarine construction, apparently as part of a deal where South Korea will invest in US shipbuilding capabilities. From Naval News:

The announcement came following a meeting with various Asian heads of state including South Korean President Lee Jae-Myung in Gyeongju, South Korea. Additional posts by Trump on Truth Social have detailed that the Submarines will be built on U.S soil at the Philadelphia shipyards, which were acquired by the Korean defense firm Hanwha late in 2024.

Subsequently, the construction of Nuclear submarines marks a departure from past efforts, as previous South Korean submarine construction has focused primarily on conventionally powered submarines. In tandem with this, South Korean Nuclear Submarine construction projects have remained in limbo for sometime as the U.S had not given tacit approval until President Trump’s statement.

However, as the Philadelphia Shipyards where construction will take place is not currently equipped to handle the construction of Nuclear Submarines (only commercial vessels have been produced), Hanwha has reportedly invested an additional $5 billion dollars into modernization and preparation. Despite this, there has been a lack of a concrete agreement regarding the development of the shipyards and a plan for the construction of the submarines with no official signature from the South Korean side.

These agreements are the conclusion of a long standing desire for nuclear powered submarines expressed by the South Korean government and military. Naval News has previously reported that subsequent efforts for a Nuclear Submarines have been born of increasingly intense operational needs for endurance and a deterrent towards neighboring nations such as North Korea, China, and Russia.

November 13, 2025

What Is A Production Process?

Corning ribbon machine, via The Henry Ford.

Below is the first chapter of my book, The Origins of Efficiency, available now on Amazon, Barnes and Noble, and Bookshop.

In 1880, Thomas Edison was awarded a patent for his electric incandescent light bulb, marking the beginning of the age of electricity. Although it was the result of thousands of hours of research that took place over decades by Edison and his many predecessors, the ultimate design of Edison’s light bulb was simple, consisting of just a few components: a filament, a thin glass tube in which the filament was mounted, a pair of lead-in wires, a base, and the glass bulb itself.

Until the 20th century, light bulbs were largely manufactured by hand. Workers would run the lead-in wires through the inner glass tube, attach the filament to the lead-in wires, and attach the glass tube to the bulb. A vacuum pump would then suck the air out of the bulb. Initially, this was done by connecting the pump to the top of the bulb, leaving a small tip of glass that had to be cut off. Later, tipless bulbs were developed that had the air removed from the bottom.

Most of this manufacturing process was done in house by Edison’s Electric Light Company, but the production of the glass bulb itself, known as a bulb blank, was outsourced. Edison placed his first order for bulb blanks with the Corning Glass Works company in 1880. The process of making the bulb blanks was fairly straightforward: Glassworkers would mix together sand, lead, and potassium carbonate, along with small quantities of niter, arsenic, and manganese oxide, place the mixture in a crucible, and melt it in a furnace into liquid glass. A worker called a gaffer would then gather a blob of glass on the end of a hollow iron tube and place the blob into a mold the shape of a light bulb. While the blob was still attached to the iron tube, the gaffer would blow into it to form the body of the bulb, then open the mold and cut the bulb from the end of the tube.

We can draw this series of steps using a process flow diagram, a visual representation of how a process unfolds. See Figure 2 for an example of what the bulb blank process might look like. Making bulb blanks is an example of what we’ll call a production process—a series of steps through which input materials are transformed incrementally into a finished product. Each step in the process induces some change in the input material. The changed material is then passed on to the next step, which makes another change, and so on, until the finished product comes out the other side. In the bulb blank process, sand, lead, and other chemicals are the inputs. These are gradually transformed by heat, chemical reactions, and physical manipulation until a finished bulb blank emerges at the other end.

In turn, this output might be the input to a subsequent process. Bulb blanks, for instance, would then be sent to Edison’s factory to be assembled into complete light bulbs. Likewise, the input materials for the manufacture of bulb blanks were themselves the output of some other production process. Potassium carbonate, for example, was mined from potassium ore and then refined using the Leblanc process.

Outside of the small number of things we can obtain directly from nature, all products of civilization are the result of some sort of production process—some series of transformations that take in raw materials, energy, labor, and information and produce goods and services. At first glance, services might seem far removed from the production of physical goods like cars or shoes, but the same basic model applies. A house cleaner, for example, goes through a specific series of steps—cleaning the bedrooms, then the bathrooms, then the kitchen—using various inputs—labor, electricity, cleaning products—to transform an input—a dirty house—into an output—a clean one. These processes might be comparatively simple, such as the production of light bulb blanks, or exceptionally complex, with hundreds or even thousands of steps. One 19th-century watch factory boasted that its watches “required 3,700 distinct operations to produce,” while a 1940s Cadillac — a relatively simple automobile by modern standards — required nearly 60,000 separate operations.

Even everyday objects can mask a great deal of production complexity. In his book The Toaster Project, Thomas Thwaites disassembles a $7 toaster to find that it contains 404 parts made up of more than a hundred different materials. And if we follow the chain of production further back, to the processes required to make the various input materials (and the processes to make the inputs for those processes, and so on), we find a sprawling mass of complexity for even the simplest products of civilization. In his famous 1958 essay “I, Pencil,” Leonard Read notes that a full accounting of the inputs required to make an ordinary pencil—the steel used to make the tools to harvest the cedar, the ships used to transport the graphite from Sri Lanka to the factory, the agricultural equipment used to grow the castor beans to produce the lacquer—involves the work of millions of people all over the world.

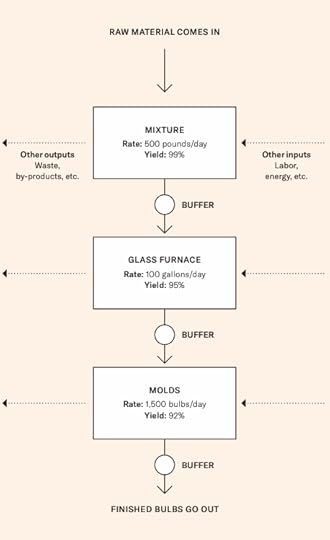

Figure 2. Process flow diagram of a bulb blank production process.

Five factors of the production process

Now that we have a basic model for how things get produced, we can add a bit of detail to the description, identifying five distinct factors of the production process. This slightly more regimented structure will be useful for pinpointing discrete sites of intervention that can improve the efficiency of a production process.

First is the transformation method itself. In bulb blank production, one transformation method is the process of blowing the glass bulbs. Of course, each transformation is itself made up of many steps (gathering the glass on a blowpipe, placing the mold around it, blowing while a worker holds it), which in turn might be made up of substeps (such as individual worker motions). Different situations will call for varying degrees of fidelity in describing a process—the scientific management movement of the early 20th century, for example, spent a great deal of time studying specific worker motions—but it will always be a simplified model that omits many details of what is actually occurring.

The idea of a well-defined transformation or series of transformations is something of a simplification, as there will inevitably be some degree of variation in the specific actions taken during a step. For a machine, this variation will be very small and occur in narrowly defined ways, but the farther we get from modern industrial production processes, the less true this becomes. A person might perform the same step slightly differently each time and modify their technique over time as they get more skilled. And craft production methods often require some degree of deciding what the next step should be. A glassblower blowing bulbs without a mold, for instance, will decide how hard to blow based on how they see the bulb taking shape.

Second, to understand how efficient a production process is, we need some idea of how much time the process takes. It obviously makes a big difference whether the bulb blank factory can produce 10 or 10,000 bulbs a day. Using bulb molds, three workers could produce about 150 bulbs per hour, or roughly 1500 per day. This is called the production rate. Each step in the process will have its own rate, and these rates may differ from those of other steps. For example, filling the glass crucibles might be done just once a week, even though glassworkers were producing bulb blanks daily.

Third, to determine how much a given production process costs, we need to account for all the direct material inputs and outputs to the process. At the furnace step, raw materials go in and molten glass comes out. At the blowing step, molten glass goes in and a bulb comes out. Depending on how detailed we decide to be, we might also include inputs like the coal that fuels the furnace and outputs like the ash and smoke produced by the furnace. There are labor inputs as well. The blowing step, for instance, requires the labor of two or three workers to gather the glass, work the mold, and cut the finished bulb free.

We also need to account for the indirect inputs—things that aren’t used directly by the process but are nevertheless necessary. A factory’s rent can’t be directly attributed to any particular operation within the factory, but the building is still an important input to the process. We can account for this cost by attributing some fraction of it to each step. Similarly, we can assign some fraction of the cost of the equipment, administration, insurance, and any other overhead costs to each step in the process. (The question of how best to assign these indirect costs is an involved area of accounting, but broadly speaking, these costs will be spread over the amount of output we produce.)

Fourth, to understand whether the process is efficiently arranged, we need to keep track of how much material is in the process at any given time. At any point, some material is actively being worked on and some is waiting to be worked on. In bulb blank production, once the raw materials had been added to the crucible, it might take a while before the glass was gathered by workers and blown into bulbs. If crucibles were filled once a week, there would be about half a week’s worth of molten glass waiting to be turned into bulbs at any given time. Any material that isn’t currently being worked on is considered to be in a buffer of available material. The total amount of material in the system—that is, the combination of what’s in the buffer and what’s being worked on—is collectively known as work in process.

Fifth and finally, in evaluating a production process we need to make note of how the output of the process varies. While it’s tempting to think of a step as producing the exact same output every time, there will inevitably be some variation. At times, the process may simply fail. For example, in some cases, the furnace would produce a batch of glass that was unsuitable for bulbs. In other cases, the crucibles that held the molten glass would crack, spilling the glass before it could be turned into bulbs.

But there will also be more subtle sources of variation. For instance, the composition of the glass and the thickness of the bulbs would differ slightly, perhaps imperceptibly, from bulb to bulb. No two bulbs were exactly alike. This discrepancy can be a natural outcome of the process, the result of a disparity in the inputs, or due to variation in the environment in which the process takes place. The quality of the bulb glass, for example, was greatly dependent on the quality of the chemicals used, how well they were mixed, and the temperature of the furnace.

One simple way of characterizing variation is in terms of yield—the fraction of inputs that are successfully transformed into outputs. A yield of 50 percent would characterize a process that is only successful half the time. An unsuccessful transformation might be a complete failure (a bulb falls on the floor and breaks) or one that is simply outside the range of acceptable tolerance (the glass on the bulb was slightly too thin). In many cases, however, it will be useful to have a more detailed characterization of the variation in a process. In the production of light bulb filaments, very slight differences in temperature during the carburizing process resulted in the filament producing different amounts of light. Understanding how the resulting filaments varied was, therefore, necessary to determine how many bulbs of a given illumination could be produced. It might turn out that the variation in illumination could be described by a normal distribution with a particular mean and standard deviation, making it possible to track disruptions to the process by looking at whether values fell outside of the expected range. For now, we’ll just note that variation is an important factor to consider without worrying about developing a certain measurement for it.
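The yield and expected-range ideas above can be sketched in a few lines of Python. This is only an illustration: the readings, the target mean and standard deviation, and the three-sigma cutoff are all assumed values chosen for the example, not figures from the historical filament process.

```python
from statistics import NormalDist


def process_yield(good_units: int, total_units: int) -> float:
    """Fraction of inputs successfully transformed into acceptable outputs."""
    if total_units <= 0:
        raise ValueError("total_units must be positive")
    return good_units / total_units


def out_of_range(values, mean, std_dev, n_sigma=3.0):
    """Return the values that fall outside mean +/- n_sigma * std_dev."""
    low = mean - n_sigma * std_dev
    high = mean + n_sigma * std_dev
    return [v for v in values if v < low or v > high]


# Hypothetical filament illumination readings, in arbitrary units.
readings = [98.0, 101.5, 100.2, 99.1, 112.0, 100.8]

# Readings outside the expected range hint at a disruption in the process.
flagged = out_of_range(readings, mean=100.0, std_dev=2.0)

# If illumination really were normally distributed, this is the share of
# filaments expected to land within 1 unit of the target.
dist = NormalDist(mu=100.0, sigma=2.0)
share_near_target = dist.cdf(101.0) - dist.cdf(99.0)

print(process_yield(50, 100), flagged, round(share_near_target, 3))
```

A single yield number answers "how often does the step succeed," while the distribution answers "how many outputs of a given quality can we expect," which is the more detailed characterization the filament example calls for.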

Looking at a single step in the process, we now have five factors that characterize it:

The transformation method itself. For example, the act of blowing molten glass into a mold.

The production rate. For example, how many molds the gaffers can fill in an hour.

The inputs and outputs, along with their associated costs. For example, the molten glass, the gaffer’s wages, and wear and tear on the molds and blowpipes.

The size of the buffer. For example, how much molten glass is stored in the furnace waiting for the gaffer.

The variability of the output. For example, fluctuations in how fast the gaffer works and the thickness of the bulbs produced, or how often the gaffer drops and breaks a bulb.
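One way to make this five-factor description concrete is to write it down as a small data structure. The sketch below is a simplified illustration, not a model from the book: the class name, field names, cost figures, and the 90 percent yield are hypothetical, and variability is reduced to a single yield fraction. Only the 150 bulbs per hour rate comes from the text.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessStep:
    name: str
    method: str                      # 1. the transformation method
    rate_per_hour: float             # 2. the production rate (attempts per hour)
    input_costs: dict[str, float] = field(default_factory=dict)  # 3. cost per attempt, by input
    buffer_units: float = 0.0        # 4. material waiting in the buffer
    yield_fraction: float = 1.0      # 5. variability, simplified to a yield

    def cost_per_attempt(self) -> float:
        return sum(self.input_costs.values())

    def cost_per_good_unit(self) -> float:
        # Failed attempts still consume inputs, so low yield raises unit cost.
        return self.cost_per_attempt() / self.yield_fraction

    def good_units_per_hour(self) -> float:
        return self.rate_per_hour * self.yield_fraction


# Hypothetical numbers for the blowing step (dollars per attempt).
blowing = ProcessStep(
    name="blowing",
    method="blow molten glass into a mold",
    rate_per_hour=150,
    input_costs={"molten glass": 0.010, "labor": 0.020, "mold wear": 0.002},
    buffer_units=500,
    yield_fraction=0.9,
)

print(blowing.good_units_per_hour(), round(blowing.cost_per_good_unit(), 4))
```

Each field corresponds to one place where an efficiency improvement can act: a new method, a faster rate, cheaper inputs, a smaller buffer, or a higher yield.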

This is, of course, a highly simplified model. For one thing, it omits the complexity of what specifically occurs during each step. For another, it suggests that these factors are steady over time, but in reality they will frequently be in flux. The variation in output may rise when a new worker starts, or at the end of the day when workers are tired, or over a long period of time as workers or managers grow complacent. Alternatively, variation may go down over time as workers gain experience and precision improves.

This model also doesn’t include the many possible ways one step may influence another step, beyond how fast the step runs. The temperature of the glass furnace might influence how easy it is to blow the bulb into the mold, for example. Likewise, variation in one process may be a function of variation in some previous process. Bulbs breaking when the mold is removed, for instance, might be a function of inconsistent mixing of the ingredients or uneven temperature of the molten glass.

Finally, this model doesn’t include any specifics about what is actually being produced. As we’ll see later on, the form of the product and the method of production are intimately connected, and a change in one generally results in a change in the other.

Despite its various simplifications, however, this model gives us a useful way to structure our thinking about production processes and how they can be made more efficient.

Figure 3. Process sketch of a bulb blank process showing inputs, outputs, buffers, production rates, and yields.

Improvements to the process

The goal of any efficiency improvement is to minimize the costs of producing something. If we’re running a bulb blank factory, we want to figure out how to produce those bulb blanks as cheaply as possible, which means using the fewest, lowest-cost inputs we can. The way to do this is to change one or more of these five factors.

First, we can change the transformation method itself to one that requires fewer resources. The very first bulb blanks produced by Corning didn’t use molds but were produced using a much slower free-hand method, which entailed manually rolling out tubes of glass. Changing the bulb-blowing process to the mold method greatly increased output and decreased the labor required for each bulb, such that workers went from producing 165 bulbs on the first day to 150 an hour.

Second, we can try to improve the rate of production and take advantage of economies of scale—the fact that per-unit costs tend to fall as production volume rises. Glass furnaces in the bulb blank factory ran continuously, because starting a furnace cold took a great deal of time (24 hours or more) and was very likely to damage the crucibles. The furnaces were, therefore, burning coal regardless of whether glass was being blown and bulbs produced. Similarly, the rent needed to be paid whether the factory was producing bulb blanks or not. For these reasons, a factory that manufactured bulbs continuously over 24 hours would have lower unit costs than a factory that only operated for eight hours a day (and, in fact, some glass manufacturers did run continuously for this reason).
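The effect of running hours on unit cost can be shown with a short calculation. This is a minimal sketch under stated assumptions: the fixed daily cost (coal and rent) and the variable cost per bulb are made-up dollar figures, and the model assumes labor and material costs scale directly with output. Only the 150 bulbs per hour rate comes from the text.

```python
def unit_cost(fixed_cost_per_day: float,
              variable_cost_per_unit: float,
              units_per_hour: float,
              hours_per_day: float) -> float:
    """Cost per unit when a fixed daily cost is spread over the day's output."""
    if not 0 < hours_per_day <= 24:
        raise ValueError("hours_per_day must be in (0, 24]")
    units = units_per_hour * hours_per_day
    return variable_cost_per_unit + fixed_cost_per_day / units


# Hypothetical costs: $36 per day for coal and rent, paid regardless of
# output, plus $0.02 per bulb in labor and materials.
one_shift = unit_cost(36.0, 0.02, units_per_hour=150, hours_per_day=8)
continuous = unit_cost(36.0, 0.02, units_per_hour=150, hours_per_day=24)

print(f"8 hours: ${one_shift:.3f} per bulb, 24 hours: ${continuous:.3f} per bulb")
```

The variable cost per bulb is the same in both cases. The entire difference comes from dividing the same fixed cost by three times as many bulbs.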

Third, we can try to reduce variation in the process. The quality of the glass was dependent on the temperature of the furnace: Variations in temperature would result in glass that would break after a short period of use. Reducing temperature variation would, therefore, result in more glass within acceptable bounds, producing a higher yield.

Fourth, we can try to decrease the costs of our inputs. Replacing the hand-blowing process with the bulb mold process not only reduced the amount of labor required but also enabled the factory to use less expensive labor, since the molding process required less skill.

Fifth, we can try to reduce our work in process by decreasing the size of our buffers. Work in process is material that has been paid for but hasn’t yet been sold—it’s an investment that has yet to yield a return. If glass furnaces were filled with new glass once a week, on average there would be half a week’s worth of glass simply sitting idle in the factory. If the crucibles were instead half the size and filled twice a week, on average there would only be a quarter of a week’s supply of glass in the crucibles, reducing work in process by 50 percent.
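The half-week and quarter-week figures follow from averaging the crucible level over a fill cycle. The sketch below checks this numerically, assuming (as the paragraph implicitly does) that glass is drawn down at a constant rate and the crucible runs empty just as it is refilled. The function name and the one-minute time step are choices made for the example.

```python
def average_buffer(fill_size: float, fills_per_week: int,
                   steps_per_week: int = 10_080) -> float:
    """Time-averaged buffer level for a crucible refilled at even intervals.

    fill_size is measured in weeks' worth of glass. The level starts at
    fill_size after each refill and drains linearly to zero.
    """
    steps_per_cycle = steps_per_week // fills_per_week
    drain_per_step = fill_size / steps_per_cycle
    level = fill_size
    total = 0.0
    for _ in range(steps_per_cycle):
        # Use the midpoint level across each time step.
        total += level - drain_per_step / 2
        level -= drain_per_step
    return total / steps_per_cycle


weekly = average_buffer(fill_size=1.0, fills_per_week=1)        # full-size crucible
twice_weekly = average_buffer(fill_size=0.5, fills_per_week=2)  # half-size crucible
reduction = 1 - twice_weekly / weekly

print(round(weekly, 3), round(twice_weekly, 3), f"{reduction:.0%}")
```

Because the level falls in a straight line, the average is always half the fill size, so halving the batch halves the glass sitting idle.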

We also have one more option available to us: We can try to delete an entire step in the process. This will, obviously, remove all of its associated costs. If, for instance, it becomes possible to buy premixed glass powder, we no longer need to perform the mixing step ourselves—our input materials can instead go directly to the glass crucibles.

These are the options available to improve the efficiency of a process. So, what does this suggest about what an extremely efficient process looks like?

It’s a process with no buffers. Material moves smoothly from one step to the next without any waiting or delay, and material tied up in the process is minimized.

It’s a process with no variability. The process works every time and always produces exactly what it’s supposed to, at exactly the time when it’s needed. More generally, the output of the process is as close to perfectly predictable as possible.

It’s a process with no unnecessary or wasteful steps. Every step is contributing value, and no steps can be eliminated.

It’s a process with inputs that are as cheap as possible and no wasted outputs. Either all inputs are successfully transformed, or the ancillary outputs are repurposed elsewhere.

It’s a process that acts at as large a scale as the technology and market will allow. Fixed costs are spread over as much output as possible, and the process takes maximum advantage of scale effects.

It’s a process that uses transformation methods that require as few inputs as possible, at the limits of what production technology will allow.

This sort of production process is sometimes called a continuous flow process—it continuously transforms inputs into outputs without any delays, downtime, waiting, unnecessary steps, or unneeded inputs. A steady stream of inputs goes in, and a steady stream of completed products swiftly and smoothly comes out.

One way of thinking about a continuous flow process is that it’s like driving on the highway. In the city, there’s the constant stop-and-start of traffic lights and waiting behind other cars. But on the highway, the flow of cars is consistent and uninterrupted, as one car smoothly follows another.

In practice, it’s often not possible to achieve a true continuous flow process, just as it’s not always possible for traffic to flow perfectly smoothly on the highway. The technology may not allow it, or the size of the market may not justify the cost of the equipment required. There are any number of reasons why continuous flow may not be achievable. But when it is possible, it results in the production of enormous volumes of incredibly inexpensive goods.

To see what a continuous flow process looks like in practice, let’s look at how the light bulb manufacturing process evolved in the century after Edison.

The evolution of light bulb production

In 1891, just over a decade after Edison’s invention, the US was producing 7.5 million incandescent bulbs per year. By the turn of the 20th century, that figure had climbed to 25 million. But production was still largely manual, and the cost of light bulbs, though falling, was still high. In 1907, a 60-watt light bulb cost $1.75, or about $54 in 2022 dollars.

In 1912, Corning introduced the first semiautomatic machine for blowing light bulbs, called the Empire E machine. Though it still required workers to manually gather the molten glass, the machine could produce bulbs at a rate of 400 per hour, over twice as fast as the manual mold method. This was followed by General Electric’s fully automatic Westlake machine, as well as Corning’s Empire F. In 1921, a Westlake machine could manufacture over 1000 bulbs an hour. By the 1930s, improved Westlake machines could produce 5000 bulb blanks an hour.

The Westlake machine, though speedy, was largely a faster, mechanized version of the existing method for hand-blowing bulbs. It consisted of a large rotating drum with a series of iron tube arms mounted to it; as the machine rotated, the arms would lower into a glass furnace, gather a glob of molten glass, and swing it into a mold, after which air would be blown into it to form the bulb. Then, in 1926, a new type of machine for manufacturing bulb blanks was introduced: the Corning ribbon machine. Unlike previous machines, which largely duplicated the manual bulb-blowing process, the ribbon machine used a different mechanism for forming the bulbs. Instead of a blob of glass being gathered on an iron rod, molten glass was poured onto a conveyor belt, which produced a continuous ribbon of molten glass (giving the machine its name). The glass would sag through holes in the belt, forming a bowl shape. As the conveyor moved, a mold attached to a second conveyor below would snap shut around the bowl-shaped glass and air would be blown in from above, forming the shape of the bulb. The formed bulbs were then released and carried away by conveyor belt.

What had previously been a process with many small stops and starts became an uninterrupted, continuous flow. Glass poured onto the conveyor, sagged through the holes, and was repeatedly transformed into finished bulbs, one after the other, without any delays or waiting. Every step was perfectly synchronized.

The ribbon machine was extraordinarily complex and required constant intervention to keep operational. But it could produce bulb blanks in truly staggering volumes. The first ribbon machine produced 16,000 bulbs an hour—over three times faster than the Westlake machines. By 1930, an improved ribbon machine could produce 40,000 bulbs an hour.

The ribbon machine represented the final evolution of incandescent bulb blank production. It produced bulbs in such enormous quantities that by the early 1980s, fewer than 15 ribbon machines were needed for the entire world’s supply of light bulbs. By then, machine improvements had increased the production volume to nearly 120,000 bulbs an hour, or 33 bulbs every second.
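To put the successive production rates side by side, the figures quoted above can be converted to bulbs per second and compared against the manual mold method. The rates below are taken from the text, with "over 1000" and "nearly 120,000" rounded to those values. The labels and helper names are just for this example.

```python
# Bulb blanks per hour, as quoted in the text.
rates_per_hour = {
    "manual mold (three workers)": 150,
    "Empire E, 1912": 400,
    "Westlake, 1921": 1_000,
    "Westlake, 1930s": 5_000,
    "first ribbon machine": 16_000,
    "ribbon machine, 1930": 40_000,
    "ribbon machine, early 1980s": 120_000,
}


def per_second(rate_per_hour: float) -> float:
    return rate_per_hour / 3600


def speedup(rate_per_hour: float, baseline_per_hour: float) -> float:
    return rate_per_hour / baseline_per_hour


baseline = rates_per_hour["manual mold (three workers)"]
for name, rate in rates_per_hour.items():
    print(f"{name:30s} {rate:>8,d}/hr "
          f"{per_second(rate):6.2f}/s {speedup(rate, baseline):6.1f}x")
```

On these figures, the ribbon machines of the early 1980s ran 800 times faster than three workers with a mold.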

Similar improvements took place in the rest of the light bulb manufacturing process, though none were quite so dramatic as the ribbon machine. In the late 19th and early 20th centuries, machines were developed to attach the inner tube to the outside bulb, mount the filament to the tube, make and then insert the lead-in wires, and seal the bulb. Enhanced vacuum pumps were developed to evacuate bulbs much more quickly—Edison’s original pumps took five hours to produce a vacuum in a bulb—and they did so automatically.

By the 1920s, most steps in the bulb manufacturing process had been automated, but they were largely performed by separate machines. Large volumes of in-process bulbs would accumulate between workstations, creating severe storage problems. Starting in 1921, these steps were rearranged into groups, or cells, so one machine would smoothly feed another at synchronized rates. Work in process was greatly reduced, storage requirements fell, and output per worker nearly doubled. By 1930, the major manufacturing innovations were complete, and by 1942, finished bulbs could be produced by a work cell at a rate of 1000 per hour.

As a result of these improvements, the cost of a light bulb plummeted. By 1942, the cost of a 60-watt bulb had fallen to 10 cents. Over this same period, bulb efficiency, the amount of light emitted per watt, also improved, nearly doubling from 1907 to 1942. Combined with cheaper electricity, the cost per lumen dropped 98.5 percent between 1882 and 1942.

Other parts of the light bulb-making process benefited from the same types of improvements: new production technology that required fewer inputs, increased economies of scale, reduced variability, minimization of buffers, and the elimination of unnecessary steps. As with bulb blanks, these processes gradually evolved toward a continuous, uninterrupted transformation of material.

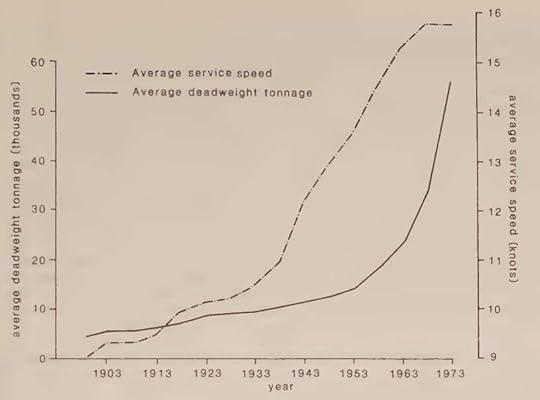

Of course, such gains are not restricted to light bulbs. Any production process that can be described as a series of sequential steps can be made more efficient in the exact same ways. As we’ll see throughout this book, these types of improvements have resulted in increased efficiency in everything from steelmaking to cargo shipping. Over the next several chapters, we’ll take a closer look at each of the five factors of a production process and how they can contribute to increased production efficiency.

The Origins of Efficiency is available now from Amazon, Barnes and Noble, and Bookshop.

November 8, 2025

Reading List 11/8/2025

Grumman X-29.

Welcome to the reading list, a weekly roundup of news and links related to buildings, infrastructure, and industrial technology. This week we look at gathering robot training data, “love letters” sent to home sellers, the Napier Deltic diesel engine, jumps in electricity demand from electric teakettles, and more. Roughly 2/3rds of the reading list is paywalled, so for full access become a paid subscriber.

Robot training

We’ve previously noted that one major bottleneck in making robots more capable is a lack of training data. LLMs have the benefit of the entire internet as a source of training data, but there’s no such pre-existing “movement” dataset that we can use to train robot AI models, and finding or creating a source of robot training data has become an important aspect of robot progress. The LA Times has a good piece about some of the companies working to collect this training data:

In an industrial town in southern India, Naveen Kumar, 28, stands at his desk and starts his job for the day: folding hand towels hundreds of times, as precisely as possible.

He doesn’t work at a hotel; he works for a startup that creates physical data used to train AI.

He mounts a GoPro camera to his forehead and follows a regimented list of hand movements to capture exact point-of-view footage of how a human folds.

That day, he had to pick up each towel from a basket on the right side of his desk, using only his right hand, shake the towel straight using both hands, then fold it neatly three times. Then he had to put each folded towel in the left corner of the desk.

If it takes more than a minute or he misses any steps, he has to start over.

Privatized air traffic control

Because of the ongoing government shutdown, an air traffic controller shortage is forcing a curtailment of airline flights across the US. Air traffic controllers are having to work without pay, and there are about 3500 fewer controllers working than needed. On Thursday, 10% of flights across the country, around 1800 flights, were ordered to be cancelled.

Air traffic control seems like an obvious service for the government to provide, like police or firefighters, but apparently private or semi-private air traffic control systems aren’t all that uncommon. Marginal Revolution has an interesting, short post about Canada’s privatized air traffic control system:

It’s absurd that a mission‑critical service is financed by annual appropriations subject to political failure. We need to remove the politics.

Canada fixed this in 1996 by spinning off air navigation services to NAV CANADA, a private, non‑profit utility funded by user fees, not taxes. Safety regulation stayed with the government; operations moved to a professionally governed, bond‑financed utility with multi‑year budgets. NAV Canada has been instrumental in moving Canada to more accurate and safer satellite-based navigation, rather than relying on ground-based radar as in the US.

“NAV CANADA – in conjunction with the United Kingdom’s NATS – was the first in the world to deploy space-based ADS-B, by implementing it in 2019 over the North Atlantic, the world’s busiest oceanic airspace.

NAV CANADA was also the first air navigation service provider worldwide to implement space-based ADS-B in its domestic airspace.”

Meanwhile, America’s NextGen has delivered a fraction of promised benefits, years late and over budget. As the Office of Inspector General reports:

“Lengthy delays and cost growth have been a recurring feature of FAA’s modernization efforts through the course of NextGen’s over 20-year lifespan. FAA faced significant challenges throughout NextGen’s development and implementation phases that resulted in delaying or reducing benefits and delivering fewer capabilities than expected. While NextGen programs and capabilities have delivered some benefits in the form of more efficient air traffic management and reduced flight delays and airline operating costs, as of December 2024, FAA had achieved only about 16 percent of NextGen’s total expected benefits.”

NPR also ran an article in July this year about the debate around switching to a privatized system in the US, and the pros and cons of a system like Canada’s:

Canada went from paying for air traffic control largely through tax revenue to charging customers a fee based on the weight and distance of a flight.

According to Correia, privatizing air traffic control was the next move for an aviation sector that already had privately-held airplane manufacturers and commercial airlines. “So basically the step that was taken by Canada was to say, well, air traffic control is providing a service to an industry that is already privatized or mostly privatized in many regions of the world,” he said.

Other air traffic control systems that exist outside or partially outside the government include NATS in the United Kingdom, Airservices Australia, Airways New Zealand, DFS in Germany and Skyguide in Switzerland.

A 2017 report by the Congressional Research Service said other countries’ models don’t appear to show “conclusive evidence that any of these models is either superior or inferior to others or to existing government-run air traffic services, including FAA, with respect to productivity, cost-effectiveness, service quality, and safety and security.”

The origins of Airbus

I’ve previously written about the difficulties of competing in the commercial aircraft industry: the costs of developing commercial aircraft are so high (often a significant fraction of the value of the company) and the number of aircraft sold each year so small, that a few bad bets, such as a program that goes over budget or sells much less than anticipated, can be ruinous.

Given these difficulties, and the fact that many companies (Lockheed, Douglas, Convair) have been forced from the field, it’s a little surprising who ended up being competitive, and who didn’t. Japan, despite overwhelming many US industries with inexpensive, high-quality manufacturing in the second half of the 20th century, never fielded a commercial airliner (though not for lack of trying). South Korea didn’t either. Instead, the international competitors came from Brazil (Embraer), and a consortium of European countries (Airbus). Works in Progress has a good piece on why Airbus was so successful, when so many other similar European efforts failed:

Europe is a graveyard of failed national champions. They span from the glamorous Concorde to obscure ventures like pan-European computer consortium Unidata or notorious Franco-German search engine Quaero.

Airbus is the rare success story. European governments pooled resources and subsidized their champion aggressively to face down a titan of American capitalism in a strategically vital sector. Why did Airbus succeed when so many similar initiatives crashed and burned?

Airbus prevailed because it was the least European version of a European industrial strategy project ever. It put its customer first, was uninterested in being seen as European, had leadership willing to risk political blowback in the pursuit of a good product, and operated in a unique industry.

…Roger Béteille, who led the A300 program, probably bears more responsibility for Airbus’s early success than anyone else. Béteille wasn’t interested in building an inferior European Boeing copy. Instead, he invested significant time in getting to know his potential customers and what they needed. This led to Airbus quickly tossing the original design for a 300-seat A300, in favor of a 225-250 seater, when it became clear that Air France and Lufthansa wanted a smaller product.

The revised A300B would prove much cheaper to develop, in part because it allowed the consortium to dispense with the expensive Rolls Royce engine in favour of a cheaper American alternative. In response, the UK exited the project, only to later return with a lower ownership stake.

This willingness to risk political blowback and avoid petty chauvinism in equipment choice was rare in industrial strategy.

Béteille went one step further. He designated English the official language of the project, instead of the usual mixture of languages that characterised European projects, and forbade the use of metric measurements to make it easier to sell into the US market.

Air travel computational complexity

Also on the subject of commercial air travel, air travel reservation systems, like early spell checkers, are among those pieces of software that are much more complicated than they might first appear. One of the first major air travel reservation software systems, SABRE, was built by IBM using the technology from the recently completed SAGE defense system (one of the most expensive megaprojects ever built). And these 2003 lecture slides from an MIT course on artificial intelligence talk about some of the computational difficulties involved in booking flights:

At 30,000,000 flights per year, standard algorithms like Dijkstra’s are perfectly capable of finding the shortest path. However, as with any well-connected graph, the number of possible paths grows exponentially with the duration or length one considers. Just for San Francisco to Boston, arriving the same day, there are close to 30,000 flight combinations, more flying from east to west (because of the longer day) or if one considers neighboring airports. Most of these paths are of length 2 or 3 (the ten or so 6-hour non-stops don’t visually register on the chart to the right). For a traveler willing to arrive the next day the number of possibilities more than squares, to more than 1 billion one-way paths. And that’s for two airports that are relatively close. Considering international airport pairs where the shortest route may be 5 or 6 flights there may be more than 10^15 options within a small factor of the optimal.

One important consequence of these numbers is that there is no way to enumerate all the plausible one-way flight combinations for many queries, and the (approximately squared) number of round-trip flight combinations makes it impossible to explicitly consider, or present, all options that a traveler might be interested in for almost all queries.
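To get a feel for how quickly these counts grow, here is a minimal Python sketch that counts one-way itineraries in a toy network. Everything in it is an assumption made for illustration: the `HUB` airports, the hourly two-hour flights between every pair of airports, and the 30-minute minimum connection time are all hypothetical, and the counting routine is a plain brute-force search, not the method described in the slides.

```python
from collections import defaultdict


def count_itineraries(flights, origin, destination, max_legs, min_connect=30):
    """Count one-way itineraries that use at most `max_legs` flights.

    flights: iterable of (from_airport, to_airport, depart_min, arrive_min),
    with times in minutes after midnight. `min_connect` is an assumed
    minimum connection time, not a figure from the slides.
    """
    by_origin = defaultdict(list)
    for flight in flights:
        by_origin[flight[0]].append(flight)

    def extend(airport, earliest_departure, legs_left, visited):
        if legs_left == 0:
            return 0
        total = 0
        for _, dest, depart, arrive in by_origin[airport]:
            # Skip flights that leave too early or revisit an airport.
            if depart < earliest_departure or dest in visited:
                continue
            if dest == destination:
                total += 1  # a complete itinerary
            else:
                total += extend(dest, arrive + min_connect,
                                legs_left - 1, visited | {dest})
        return total

    return extend(origin, 0, max_legs, frozenset([origin]))


def toy_schedule(hubs, departures_per_route):
    """Hypothetical schedule: hourly 2-hour flights between every airport pair."""
    airports = ["SFO", "BOS"] + [f"HUB{i}" for i in range(hubs)]
    flights = []
    for a in airports:
        for b in airports:
            if a == b:
                continue
            for k in range(departures_per_route):
                depart = 360 + 60 * k  # first departure at 6:00
                flights.append((a, b, depart, depart + 120))
    return flights


if __name__ == "__main__":
    for hubs in (2, 4, 8):
        schedule = toy_schedule(hubs, departures_per_route=12)
        for legs in (1, 2, 3):
            n = count_itineraries(schedule, "SFO", "BOS", legs)
            print(f"{hubs} hubs, up to {legs} legs: {n} itineraries")
```

Even in this tiny made-up network, each extra hub or allowed connection multiplies the number of itineraries, which is the same effect the slides describe at real-world scale.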

November 6, 2025

Strap Rail

The early history of the United States runs in parallel with the first years of the railroad. A small prototype of a steam-powered locomotive was first built by William Murdoch in 1784, just a year after the Treaty of Paris ended the Revolutionary War. The first working steam locomotive, Richard Trevithick’s Coalbrookdale Locomotive, was built in 1802, and the first public steam railway in the world, the Stockton and Darlington Railway, opened in 1825 with George Stephenson’s Locomotion No. 1 hauling its first train.

Early railroad development largely took place in the UK (Murdoch, Trevithick, and Stephenson all built their locomotives there), and early US locomotives were British imports. However, British locomotives were quickly found to have difficulties running on American railroads. British railroads were “models of a civil engineering enterprise”, having:

…carefully graded roadbeds, substantial tracks, and grand viaducts and tunnels to overcome natural obstacles. Easy grades and generous curves were the rule. Since capital was plentiful, distances short, and traffic density high, the British could afford to build splendid railways.

All this engineering came at a cost: early British railroads cost $179,000 per mile to build (though part of this was the cost of land). But because of Britain’s high population density, traffic was high, routes could be short, and so the costs could be recovered.

Conditions in the US were far different. Distances were large, populations were lower, and financing for large engineering projects was in short supply. As a result, US railroads evolved differently than British ones. Rather than being straight, American railroads tended to have winding routes that followed the curve of the land, and avoided tunnels, grading, or expensive civil engineering works. Instead of expensive stone bridges, US railroads used wooden trestles. Locomotives in the US needed to cope with steeper grades and much less robust track than in Britain, and thus needed to be designed differently.

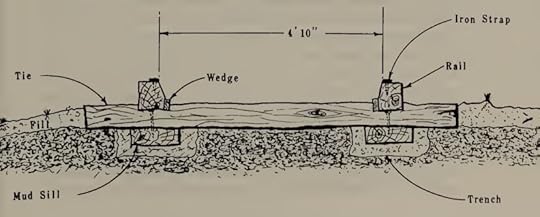

One interesting example of the different railroad conditions in the US and Britain is a railroad technology that was briefly popular for early US railroads: the strap rail track. British railroads were built with solid iron rails which, while effective, were expensive. Strap rail, by contrast, was built by attaching a thin plate of iron to the top of a piece of timber. This greatly reduced the amount of iron required to build railroad track — while British track required 91 tons of iron per mile, strap rail required just 25 tons.

Strap rail, via Material Culture of the Wooden Age.

This style of construction was far cheaper than British iron rails, just $20,000-30,000 per mile, 1/6th to 1/9th the cost of British rail. Building strap rail substituted wood, which was widely available, for comparatively precious iron (which was in short supply in the early US). By 1840, it’s estimated that 2/3rds of the 3,000 miles of railway in the US was strap rail track.

But this thrift wasn’t without consequence. While cheaper to build than solid iron tracks, strap rail lines decayed quickly, and had incredibly high maintenance costs, over twice as much per mile as iron rail (Material Culture of the Wooden Age, 192). Rather than being built atop crushed rock (which would allow for proper drainage), strap rail lines were typically placed directly onto the ground. Mold, insects, and moisture quickly went to work on the wooden rails, and after a few seasons they became “a hopeless ruin”. And strap rail lines were dangerous: the repeated impact of the locomotive wheels could cause the iron straps to curl up at the ends of the timbers, in some cases derailing trains.

Because of these various deficiencies, strap rail became less and less popular for commercial railroads in the US. In 1847, New York banned the use of strap rail for public railroads, and gave existing railways three years to convert to iron rails. After 1850, new commercial installation of strap rail was rare, and by 1860 most existing mileage had been replaced with solid iron rails.

However, strap rail continued to see some use in other areas. Horse-drawn streetcars, which began to appear in US cities in the 1850s, often used strap rail tracks. The comparative lightness of the streetcars made the downsides of strap rail less acute, and strap rail was a popular choice for streetcar lines until electric streetcars began to replace horse-drawn cars in the 1880s. Strap rail also found occasional use on private, industrial railways, and several such lines were built in the 1860s and 1870s. Here too, however, strap rail eventually fell out of fashion.

An even cheaper type of wooden railway was occasionally built for logging railroads to transport felled timbers: the pole road. A pole road was nothing more than two rows of logs, laid parallel on the ground, and tapered so that one log could fit into the next. “Locomotives”, little more than modified agricultural steam tractors, would ride on top of these poles on flanged wheels that wrapped around the poles. Like strap rail, pole roads were inexpensive to build but decayed incredibly quickly, and by the end of the 19th century had become increasingly rare.

Pole road in Alabama in 1902, via Material Culture of the Wooden Age.

The history of technological development emerges from a complex interplay between people working to solve a particular problem (like moving travelers and goods from place to place) and the terrain in which that problem exists. The particular constraints that existed in the early 19th century US (little capital, limited access to iron, low population density) shaped how railway technology developed here, producing technological arcs like the rise and fall of the strap railroad.

November 1, 2025

Reading List 11/01/2025

Space shuttle Enterprise being towed across Antelope Valley in 1977. Via Wikipedia.