Vineeth G. Nair's Blog

November 6, 2017

Hello world!

Welcome to WordPress. This is your first post. Edit or delete it, then start writing!

February 25, 2016

Get 50% Discount on E Book- Getting Started With Beautiful Soup

Hi All,

You can get a 50% discount on my book, Getting Started with Beautiful Soup, using the discount code below. The discount code is valid only on ebooks.

Discount Code : GSWBS5

How to redeem

1. Go to link at packtpub.

2. Add Ebook to cart

3. Add discount code during checkout.

Happy Scraping

December 29, 2015

Only $5 for Getting Started with Beautiful Soup ebook till 8th Jan 2016

Hi All,

The ebook version of my book, Getting Started with Beautiful Soup, is on sale for only $5 until 8th Jan 2016.

This has been included as part of Packtpub’s $5 Skill Up Year campaign. More details are available at the link below.

https://www.packtpub.com/packt/five-dollar-skillup/report

Hurry, grab the ebook before the offer expires.

December 9, 2015

Getting All Text From Web Page Using Beautiful Soup

In this blog post, I will explain how to get only the text from a web page using Beautiful Soup 4. Beautiful Soup 4 provides the get_text() method, which returns all the text in a web page. Consider my own blog, kochi-coders.com. If I want to get all the text within this blog, I can use the code below.

from bs4 import BeautifulSoup

import urllib2

url="http://www.kochi-coders.com"

page = urllib2.urlopen(url)

soup = BeautifulSoup(page)

In the above code we have created the soup object based on the URL http://www.kochi-coders.com. To get all the text stored within the page we can use the below code.

all_texts = soup.get_text()

print all_texts

In the above code, get_text() is used to get all the text content within the page.

Sample Output

Getting All Text From Web Page Using Beautiful Soup « kochi-coders.com

<script>

( function() {

    var query = document.location.search;

    if ( query && query.indexOf( 'preview=true' ) !== -1 ) {

        window.name = 'wp-preview-387';

    }

    if ( window.addEventListener ) {

        window.addEventListener( 'unload', function() { window.name = ''; }, false );

    }

}());

</script>

But wait, do we see script tags and their contents in the output?

Yes, the JavaScript is present. This is because get_text() also treats the contents of <script> tags as text. extract() can be used to delete a particular tag and all its contents, so removing the JavaScript and printing only the text within the document can be achieved using the following:

[x.extract() for x in soup.find_all('script')]

The previous line of code removes all the script elements from the document. After this, print(soup.get_text()) will print only the text stored within the page.
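The removal step above can be sketched in Python 3 as a small helper. This is only an illustration under some assumptions: SAMPLE_HTML is a made-up page standing in for the downloaded blog HTML, visible_text is a hypothetical helper name, and the built-in "html.parser" is chosen so that no extra parser needs to be installed. It also drops style tags, which goes slightly beyond what the post does.

```python
from bs4 import BeautifulSoup

# Hypothetical sample page; it stands in for the HTML downloaded from the blog.
SAMPLE_HTML = """
<html><head><title>Sample page</title>
<script>window.name = 'wp-preview';</script>
<style>body { color: black; }</style>
</head><body><p>Happy Scraping</p></body></html>
"""


def visible_text(html):
    """Return the page text with <script> and <style> contents removed."""
    soup = BeautifulSoup(html, "html.parser")
    for tag in soup.find_all(["script", "style"]):
        tag.extract()  # detach the tag and everything inside it
    # separator and strip tidy up the whitespace left between elements
    return soup.get_text(separator="\n", strip=True)


text = visible_text(SAMPLE_HTML)
print(text)
```

A plain for loop is used instead of the list comprehension because extract() is called only for its side effect.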

Happy Scraping

October 21, 2015

Get 50% Discount on E Book- Getting Started With Beautiful Soup

Hi All,

You can get a 50% discount on my book, Getting Started with Beautiful Soup, using the discount code below. The discount code is valid until November 9, 2015, and only on ebooks.

Discount Code : fyieZ7W

How to redeem

1. Go to link at packtpub.

2. Add Ebook to cart

3. Add discount code during checkout.

Happy Scraping

December 22, 2014

Get a Free E Book on Getting Started with Beautiful Soup

Hi All

You can get a free copy of my book, Getting Started With Beautiful Soup, on 12/23/2014 from

https://www.packtpub.com/packt/offers/christmas-countdown

May 5, 2014

Scraping a JavaScript enabled Web Page, using Beautiful Soup and PhantomJS

In the previous posts, I have shown ways of scraping web pages using Beautiful Soup. Beautiful Soup is a brilliant HTML parser and makes parsing HTML easy. I normally use the urllib2 module to open a URL and then use the resulting handle to create a Beautiful Soup object. The script I normally use is as below.

import urllib2

from bs4 import BeautifulSoup

url = "http://www.regulations.gov/#!docketBr..."

page = urllib2.urlopen(url)

soup = BeautifulSoup(page)

The above approach of using the urllib2 module to open the web page and then using BeautifulSoup works like a charm if the page content is plain HTML, without any content being loaded through JavaScript. But recently I came across a website (regulations.gov) that requires JavaScript. This meant that the normal way of opening a web page to get its content won’t work. The website specifically checks whether JavaScript is enabled and renders the page based on the JavaScript that runs after the page loads. I used urllib2 to open the web page, but the response contained only the message “You need to have javascript enabled to view this page”. I was clueless about how to scrape this web page. I searched a lot and there were a lot of possibilities, among them Selenium and PhantomJS. PhantomJS impressed me as it is a headless browser and I don’t need to have extra drivers or web browsers installed, as is the case with Selenium. So this was the plan.

1. Use PhantomJS to open the page.

2. Save it as a local file using the PhantomJS File System Module API.

3. Later, use this local file handle to create a BeautifulSoup object and then parse the page.

PhantomJs Script to load the Web Page

Below is the script I used to load the web page using PhantomJS and save it as a local file.

var page = require('webpage').create();

var fs = require('fs'); // File System Module

var system = require('system'); // System Module, provides system.args

var args = system.args; // command-line arguments (not used below)

var output = './temp_htmls/test1.html'; // path for saving the local file

page.open('http://www.regulations.gov/#!docketBr...', function() { // open the page

    fs.write(output, page.content, 'w'); // write the rendered page to the local file using page.content

    phantom.exit(); // exit PhantomJS

});

Here we have opened the page using PhantomJS and then saved it locally. On inspecting the content of the file, we can see that the JavaScript was run and there is no error message about JavaScript being required. We can then open the local file and scrape it using the code below.

from bs4 import BeautifulSoup as bs

page_name="./temp_htmls/test1.html"

local_page = open(page_name,"r")

soup = bs(local_page,"lxml")
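The last step of the plan can also be wrapped in a small helper that closes the file handle once parsing is done. The sketch below is illustrative only: soup_from_file is a hypothetical helper name, the temporary file stands in for the page that PhantomJS would have written, and "html.parser" is used as the default instead of "lxml" so that the example runs without an extra dependency.

```python
import os
import tempfile

from bs4 import BeautifulSoup


def soup_from_file(path, parser="html.parser"):
    """Parse a locally saved HTML file and close the handle afterwards."""
    with open(path, "r", encoding="utf-8") as handle:
        return BeautifulSoup(handle, parser)


# Hypothetical stand-in for the file written by the PhantomJS script.
with tempfile.TemporaryDirectory() as tmp_dir:
    saved_page = os.path.join(tmp_dir, "test1.html")
    with open(saved_page, "w", encoding="utf-8") as out:
        out.write("<html><body><h1>Docket</h1></body></html>")
    soup = soup_from_file(saved_page)
    heading = soup.h1.string

print(heading)
```

Passing parser="lxml" to the helper would match the original snippet, provided lxml is installed.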

If you want more examples and sample code, I have authored a book on Beautiful Soup 4, and you can find more details here.

Happy Scraping

March 31, 2014

Your Chance to win a Free Copy of Getting started with Beautiful Soup {“is_closed”:True}

Packt have kindly provided 3 free eBook copies of my newly published book, Getting Started with Beautiful Soup.

How to Enter?

All you need to do is head on over to the book page (http://www.packtpub.com/getting-started-with-beautiful-soup/book), look through the product description of the book, and drop a line via the comments below this post to let us know what interests you the most about this book. It’s that simple.

The winners will be chosen from the comments below and contacted by email so please use a valid email address when you post your comment.

The contest ends when I run out of free copies to give away. Good luck!

Update 1 ( 5th April 2014 )

—————

Packtpub has provided 2 more copies to give away, which means 5 copies to be won :-). The contest will end soon.

Update 2 (7th April 2014 )

———-

The first winner is Cm. Congratulations! Someone from Packt will be in contact with you shortly.

Update 3 ( 11th April 2014)

The second winner is Elizabeth. Congratulations! Someone from Packt will be in contact with you shortly.

Update 4 ( 16th April 2014)

The third winner is Joel. Congratulations! Someone from Packt will be in contact with you shortly.

Update 5 ( 16th April 2014)

The Fourth winner is Graham O’ Malley. Congratulations! Someone from Packt will be in contact with you shortly.

Update 6 ( 21st April 2014)

The Final winner is Nick Fiorentini. Congratulations! Someone from Packt will be in contact with you shortly.

The contest is now closed. Thanks, everyone, for participating.

March 10, 2014

Let’s Scrape the page, Using Beautiful Soup 4

I was thinking about porting my blog post about scraping a website using Beautiful Soup from Python 2.7 and Beautiful Soup 3 to Python 3 and Beautiful Soup 4. Thanks to Steve for his code, which made it easy for me. In this blog post I will be scraping the same website using Beautiful Soup 4.

Task : Extract all U.S. university names and URLs from the University of Texas website in CSV (comma-separated values) format.

Dependencies : Python and Beautiful Soup 4

Script with Explanation

Importing Beautiful Soup 4

from bs4 import BeautifulSoup

This is a major difference between Beautiful Soup 3 and 4. In 3 it was just

from BeautifulSoup import BeautifulSoup

In Beautiful Soup 4 the import comes from the bs4 package instead.

Next we will import the urllib.request module for opening the URL

import urllib.request

We now need to open the page at the above Url.

url="http://www.utexas.edu/world/univ/alpha/"

page = urllib.request.urlopen(url)

Creating the Soup

soup = BeautifulSoup(page.read())

Finding the pattern in the page

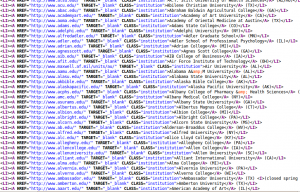

Web scraping will be effective only if we can find patterns used in the websites for the contents. For example in the university of texas website if you view the source of the page then you can see that all university names have a common format like as shown below in the screeshshot

From the pattern we can see that all universities will be within tag with css class institution. So we need to find all the tags whose class is institution to find all the universities. We can use Beautiful Soup 4 find_all() method to accomplish this.

universities=soup.find_all('a',class_='institution')

In the above line of code, we used the find_all() method in Beautiful Soup 4 to find all the universities, that is, all the <a> tags with the class institution. In Beautiful Soup 4 we can use the keyword argument class_ to search based on CSS classes. We can iterate over each university by using the code below

for university in universities:

    print(university['href']+","+university.string)

In the above page, each university name is stored as the string of the <a> tag and each URL is stored as the href attribute of the <a> tag. So in the above code, university.string gives us each university name and university['href'] gives us the university URL.

Putting it all together

The script for scraping the University of Texas website using Beautiful Soup 4 is as below.

from bs4 import BeautifulSoup

import urllib.request

url="http://www.utexas.edu/world/univ/alpha/"

page = urllib.request.urlopen(url)

soup = BeautifulSoup(page.read())

universities=soup.find_all('a',class_='institution')

for university in universities:

    print(university['href']+","+university.string)
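Since the task asks for CSV output, one possible variation is to let Python's csv module handle the quoting, which matters when a university name itself contains a comma. The sketch below is not the script from this post: SAMPLE_HTML is made-up markup that imitates the pattern described above, universities_to_csv is a hypothetical helper name, and get_text() is swapped in for .string so that a link with nested tags does not yield None.

```python
import csv
import io

from bs4 import BeautifulSoup

# Hypothetical markup imitating the pattern described above.
SAMPLE_HTML = """
<ul>
<li><a class="institution" href="http://www.example.edu/">Example University</a></li>
<li><a class="institution" href="http://www.example.org/">Example College, Main Campus</a></li>
<li><a href="http://www.example.com/">Not a university</a></li>
</ul>
"""


def universities_to_csv(html):
    """Return CSV text with one url,name row per <a class="institution"> tag."""
    soup = BeautifulSoup(html, "html.parser")
    buffer = io.StringIO()
    writer = csv.writer(buffer)  # quotes fields that contain commas
    writer.writerow(["url", "name"])
    for link in soup.find_all("a", class_="institution"):
        writer.writerow([link["href"], link.get_text(strip=True)])
    return buffer.getvalue()


csv_text = universities_to_csv(SAMPLE_HTML)
print(csv_text)
```

To save the result to disk, the same writer can be pointed at a file opened with newline="" instead of the in-memory buffer.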

If you want more examples and sample code, I have authored a book on Beautiful Soup 4, and you can find more details here.

Update 1:

You have a chance to win free copies of Getting Started with Beautiful Soup by Packt Publishing. Please follow the instructions in this post.

Happy Scraping