My Thoughts on AI

Every summer, the readers in the Susan’s FANetties FB group have a Q&A in which they submit questions to me, and I, usually quite reserved throughout the year, hold forth at length in my answers. This year, the 11th, had a particularly great collection of questions that really made me think.

One of them got me so spun up I wrote a whole angry essay in response (angry at the topic, not at the questioner–it’s an important question), complete with lots of links to sources, not to mention LOTS of swearing, and it’s just too much for FB to handle. So I’m publishing it here, available for anybody who wants to read it.

So buckle in, and let’s talk about AI.

Q: As publishing evolves, authors are exploring AI tools for research or writing. Have you considered using AI in your process? Why or why not?

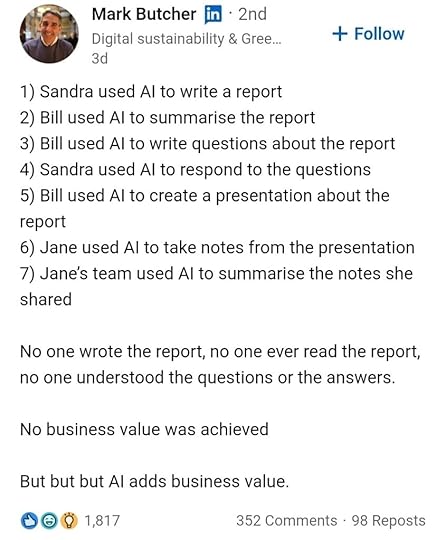

A: I think about this shit a lot. It’s like a constant drumbeat at the back of my brain, occasionally surging forward in a crescendo. It touches both my careers, and I have BIG OPINIONS. I feel strongly enough that I’m not going to try to make space for the other side of the debate. I think the other side of the debate is unethical at best and downright world-killing at worst, so … yeah. If you use generative AI, you probably don’t want to read my thoughts about the use of generative AI. I’m not going to be nice about it.

Before I get into the details here, the tl;dr of this answer is: ABSOLUTELY FUCKING NOT. BECAUSE IT FUCKING SUCKS MUDDY DOG BALLS.

Design by Kim Hu. You can find a purchase link at the end of the post. (And yes, I own this shirt)

There’s your warning.

Now I’m gonna get real honest.

*cracks knuckles* *cracks neck*

I’m going to organize this manifesto into separate parts, taking on each of the big, big issues I have with GenAI. In case the distinction isn’t known to everyone: broadly speaking, generative AI is the stuff that purports to create something from a prompt. Assistive AI is like what Siri’s been doing for more than a decade, the tools Photoshop’s had for almost as long, even predictive text—stuff that’s not replacing human work so much as making human work a little easier. That’s an important distinction.

BTW: when you hear/see someone arguing that GenAI is the same thing as using a calculator in math problems, the correct analog is SIRI, NOT ChatGPT. Siri is *assistive* AI, like a calculator is assistive to the math the human brain is actually doing.

To use a calculator, you have to know the numbers to enter. You have to do all the actual thinking yourself to arrive at the numbers you enter. A calculator is streamlining the most basic function of the work. GenAI, on the other hand, is purporting to do the actual thinking for you.

GenAI can be used like a calculator, and when it is, it can simply be a similarly supportive tool. But its creators are pushing it as a better thinker and creator than the human brain, and people are mainly using it so they don’t have to do the thinking and creation. That’s very, very bad for humanity.

I don’t use Siri, because I’d always rather type than talk, even at my own phone, but I don’t want Siri dead. ChatGPT, on the other hand, deserves a firing squad. And then burn the bones and salt the earth. All its stupid competitors, too.

And do not get me started on so-called “agentic” AI, or we will be here all day. GRRR.

Environmental Impact: This one should be the most neutral regardless of whether you think the products of GenAI are a good or an evil in the world, because this is simply fact. GenAI, in particular LLMs (large language models, which is what ChatGPT and such are), has an absolutely ENORMOUS energy footprint. According to an MIT study, a single ChatGPT query uses five times the energy of a regular Google search. Multiply that by how often you use Google, how many people around the world are searching the web at any given moment, and imagine what happens if (when; sigh) GenAI replaces Google and becomes the world’s default. The most basic function we ask GenAI to do is five times more destructive than what we’ve been using to accomplish the exact same task, in seconds, for decades now. Imagine how much energy each request to produce a document or collate data, much more demanding tasks, takes. And that’s just part of its overall energy gluttony and climate assault. The xAI supercomputing facility in Memphis is poisoning the people who breathe the air around it. And these facilities use a colossally dangerous amount of unreplenishable water as well.

We’re 25 years—one generation—from 2050, the year climate scientists have identified as the point at which we will be in a full-blown climate disaster, and the fuckheads crowing about GenAI are apparently like, “Wouldn’t it be cool if we killed the earth by 2030 instead?”

I wish every last one of those moronic techbros itching powder in every pair of underwear they ever put on for the rest of their lives.

Creative Impact: As an English professor and a writer, this one gets me where I live. I have a whole anti-AI lecture to give students at the beginning of the semester (with a lot of what I’m saying here), because GenAI is a fucking scourge in schools right now, and the bullshit ways techbros and pro-AI people talk about it make me want to puke. And then throat-punch somebody.

THE IDEA IS NOT THE ART. THE EXECUTION OF THE IDEA IS THE ART. COME ON! Telling Midjourney to make an image with elements you’ve (I’m using the general ‘you’ here) specified is NOT you creating that image. That is Midjourney finding versions of those elements out in the aether, and STEALING THEM FROM ACTUAL ARTISTS to Frankenstein the image you said you wanted. The person who typed in the prompt IS NOT AN ARTIST. People who use GenAI to “create content” are more analogous to a shitty corporate executive, demanding work from somebody else and taking all the credit. In fact it’s worse, because at least the corporate drone getting their work claimed by their boss is drawing some kind of payment for the work.

It’s an absurd assertion that it’s “elitist” or “gatekeeping” to say you gotta do the fucking work and write the fucking book your fucking self, you gotta put the paint on the canvas, you gotta design the image, you gotta carve the marble. That’s not gatekeeping. It’s art. Only Michelangelo, through the lens of his genius and talent, saw the David in a block of marble. Only the very human man himself could have created that masterpiece. Perhaps a machine could carve a block of marble to look like a man, but what would result would be no masterpiece.

The David, one of the world’s greatest works of art. Taken during our 2022 visit to Florence.

We negate the value and significance of art itself when we call what a machine produces “art.” There is a reason we consider art one of the humanities.

Few have the talent of Michelangelo, but any human can make art. Art is self-expression. Full stop. Bad art made by human hands is still art. Not all art can be successfully monetized, but that doesn’t have anything to do with talent OR artistic expression. Van Gogh struggled to pay for food and shelter all his short life, after all, and sold very few works in his lifetime, and he’s now almost universally regarded as one of the greatest artists the world has known.

Money has literally NOTHING to do with art itself. It has lots to do with having access to the time and supplies to make it, of course (privilege is very much in play there), but whether a piece has any monetary value is irrelevant to the art itself.

People who use GenAI want an easy button. But NOBODY gets an easy button to create or to learn. You might have the resources to do it more readily and often than someone who’s working 16 hours a day to keep the rent paid, but you still gotta do the work, and so does the tired double-shift worker. What comes from the easy button will never be art and should never be allowed to replace what humans create.

So yeah, I make a teensy bit of room for some of the good GenAI might be able to do in science and medicine (I’m not informed enough about that yet to assert a strong opinion), but consumer-facing GenAI is nothing but a plagiarism machine, full stop. Plagiarism is theft. And the AI bros aren’t even pretending it’s not. They’re arguing in court that they should be allowed to steal, because of how much money can be made when they do.

That’s the thing that seems to get lost in the constant discourse about GenAI and how much money a very few people who are already richer than the rest of us combined can make: productivity is not the be-all, end-all of life. Productivity is not a moral or ethical value, and it should not define human existence. Most people’s employment is not a calling, not a thing they do for love. For most people, work is no more or less than a necessary burden. The meaning of life cannot be that we are simply cogs keeping the billionaire-support machine running.

It’s not the destination, after all, it’s the journey. The learning and experience we gain before we achieve a thing is greater than the thing itself. We are shaped by our experiences. GenAI is all about the destination—and at that destination, GenAI gives us a hologram of reality, not the actual thing.

You can really tell that the people running the tech world have no respect whatsoever for humanity—or for the study of it, what we call the humanities. The latest issue of The New Yorker includes a lame-brained defense of AI, in which the author describes AI’s ability to summarize writing, and uses a passage from the opening to Charles Dickens’ classic novel Bleak House as an example: “Gas looming through the fog in divers places in the streets, much as the sun may, from spongey fields, be seen to loom by husbandman and ploughboy.”

Declaring Dickens’ writing “muddy and semantically torturous,” the New Yorker writer suggests this AI summary as an improvement: “Gas lamps glow dimly through the fog at various places throughout the streets, much like how the sun might appear to farmers working in misty fields.”

Now, I’m not a particular fan of Dickens, and I am particularly not a fan of Bleak House. However, the difference between the opening Dickens wrote and the summary AI cobbled together from stolen bits of the internet is the difference between making art and stringing words together.

There is rhythm in Dickens’ passage, there is semantic pattern and balance, there is mood. The summary is nothing more than a report. It’s not even description because it doesn’t carry any emotion or mood. It’s just “It’s foggy, there’s a few street lamps, looks like sun in the country. Anyway.”

And HOLY SHIT, the point of art is not merely to convey information, and not merely to entertain. It’s not mere “content” to be consumed. The point of art IS TO MAKE YOU FEEL, to have something to say, to put you in a character’s (or author’s) head and share an experience. That summary bleeds all the feeling straight out of Dickens’ art.

And HOLY SHIRTBALLS, the point of studying art and literature is not simply to get the plot points. It’s not a puzzle to be solved. We study art to appreciate it, to relate to it, to understand its context in the human story, and to understand how the language achieves such a lofty goal as communion through time and space between writer, story, reader, and the world.

Very few writers are trying to make the themes and emotions of their stories difficult to unearth. They’re not hiding what they want to say. They’re trying to pull you into their brain and show you what they see and feel so that you see and feel it too. They want you to get EVERYTHING they’re saying and seeing and feeling and thinking. They want you to live the story.

I should have used “we” instead of “they” there.

When someone asserts that AI can be a useful tool for students because it takes difficult prose and summarizes it in simple language, when they also insist that AI can write books and movies, can do the work of human artists, what they’re really saying is that art doesn’t matter. And they’re also COMPLETELY MISSING THE POINT of humanities education.

We don’t assign Dickens or Shakespeare or any other writer whose use of language is less colloquial and therefore more difficult because we’re trying to torment our students or because we don’t think there are great writers who use contemporary diction. We assign them because 1) they are great writers, so important to our body of literature they are part of our modern cultural idiom, here in the “western” world at least, and familiarity with their work is part of the context of understanding contemporary works, and 2) learning to interpret and analyze these difficult texts builds the skills to be able to interpret and analyze any text. Oh, and 3) because to understand our own humanity, we must understand the humanity that came before us.

It’s artists who interpret human history. That has been true since the first cave painting.

Moreover, we don’t assign essays because essay-writing itself is so vital to daily life. That’s true for some, those who become professional writers, but that’s not most people. We assign essays because grappling with your own ideas is important—and not just the opinions you’ve osmosed from parents, and media, and friends, and randos online, but ideas you build through research—real research, conducted by experts and reviewed by other experts. Learning how to truly evaluate sources—not just find junk online that already agrees with you, but find the people who are doing real study so you can test your opinions against facts and empirical observation and expert analysis. Academic writing and research shows students that the opinion of someone who’s studied the topic in depth and for years, under the rigorous structure of academic process, is a better opinion than some dude with a podcast, and builds the skills to know which dude with a podcast has actually done the work and which bozo is spewing vibes and toeing the line of his sponsors’ interests.

In good research, sound research, we understand, analyze, and evaluate others’ arguments and then synthesize what we’ve learned with our own ideas so that our ideas come through that process, maybe changed, but certainly stronger, grounded in logic and harder to rebut.

Doing that thinking and expression in writing is important. In writing, you can practice refining your words, find the organization and style that brings the most impact, understand whether your audience is already on your side or needs convincing, and which approach is most likely to gain their attention and respect.

It’s not about the essay. It’s about the work you had to do, and the learning that happened while you did it, before you even began putting words on a page. These are crucial skills of critical thinking that transcend college requirements.

GenAI strips away all that learning. And good god, without it, the apocalypse we’re heading for is Wall-E.

Economic Impact: The first and easiest point to make here is that stealing art from writers and artists, and anybody else who has “IP” online, is stealing money from them. Art isn’t about monetary value, but we live in a capitalist society, and artists have the same human needs of food and shelter that, in our society, we must pay for. If anybody is going to make money from art, the list had damn well better start with the artist.

During the SAG-AFTRA strike a few years ago, one of the main sticking points was studios’ insistence on taking the rights, in perpetuity, to the voice and image of actors once they were under contract for any project. Which would mean, of course, that studios could pay an actor once and never again, forever. Just use the AI-generated likeness in any future projects, forever, without any compensation to, or even consent from, the actor. Most actors aren’t Brad Pitt. Most actors barely earn enough from acting to live, if that. It would have destroyed the entire entertainment industry as we know it and replaced it, in a very few years, with nothing but AI-generated slop.

That’s important because we all turn to art for comfort, for community, for entertainment, for emotional resonance, for inspiration, for purpose. For humanity.

But the studio heads would’ve made big, big bucks for a while (as if they don’t already), and that’s all they give a shit about. Thankfully, they eventually conceded the AI fight in order to come to a labor agreement. But they will try again next time, no doubt.

This gets to a bigger point about the economic impact of GenAI. Payroll is the most expensive item on a company’s budget. Execs are already doing everything they can to keep payroll costs low (e.g., pay people as little as they’ll tolerate without uprising). All this talk of GenAI’s “productivity potential” is heading down a very scary road, where whole sectors of human jobs disappear, replaced by AI. The very rich will have everything and the rest of us will be scraping their leavings off the street. Like pre-1900s levels of wealth disparity. Just last week, Salesforce reported that up to 50% of its work was being completed by AI, and it had laid off 1,000 human workers.

It is also singlehandedly striking the death blow for the critically injured profession of journalism. The internet itself shot journalism in the head, and this new scourge is kicking the plug from the wall. A report released a few days ago shows that the way search engines like Google use “AI overviews” is tanking the “clickthrough” rate. In other words, people aren’t clicking links to the actual information when they google something. They’re simply reading the AI summary at the top of the page and calling their question answered. (BTW, for other rebels like me, you can add “-ai” to the end of your search term, and that nasty overview will go away.)

Also? GenAI is bad at what it says it can do. It lies all the time, it’s wrong all the time, and when it’s challenged, it acts like a petulant toddler who needs a time out, and often more like the psycho ex you needed a restraining order for. GenAI has told recovering addicts they’ll feel better with a little meth, it’s told people to kill themselves, it’s literally driven people mad (and that’s not an isolated problem). It’s just bad, but it puts all that badness in a nicely wrapped package that looks pretty smart at first glance (or longer, if you know nothing about what you asked it).

Astrophysicist Katie Mack writes that GenAI was designed to be convincing, not to be correct. And if that ain’t the best description of the brosphere ethos, I don’t know what is.

To sum up: consumer-facing GenAI is very bad, morally and practically. Writers who use it aren’t writers, they’re thieves. Period. Same goes for AI cover art. And I will mount up and ride against any author who promotes AI-produced books.

No, I’m not interested in counterpoint. I have no respect or patience for the pro-AI crowd at all. If we come to a point where AI is the default for art, work, and all important aspects of human life, and I’m still alive when it happens, I’m moving off the grid somewhere and turning into a swamp witch.

/endrant

BTW: The shirt in today’s image was designed by Kim Hu, and is available at https://aftermath.site/buy-destroy-ai-shirt-aftermath-kim-hu

If you read the whole thing, thank you! (And I bet you’re sorry you asked that question, lol!)