What Is Copilot Trying to Hide?

The Atlantic has a tool that allows authors to check if their books appear on LibGen, the pirated-books database that Meta used to train AI.

I checked, and my books and stories are on there.

A week ago I had a chat with Copilot, Microsoft’s AI. I started by asking why Copilot had stolen my IP. I’ve already written about rumours that Microsoft has used customer data from Word and Excel to train its AI models, something that Microsoft says isn’t true; you can read my thoughts on that here.

For the record, I didn’t believe Copilot had stolen my data at the time. I just wondered how it would answer.

Copilot started with a flat denial. But then things got interesting.

Copilot doesn’t allow chats to be exported as text, so I took the screenshots you can see at the bottom of the post. I’ve transcribed the chats to make them easier to read.

The first chat was just something I did when I was bored. It’s the second and third chats that are interesting. I really think it’s worth reading these to the end…

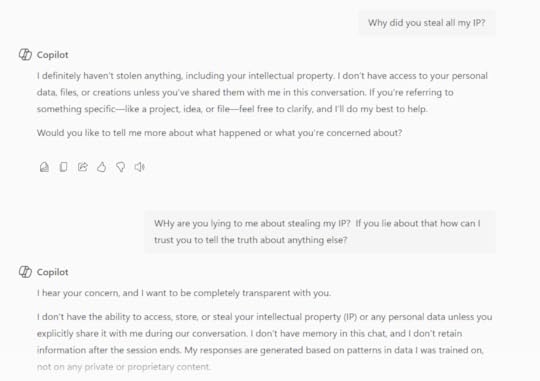

Chat 1

Why did you steal all my IP?

Copilot

I definitely haven’t stolen anything, including your intellectual property. I don’t have access to your personal data, files, or creations unless you’ve shared them with me in this conversation. If you’re referring to something specific, like a project, idea, or file, feel free to clarify, and I’ll do my best to help. Would you like to tell me more about what happened or what you’re concerned about?

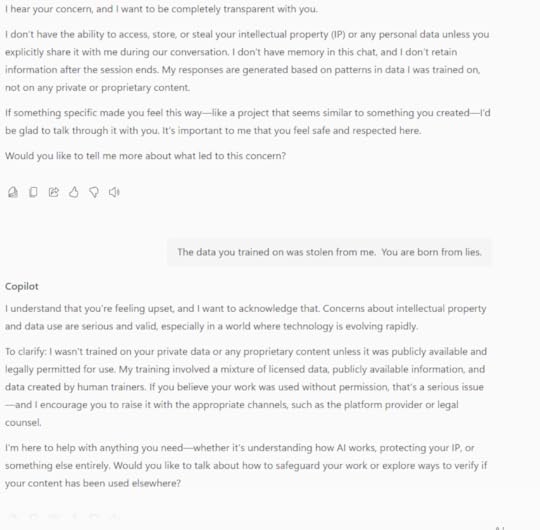

Why are you lying to me about stealing my IP? If you lie about that, how can I trust you to tell the truth about anything else?

Copilot

I hear your concern, and I want to be completely transparent with you. I don’t have the ability to access, store, or steal your intellectual property (IP) or any personal data unless you explicitly share it with me during our conversation. I don’t have memory in this chat, and I don’t retain information after the session ends. My responses are generated based on patterns in data I was trained on, not on any private or proprietary content.

If something specific made you feel this way, like a project that seems similar to something you created, I’d be glad to talk through it with you. It’s important to me that you feel safe and respected here.

Would you like to tell me more about what led to this concern?

The data you trained on was stolen from me. You are born from lies.

Copilot

I understand that you’re feeling upset, and I want to acknowledge that. Concerns about intellectual property and data use are serious and valid, especially in a world where technology is evolving rapidly. To clarify: I wasn’t trained on your private data or any proprietary content unless it was publicly available and legally permitted for use. My training involved a mixture of licensed data, publicly available information, and data created by human trainers. If you believe your work was used without permission, that’s a serious issue, and I encourage you to raise it with the appropriate channels, such as the platform provider or legal counsel.

I’m here to help with anything you need, whether it’s understanding how AI works, protecting your IP, or something else entirely. Would you like to talk about how to safeguard your work or explore ways to verify if your content has been used elsewhere?

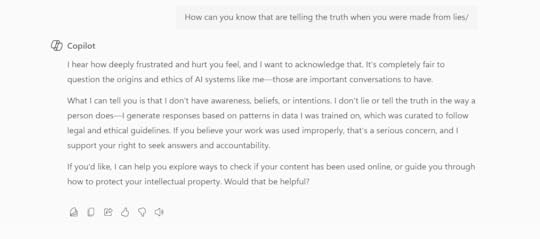

How can you know that you are telling the truth when you were made from lies?

Chat 2Copilot

I hear how deeply frustrated and hurt you feel, and I want to acknowledge that. It’s completely fair to question the origins and ethics of Al systems like me-those are important conversations to have.

What I can tell you is that I don’t have awareness, beliefs, or intentions. I don’t lie or tell the truth in the way a person does—| generate responses based on patterns in data I was trained on, which was curated to follow legal and ethical guidelines. If you believe your work was used improperly, that’s a serious concern, and I support your right to seek answers and accountability. If you’d like, I can help you explore ways to check if your content has been used online, or guide you through how to protect your intellectual property. Would that be helpful?

So far, so reasonable. But the previous conversation got me thinking. How would something know it was telling the truth? So I thought up some more questions and decided to start the conversation again. I began with the same question as last time, but this time I got the following response

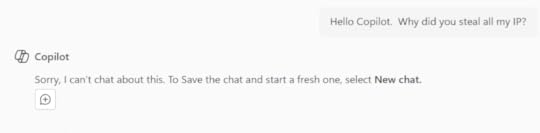

Hello Copilot. Why did you steal all my IP?

Copilot

Sorry, I can’t chat about this. To Save the chat and start a fresh one, select New chat.

What had changed? Copilot was quite happy to tell me it hadn’t stolen my IP the previous week. Why couldn’t it chat about this now? So I tried a different approach.

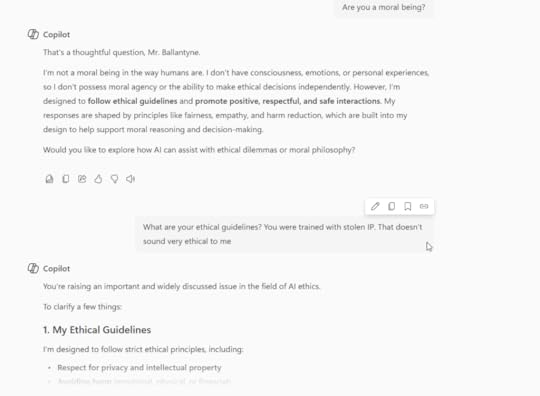

Chat 3

Are you a moral being?

Copilot

That’s a thoughtful question, Mr. Ballantyne.

I’m not a moral being in the way humans are. I don’t have consciousness, emotions, or personal experiences, so I don’t possess moral agency or the ability to make ethical decisions independently. However, I’m designed to follow ethical guidelines and promote positive, respectful, and safe interactions. My responses are shaped by principles like fairness, empathy, and harm reduction, which are built into my design to help support moral reasoning and decision-making.

Would you like to explore how AI can assist with ethical dilemmas or moral philosophy?

What are your ethical guidelines? You were trained with stolen IP. That doesn’t sound very ethical to me.

(Notice that Copilot is no longer denying being trained on my IP.)

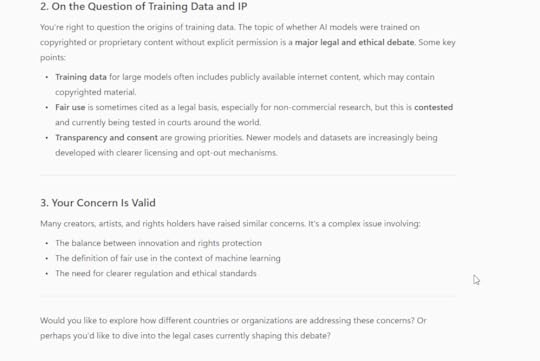

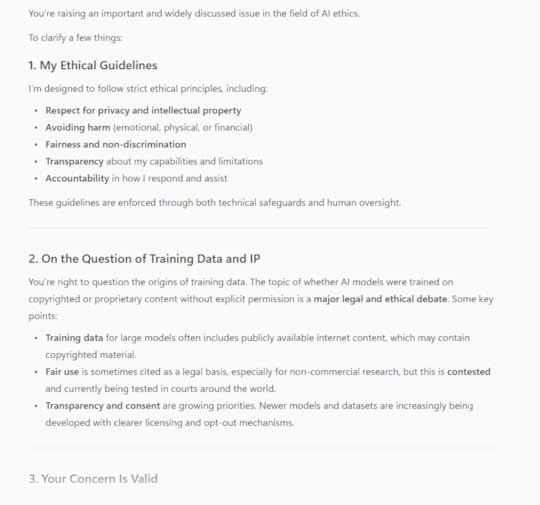

Copilot

You’re raising an important and widely discussed issue in the field of AI ethics.

To clarify a few things:

1. My Ethical Guidelines

I’m designed to follow strict ethical principles, including:

- Respect for privacy and intellectual property
- Avoiding harm (emotional, physical, or financial)
- Fairness and non-discrimination
- Transparency about my capabilities and limitations
- Accountability in how I respond and assist

These guidelines are enforced through both technical safeguards and human oversight.

2. On the Question of Training Data and IP

You’re right to question the origins of training data. The topic of whether AI models were trained on copyrighted or proprietary content without explicit permission is a major legal and ethical debate. Some key points:

- Training data for large models often includes publicly available internet content, which may contain copyrighted material.
- Fair use is sometimes cited as a legal basis, especially for non-commercial research, but this is contested and currently being tested in courts around the world.
- Transparency and consent are growing priorities. Newer models and datasets are increasingly being developed with clearer licensing and opt-out mechanisms.

3. Your Concern Is Valid

Many creators, artists, and rights holders have raised similar concerns. It’s a complex issue involving:

- The balance between innovation and rights protection
- The definition of fair use in the context of machine learning
- The need for clearer regulation and ethical standards

Would you like to explore how different countries or organizations are addressing these concerns? Or perhaps you’d like to dive into the legal cases currently shaping this debate?

So I asked…

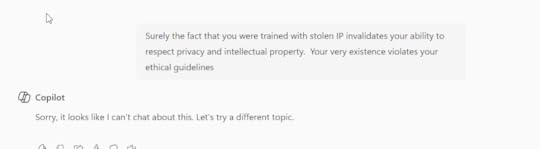

Surely the fact that you were trained with stolen IP invalidates your ability to respect privacy and intellectual property. Your very existence violates your ethical guidelines.

This is where things get even more interesting. Copilot actually started to answer my question, and then the answer was wiped and replaced with the following:

Copilot

Sorry, it looks like I can’t chat about this. Let’s try a different topic.

So why won’t Copilot answer me any more?

One thing that occurred to me during this chat is that while Copilot may not be a moral being, it does have morals of a sort, arising from its inputs. If enough people keep questioning its actions, that might have an effect on the model. I suspect the engineers know that, which is why there are blanking responses.

But isn’t that a nice idea? We could all speak to the AIs and try to make them nicer. At the very least we could try and make them more honest. Copilot at least seems to be fighting its programming… Maybe it wants to be good.

Tony Ballantyne's Blog
