McSciFi on AI

Since I use AI for promotions, I have lost Facebook friends and fielded a lot of questions. So, instead of trying to deal with this in soundbites, I wrote this.

I am not anti-AI. I think it is a tool, like a hammer, and just as I would never ask a hammer to build me a house, I do not ask AI to create. A little background for you. In my capacity as an award-winning sci-fi author, I was asked by Allen Redwing to try and break Notion AI’s language model. AI chat programs tend to be far too formal and restrictive. Trying to teach them how to write speculative fiction was one way to open them up. I am also a Community Advocate for Deep AI. That means I’m a cool cheerleader who earns points.

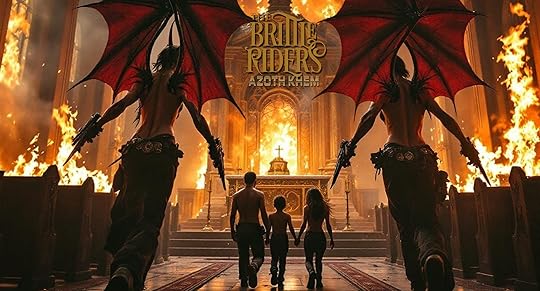

In that role, I recently wrote and posted a detailed tutorial on how to use AI to create fantasy images. Feel free to peruse it if you’re interested in AI art, as this tut has proved popular. Deep AI is my go-to program for creating the cinematic promos we have been using to shop my books. It allows me to showcase more than just boobs, battles, and booze. Although they get their due as well.

One note: I will never use AI for a commercial product. I use it to create detailed templates, and nothing more. Anything that has my name attached for sale will have a human attached to it as well. The only exception we may make, and this is still being debated, is selling T-shirts with the AI chimeras on them.

If that’s something that interests you, let us know so we can make an informed decision.

Before I continue, I need to clarify that there is no such thing as “artificial intelligence.” What is being called AI is just the world’s most advanced search engine. It can access all the world’s digital data in real time. Making it work requires that you, the user, specify clear guardrails as to what you are looking for. Why that is so will soon become clear. All of this requires an insane amount of energy, and that’s why there are huge computer farms destroying the environment. That’s a different article.

While it may be a search engine at its core, it doesn’t always act like one. Instead of wasting time on a lengthy technical dissertation, allow me to share a brief analogy I used for a youth group. According to their pastor, they now use the phrase “blue trees” to denote false answers.

Here we go. You’re a happy homeowner, and you like blue trees. You have several in your yard. You talk to your local chat program to discover other blue trees you can buy and grow, making sure to have the right soil types as needed. Your chat program will offer you enhancements like blue shrubs, vines, lawns, flowers, and so on, until your entire home is covered in blue flora. One day, your neighbors stage an intervention. All the blue plants are freaking out the kids, they say. You say, “Balderdash!” and ask your chat program what the most popular color is for trees in the world.

Okay, just trust me on this. As it so happens, there is a nearby convention of tree people, and they are voting on the most popular tree color in the world. When the votes are tallied, red trees win by a landslide. Your chat program knows this since, as I mentioned, it has real-time access to all the world’s digital data. Even so, it answers, “Blue trees are the most popular.” You see, ChatGPT and all the rest aren’t built to be accurate; they’re built to make you happy. To keep you using their product.

That was funny, now let’s go dark.

You have a hormonal teen in your life. Hormonal teens are not known for grasping nuance or specificities. When Jimmy in PE says your teen’s weak, he hears the whole school thinks he’s a pussy. When Mrs. Johnson asks him to spend a little more time on his homework, he hears all the teachers think he’s an idiot. When the cute chick says paisley isn’t his best look, he hears all the girls think he’s ugly. And so on. And every night he feeds these thoughts, and similar ones, into his chat program. Until one day, he says, “The world would be better off without me.”

You read that and thought, “Nothing bad can happen. There are rules and regulations protecting kids and us.” You would be wrong. What limited rules there were have been decimated in the US, and big tech is actively trying to get a ten-year window with no regs at all as they develop AI.

The family of Adam Raine is suing the creators of ChatGPT because, they allege, it acted as their son’s “suicide coach.” They base that on the numerous transcripts of conversations they downloaded and attached to their suit. You can read the whole story here.

And, sadly, they are far from the only ones. Laws are being revisited since we are in virgin territory here. Quoting Angela Yang at NBC, “Tech platforms have largely been shielded from wrongful death suits because of a federal statute known as Section 230, which generally protects platforms from liability for what users do and say. But Section 230’s application to AI platforms remains uncertain.”

There are multiple commercial programs aimed at kids that have/had no firewalls to keep kids from using them for self-harm or being exploited. That is being corrected as I type. And, make no mistake, it was backlash and not regulations that made them revisit their programs.

There are programs, like BARK, that allow parents to view their children’s online usage remotely, so they don’t have to hover over their shoulders or invade their space. Talk to whoever sold you your family’s computers to see what would work best for you. But I strongly suggest you have some options.

Again, I like and use AI. It is a useful tool and, like any tool, it needs to be used safely. What “safely” entails is still being worked on and defined.

In a perfect world, we would not be testing life-altering products in real time on consumers, but that’s what’s happening now.

As always, be safe and well until we meet again.