You’re Gonna Need This Now More Than Ever

I’m giving a talk in Seattle on 12/3 as part of the Daily Stoic Live tour. Grab seats and come see me! I’ll also be in San Diego on February 5 and Phoenix on February 27. More dates will be announced soon. Sign up here and we’ll let you know when I’m coming to your area.

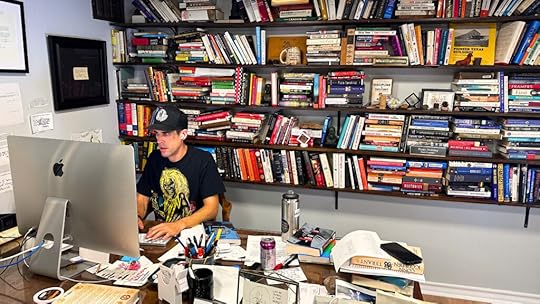

I do all my research on physical notecards.

I only read physical books.

If I have to read a research paper or an article, I print it out and go through it with a pen.

The book I am working on now is currently laid out on an old-school cork bulletin board covered in push pins.

There are many easier and more efficient ways to do all this, I’m sure. But I do it the more difficult and low-tech way on purpose.

That being said, I am not a luddite and I don’t think there’s anything admirable or impressive about being one.

There is something fundamentally foolish about instinctively resisting and rejecting new technology—and I refuse to do it.

I have spent many hours trying to figure out AI tools and large language models, seeing where they can make me better, where they might help me.

In some cases, they have. On our family trip to Greece this summer, I had dozens of places I wanted to visit, scattered across the country with no obvious order or itinerary to route between them. I fed them all to ChatGPT and asked for the most efficient driving route. In thirty seconds, it produced what would have been extraordinarily difficult for me to figure out on my own and, ultimately, allowed us to fit everything we wanted into the trip.

I’ve spent many joyous mornings (and long car rides) with my kids getting it to render ridiculous pictures or tell us stories. We’ve used it to make mockups of things we want to build and had it explain obscure historical concepts in language appropriate for a child.

But in other cases, my use of AI has reassured me of the value of the old techniques, like when I tried to confirm and source a quote about Abraham Lincoln that I had written down on one of my notecards. ChatGPT first told me it wasn’t about Lincoln at all; instead, it was Tolstoy speaking of Dickens. When I pushed back, it tried to tell me it was from Hay and Nicolay, two of Lincoln’s secretaries. When I asked, my copy in hand, what page I could find this on, it told me that the quote didn’t actually exist. Only when I went back, page by page, through an eight-hundred-page prizewinning biography was I able to confirm that my handwritten notecard had in fact been correct. Tolstoy was not involved at all (although he has a great line about Lincoln). It was a 19th-century journalist who had known Lincoln well, and the quote was easily findable in many old newspaper databases and public domain books.

More recently, for a project I’m currently working on, I wanted to know how many U.S. Naval Academy graduates died in World War II. To its credit, ChatGPT showed its work. First it told me that 6% of Naval Academy graduates who served in World War II died. Then it added that between 1940 and 1945, approximately 7,500 people graduated from the Naval Academy. And from those two numbers, it concluded, very confidently, that about 450 graduates must have died.

Of course, that looks like thinking. It looks like real reasoning. And I could see the math was correct. The problem is that these numbers actually had nothing to do with each other. The 6% figure applies to everyone from the Academy who actually served in the war. The 7,500 figure is how many people graduated during the war years. But that wasn’t the question, was it? I happened to know from something I’d read that around 54 Academy classes served in World War II, so using the wartime graduation count to calculate wartime deaths makes no sense. The two numbers are totally unrelated. Also, why are we estimating at all? If the 6% figure exists, that means that the total is a known figure (and of course it is; the Department of Veterans Affairs has to know this statistic).

In any case, my actual solution was much more low tech. I just found a plaque that listed all the names.

The point is: If I hadn’t already read deeply in these areas, had I not known roughly what I was looking for, I would have been fooled. I might have written that Tolstoy called Dickens the only real giant of history. If I didn’t have my own brain, I might have been persuaded by what seemed like a math equation but was, in fact, nonsense.

This is what people miss about AI. There’s a lot of talk about why we should be worried about AI making us, or certain things, obsolete. It’s going to make the humanities obsolete. It’s going to make books, artists, knowledge workers, and expertise itself obsolete.

But the opposite is true! To use these tools well—to not be used by them—you need exactly the things we’re told are becoming obsolete. A broad liberal arts education. Domain expertise. Critical thinking. A feel for what humans actually sound like. The ability to spot when something seems off.

Just the other day (while this article was in progress, actually) I got an email from someone pitching me some book for The Daily Stoic podcast. The email address was legitimate. The pitch itself was somewhat compelling. But it was riddled with those AI flourishes that no human I know would ever use. An overuse of words like “crucial,” “unlock,” and “harness.” Phrases like “a tapestry of” and “in today’s fast-paced world.” And those green checkmark emojis.

I’ve used AI enough to know that ChatGPT or Gemini wrote this pitch…which meant I could promptly delete it.

We’re entering a world of AI slop. Not just on social media. It’s not just content creators who are sadly outsourcing their writing and ideating and scripting and pitching to these tools. It’s everywhere. Emails from coworkers. Press releases from corporations. Journalists, marketers, politicians, thought leaders—everywhere you look, people are quietly passing off AI’s “writing” and “thinking” as their own.

So the essential skill of our time isn’t prompt engineering or coding—it’s having a finely tuned bullshit detector. It’s knowing enough about how humans actually think and write to spot bullshit. It’s having read widely enough to recognize when an answer is hollow, even when it’s dressed up in confident prose. It’s understanding your domain well enough to know what questions to ask and, more importantly, which answers to reject.

We need to know how AI works and what kind of answers it spits out so we don’t get manipulated by people who do.

We need to have read enough Tolstoy to know when a Tolstoy quote doesn’t sound like Tolstoy.

We need to know enough history to catch when two figures or events are being linked that never overlapped.

We need to understand basic statistics well enough to spot when two unrelated numbers are being jammed together just to give you an answer.

This is the kind of work we have to be willing to do…that we have to choose to do. In the new book, Wisdom Takes Work, I quote Seneca: “No man was ever wise by chance.” We must get it ourselves. We cannot delegate it to someone or something else. There is no technology that can do it for you. There is no app. There is no prompt, no shortcut or summary or step-by-step formula. There is no LLM that can spit it out in thirty seconds.

A little while back, I asked Robert Greene what he thought about AI. “I think back to when I was 19 years old and in college,” Robert said. In a class learning to read and translate classical Greek texts, “They gave us a passage of Thucydides, the hardest writer of all to read in ancient Greek. I had this one paragraph I must have spent ten hours trying to translate…That had an incredible impact on me. It developed character, patience, and discipline that helps me even to this day. What if I had ChatGPT, and I put the passage in there, and it gave me the translation right away? The whole thinking process would have been annihilated right there.”

This is why I do all my research on physical notecards. It is not fast, easy, or efficient. And that is the point. Writing things down by hand forces me to engage and struggle with the material for an extended period of time. It forces me to take my time. To go over things again and again. To be immersed. To be focused, patient, and disciplined. To come to understand things deeply.

The irony of AI, this cutting-edge technology, is that it makes the oldest skills more valuable than ever. Reading. Thinking. Knowing things. Having taste. Understanding context. Detecting lies or nonsense.

The machines are getting better at sounding smart.

Which means we need to get better at actually becoming smart.

We need the judgment to separate signal from noise.

We need the discernment to know when something seems a little off.

We need the curiosity to not be satisfied with first answers.

We need patience and discipline.

We need wisdom.

Now more than ever.