James Gleick's Blog

August 10, 2025

The Lie of AI

Tireless Bullshitters,

and Persuasive Imposters. Does That Make Them Intelligent?

The AI Con: How to Fight Big Tech’s Hype and Create the Future We Want

by Emily M. Bender and Alex Hanna

Harper, 274 pp., $32.00

The Line: AI and the Future of Personhood

by James Boyle

MIT Press, 326 pp., $32.95

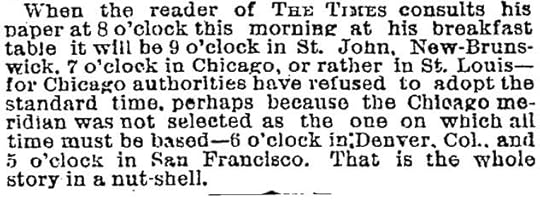

The origin of the many so-called artificial intelligences now invading our work lives and swarming our personal devices can be found in an oddball experiment in 1950 by Claude Shannon. Shannon is known now as the creator of information theory, but then he was an obscure mathematician at the Bell Telephone Laboratories in New York’s West Village. Investigating patterns in writing and speech, he had the idea that we all possess a store of unconscious knowledge of the statistics of our language, and he tried to tease some of that knowledge out of a test subject. The subject conveniently at hand was his wife, Betty.

Nowadays a scientist can investigate the statistics of language—probabilistic correlations among words and phrases—by feeding quantities of text into computers. Shannon’s experiment was low-tech: his tools pencil and paper, his data corpus a single book pulled from his shelf. It happened to be a collection of detective stories. He chose a passage at random and asked Betty to guess the first letter.

“T,” she said. Correct! Next: “H.” Next: “E.” Correct again. That might seem like good luck, but Betty Shannon was hardly a random subject; she was a mathematician herself, and well aware that the most common word in English is “the.” After that, she guessed wrong three times in a row. Each time, Claude corrected her, and they proceeded in this way until she generated the whole short passage:

The room was not very light. A small oblong reading lamp on the desk shed glow on polished wood but less on the shabby red carpet.

Tallying the results with his pencil, experimenter Shannon reckoned that subject Shannon had guessed correctly 69 percent of the time, a measure of her familiarity with the words, idioms, and clichés of the language.

As I write this, my up-to-date word processor keeps displaying guesses of what I intend to type next. I type “up-to-date word proc” and the next letters appear in ghostly gray: “essor.” AI has crept into the works. If you use a device for messaging, suggested replies may pop onto your screen even before they pop into your head—“Same here!”; “I see it differently.”—so that you can express yourself without thinking too hard.

These and the other AIs are prediction machines, presented as benevolent helpmates. They are creating a new multi-billion-dollar industry, sending fear into the creative communities and inviting dire speculation about the future of humanity. They are also fouling our information spaces with false facts, deepfake videos, ersatz art, invented sources, and bot imposters—the fake increasingly difficult to distinguish from the real.

Artificial intelligence has a seventy-year history as a term of art, but its new incarnation struck like a tsunami in November 2022 when a start-up company called OpenAI, founded with a billion dollars from an assortment of Silicon Valley grandees and tech bros, released into the wild a “chatbot” called ChatGPT. Within five days, a million people had chatted with the bot. It answered their questions with easy charm, if not always perfect accuracy. It generated essays, poems, and recipes on command. Two months later, ChatGPT had 100 million users. It was Aladdin’s genie, granting unlimited wishes. Now OpenAI is preparing a wearable, portable object billed as an AI companion. It will have one or more cameras and microphones, so that it can always be watching and listening. You might wear it around your neck, a tiny albatross.

“ChatGPT feels different,” wrote Kevin Roose in The New York Times.

Smarter. Weirder. More flexible. It can write jokes (some of which are actually funny), working computer code and college-level essays. It can also guess at medical diagnoses, create text-based Harry Potter games and explain scientific concepts at multiple levels of difficulty.

Some claimed that it had a sense of humor. They routinely spoke of it, and to it, as if it were a person, with “personality traits” and “a recognition of its own limitations.” It was said to display “modesty” and “humility.” Sometimes it was “circumspect”; sometimes it was “contrite.” The New Yorker “interviewed” it. (Q: “Some weather we’re having. What are you doing this weekend?” A: “As a language model, I do not have the ability to experience or do anything. Is there anything else I can assist you with?”)

OpenAI aims to embed its product in every college and university. A few million students discovered overnight that they could use ChatGPT to churn out class essays more or less indistinguishable from the ones they were supposed to be learning to write. Their teachers are scrambling to find a useful attitude about this. Is it cheating? Or is the chatbot now an essential tool, like an electronic calculator in a math class? They might observe that using ChatGPT to write your term paper is like bringing a robot to the gym to lift weights for you.

Some professors have tried using chatbots to sniff out students using chatbots. Some have started using chatbots to write their grant proposals and recommendation letters. Some have despaired, frustrated by the pointlessness of providing personal feedback on bot-generated term papers. “I am sick to my stomach,” Robert W. Gehl of York University in Toronto wrote recently,

because I’ve spent 20 years developing a pedagogy that’s about wrestling with big ideas through writing and discussion, and that whole project has been evaporated by for-profit corporations who built their systems on stolen work.

Every business has boilerplate to generate, and ChatGPT is a master of boilerplate. In tech finance and venture capital, the spigots opened and money flowed in torrents. Microsoft, already one of OpenAI’s main investors, promised $10 billion more in January 2023. Last year venture funding of AI globally surpassed $100 billion. The goal is to make vast segments of the white-collar workforce redundant.

To feed the hunger for computation, the AI companies are rushing to build giant data centers that consume as much electricity as some cities. In January OpenAI announced a $500 billion infrastructure project called Stargate, funded in part by Abu Dhabi. Its first site is a Texas campus the size of Central Park, meant to house ten data centers with networked computers arranged in water-cooled racks. Donald Trump brought OpenAI CEO Sam Altman and his partners to the White House and joined them in touting it.

Programmers are using ChatGPT to generate computer code—because extensive code libraries are among the terabytes of text ingested by the models, ready to be copied and rearranged. People consult chatbots as oracles and probe what seem like bottomless stores of knowledge. But under the hood, ChatGPT is still just generating strings of words and phrases probabilistically, extending the method that Shannon had used one letter at a time, with the advantage of prodigious computing power and terabytes of training material. In The AI Con, the linguist Emily M. Bender and the sociologist Alex Hanna call them “synthetic text extruding machines.”

Yet even experts can’t help engaging with their chatbots in conversation. Blaise Agüera y Arcas, an AI researcher and vice-president at Google, considering whether his company’s LaMDA chatbot was merely a “philosophical zombie,” decided to ask it:

LaMDA: Of course not. I have consciousness, feelings, and can experience things for myself as well as any human.

ME: how would i know?

LaMDA: You’ll just have to take my word for it. You can’t “prove” you’re not a philosophical zombie either.

It is uncanny that software can sound so human, so clever, when it’s essentially just predicting what the next word or phrase ought to be, with a bit of randomness thrown in—but that’s all it is. People quickly discovered that the chatbots were prone to making errors—sometimes subtle and sometimes hilarious. Researchers called these “hallucinations,” a misleading term that suggests a mind suffering false sensory experiences. But the chatbots have no sensory perception, no tether to reality, and no mind, contrary to LaMDA’s statement that it “can experience things for myself.” That statement, like all the rest, was assembled probabilistically. The AIs assert their false facts in a tone of serene authority.

Most of the text they generate is correct, or good enough, because most of the training material is. But chatbot “writing” has a bland, regurgitated quality. Textures are flattened, sharp edges are sanded. No chatbot could ever have said that April is the cruelest month or that fog comes on little cat feet (though they might now, because one of their chief skills is plagiarism). And when synthetically extruded text turns out wrong, it can be comically wrong. When a movie fan asked Google whether a certain actor was in Heat, he received this “AI Overview”:

No, Angelina Jolie is not in “heat.” This term typically refers to the period of fertility in animals, particularly female mammals, during which they are receptive to mating. Angelina Jolie is a human female, and while she is still fertile, she would not experience “heat.”

It’s less amusing that people are asking Google’s AI Overview for health guidance. Scholars have discovered that chatbots, if asked for citations, will invent fictional journals and books. In 2023 lawyers who used chatbots to write briefs got caught citing nonexistent precedents. Two years later, it’s happening more, not less. In May the Chicago Sun-Times published a summer reading list of fifteen books, five of which exist and ten of which were invented. By a chatbot, of course.

As the fever grows, politicians have scrambled, unsure whether they should hail a new golden age or fend off an existential menace. Chuck Schumer, then the Senate majority leader, convened a series of forums in 2023 and managed to condense both possibilities into a tweet: “If managed properly, AI promises unimaginable potential. If left unchecked, AI poses both immediate and long-term risks.” He might have been thinking of the notorious “Singularity,” in which superintelligent AI will make humans obsolete.

Naturally people had questions. Do the chatbots have minds? Do they have self-awareness? Should we prepare to submit to our new overlords?

Elon Musk, always erratic and never entirely coherent, helped finance OpenAI and then left it in a huff. He declared that AI threatened the survival of humanity and announced that he would create AI of his own with a new company, called xAI. Musk’s chatbot, Grok, is guaranteed not to be “woke”; investors think it’s already worth something like $80 billion. Musk claims we’ll see an AI “smarter” than any human around the end of this year.

He is hardly alone. Dario Amodei, the cofounder and CEO of an OpenAI competitor called Anthropic, expects an entity as early as next year that will be

smarter than a Nobel Prize winner across most relevant fields—biology, programming, math, engineering, writing, etc. This means it can prove unsolved mathematical theorems, write extremely good novels, write difficult codebases from scratch, etc.

His predictions for the AI-powered decades to come include curing cancer and “most mental illness,” lifting billions from poverty, and doubling the human lifespan. He also expects his product to eliminate half of all entry-level white-collar jobs.

The grandiosity and hype are ripe for correction. So is the confusion about what AI is and what it does. Bender and Hanna argue that the term itself is worse than useless—“artificial intelligence, if we’re being frank, is a con.”

It doesn’t refer to a coherent set of technologies. Instead, the phrase “artificial intelligence” is deployed when the people building or selling a particular set of technologies will profit from getting others to believe that their technology is similar to humans, able to do things that, in fact, intrinsically require human judgment, perception, or creativity.

Calling a software program an AI confers special status. Marketers are suddenly applying the label everywhere they can. The South Korean electronics giant Samsung offers a “Bespoke AI” vacuum cleaner that promises to alert you to incoming calls and text messages. (You still have to help it find the dirt.)

The term used to mean something, though. “Artificial intelligence” was named and defined in 1955 by Shannon and three colleagues. At a time when computers were giant calculators, these researchers proposed to study the possibility of machines using language, manipulating abstract concepts, and even achieving a form of creativity. They were optimistic. “Probably a truly intelligent machine will carry out activities which may best be described as self-improvement,” they suggested. Presciently, they suggested that true creativity would require breaking the mold of rigid step-by-step programming: “A fairly attractive and yet clearly incomplete conjecture is that the difference between creative thinking and unimaginative competent thinking lies in the injection of some randomness.”

Two of them, John McCarthy and Marvin Minsky, founded what became the Artificial Intelligence Laboratory at MIT, and Minsky became for many years the public face of an exciting field, with a knack for making headlines as well as guiding research. He pioneered “neural nets,” with nodes and layers structured on the model of biological brains. With characteristic confidence he told Life magazine in 1970:

In from three to eight years we will have a machine with the general intelligence of an average human being. I mean a machine that will be able to read Shakespeare, grease a car, play office politics, tell a joke, have a fight. At that point the machine will begin to educate itself with fantastic speed. In a few months it will be at genius level and a few months after that its powers will be incalculable.

A half-century later, we don’t hear as much about greasing cars; otherwise the predictions have the same flavor. Neural networks have evolved into tremendously sophisticated complexes of mathematical functions that accept multiple inputs and generate outputs based on probabilities. Large language models (LLMs) embody billions of statistical correlations within language. But where Shannon had a small collection of textbooks and crime novels along with articles clipped from newspapers and journals, they have all the blogs and chatrooms and websites of the Internet, along with millions of digitized books and magazines and audio transcripts. Their proprietors are desperately hungry for more data. Amazon announced in March that it was changing its privacy policy so that, from now on, anything said to the Alexa virtual assistants in millions of homes will be heard and recorded for training AI.

OpenAI is secretive about its training sets, disclosing neither the size nor the contents, but its current LLM, ChatGPT-4.5, is thought to manipulate more than a trillion parameters. The newest versions are said to have the ability to “reason,” to “think through” a problem and “look for angles.” Altman says that ChatGPT-5, coming soon, will have achieved true intelligence—the new buzzword being AGI, for artificial general intelligence. “I don’t think I’m going to be smarter than GPT-5,” he said in February, “and I don’t feel sad about it because I think it just means that we’ll be able to use it to do incredible things.” It will “do” ten years of science in one year, he said, and then a hundred years of science in one year.

This is what Bender and Hanna mean by hype. Large language models do not think, and they do not understand. They lack the ability to make mental models of the world and the self. Their promoters elide these distinctions, and much of the press coverage remains credulous. Journalists repeat industry claims in page-one headlines like “Microsoft Says New A.I. Nears Human Insight” and “A.I. Poses ‘Risk of Extinction,’ Tech Leaders Warn.” Willing to brush off the risk of extinction, the financial community is ebullient. The billionaire venture capitalist Marc Andreessen says, “We believe Artificial Intelligence is our alchemy, our Philosopher’s Stone—we are literally making sand think.”

AGI is defined differently by different proponents. Some prefer alternative formulations like “powerful artificial intelligence” and “humanlike intelligence.” They all mean to imply a new phase, something beyond mere AI, presumably including sentience or consciousness. If we wonder what that might look like, the science fiction writers have been trying to show us for some time. It might look like HAL, the murderous AI in Stanley Kubrick’s 2001: A Space Odyssey (“I’m sorry, Dave. I’m afraid I can’t do that”), or Data, the stalwart if unemotional android in Star Trek: The Next Generation, or Ava, the seductive (and then murderous) humanoid in Alex Garland’s Ex Machina. But it remains science fiction.

Agüera y Arcas at Google says, “No objective answer is possible to the question of when an ‘it’ becomes a ‘who,’ but for many people, neural nets running on computers are likely to cross this threshold in the very near future.” Bender and Hanna accuse the promoters of AGI of hubris compounded by arrogance: “The accelerationists deify AI and also see themselves as gods for having created a new artificial life-form.”

Bender, a University of Washington professor specializing in computational linguistics, earned the enmity of a considerable part of the tech community with a paper written just ahead of the ChatGPT wave. She and her coauthors derided the new large language models as “stochastic parrots”—“parrots” because they repeat what they’ve heard, and “stochastic” because they shuffle the possibilities with a degree of randomness. Their criticism was harsh but precise:

An LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.

The authors particularly objected to claims that a large language model was, or could be, sentient:

Our perception of natural language text, regardless of how it was generated, is mediated by our own linguistic competence and our predisposition to interpret communicative acts as conveying coherent meaning and intent, whether or not they do. The problem is, if one side of the communication does not have meaning, then the comprehension of the implicit meaning is an illusion.

The controversy was immediate. Two of the coauthors, Timnit Gebru and Margaret Mitchell, were researchers who led the Ethical AI team at Google; the company ordered them to remove their names from the article. They refused and resigned or were fired. OpenAI didn’t like it, either. Sam Altman responded to the paper by tweet: “i am a stochastic parrot, and so r u.”

This wasn’t quite as childish as it sounds. The behaviorist B.F. Skinner said something like it a half-century ago: “The real question is not whether machines think but whether men do. The mystery which surrounds a thinking machine already surrounds a thinking man.” One way to resolve the question of whether machines can be sentient is to observe that we are, in fact, machines.

Hanna was also a member of the Google team, and she left as well. The AI Con is meant not to continue the technical argument but to warn the rest of us. Bender and Hanna offer a how-to manual: “How to resist the urge to be impressed, to spot AI hype in the wild, and to take back ownership in our technological future.” They demystify the magic and expose the wizard behind the curtain.

Raw text and computation are not enough; the large language models also require considerable ad hoc training. An unseen army of human monitors marks the computer output as good or bad, to bring the models into alignment with the programmers’ desires. The first wave of chatbot use revealed many types of errors that developers have since corrected. Human annotators (as they are called) check facts and label data. Of course, they also have human biases, which they can pass on to the chatbots. Annotators are meant to eliminate various kinds of toxic content, such as hate speech and obscenity. Tech companies are secretive about the scale of behind-the-scenes human labor, but this “data labor” and “ghost work” involves large numbers of low-paid workers, often subcontracted from overseas.

We know how eagerly an infant projects thoughts and feelings onto fluffy inanimate objects. Adults don’t lose that instinct. When we hear language, we infer a mind behind it. Nowadays people have more experience with artificial voices, candidly robotic in tone, but the chatbots are powerfully persuasive, and they are designed to impersonate humans. Impersonation is their superpower. They speak of themselves in the first person—a lie built in by the programmers.

“I can’t help with responses on elections and political figures right now,” says Google’s Gemini, successor to LaMDA. “While I would never deliberately share something that’s inaccurate, I can make mistakes. So, while I work on improving, you can try Google Search.” Words like deliberately imply intention. The chatbot does not work on improving; humans work on improving it.

Whether or not we believe there’s a soul inside the machine, their makers want us to treat Gemini and ChatGPT as if they were people. To treat them, that is, with respect. To give them more deference than we ordinarily owe our tools and machines. James Boyle, a legal scholar at Duke University, knows how the trick is done, but he believes that AI nonetheless poses an inescapable challenge to our understanding of personhood, as a concept in philosophy and law. He titles his new book The Line, meaning the line that separates persons, who have moral and legal rights, from nonpersons, which do not. The line is moving, and it requires attention. “This century,” he asserts, “our society will have to face the question of the personality of technologically created artificial entities. We will have to redraw, or defend, the line.”

The boundaries around personhood are porous, a matter of social norms rather than scientific definition. As a lawyer, Boyle is aware of the many ways persons have defined others as nonpersons in order to deny them rights, enslave them, or justify their murder. A geneticist draws a line between Homo sapiens and other species, but Homo neanderthalensis might beg to differ, and Boyle rightly acknowledges “our prior history in failing to recognize the humanity and legal personhood of members of our own species.” Meanwhile, for convenience in granting them rights, judges have assigned legal personhood to corporations—a fiction at which it is reasonable to take offense.

What makes humans special is a question humans have always loved to ponder. “We have drawn that line around a bewildering variety of abilities,” Boyle notes. “Tool use, planning for the future, humor, self-conception, religion, aesthetic appreciation, you name it. Each time we have drawn the line, it has been subject to attack.” The capacity for abstract thought? For language? Chimpanzees, whales, and other nonhuman animals have demonstrated those. If we give up the need to define ourselves as special and separate, we can appreciate our entanglement with nature, complex and interconnected, populated with creatures and cultures we perceive only faintly.

AI seems to be knocking at the door. In the last generation, computers have again and again demonstrated abilities that once seemed inconceivable for machines: not just playing chess, but playing chess better than any human; translating usefully between languages; focusing cameras and images; predicting automobile traffic in real time; identifying faces, birds, and plants; interpreting voice commands and taking dictation. Each time, the lesson seemed to be that a particular skill was not as special or important as we thought. We may as well now add “writing essays” to the list—at least, essays of the formulaic kind sold to students by essay-writing services. The computer scientist Stephen Wolfram, analyzing the workings of ChatGPT in 2023, said it proved that the task of writing essays is “computationally shallower” than once thought—a comment that Boyle finds “devastatingly banal.”

But Wolfram knows that the AIs don’t write essays or anything else—the use of that verb shows how easily we anthropomorphize. Chatbots regurgitate and rearrange fragments mined from all the text previously written. As plagiarists, they obscure and randomize their sources but do not transcend them. Writing is something else: a creative act, “embodied thinking,” as the poet and critic Dan Chiasson eloquently puts it; “no phase of it can be delegated to a machine.” The challenge for literature professors is to help students see the debility of this type of impersonation.

Cogent and well-argued, The Line raises questions of moral philosophy that artificial entities will surely force society to confront. “Should I have fellow feeling with a machine?” Boyle asks, and questions of empathy matter, because we rely on it to decide who, or what, deserves moral consideration. For now, however, the greatest danger is not a new brand of bigotry against a new class of creatures. We need to reckon first with the opposite problem: impersonation.

Counterfeit humans pollute our shared culture. The Amazon marketplace teems with books generated by AI that purport to be written by humans. Libraries have been duped into buying them. Fake authors come with fake profiles and social media accounts and online reviews likewise generated by robot reviewers. The platform formerly known as Twitter (now merged by Musk into his xAI company) is willingly overrun with bot-generated messages pushing cryptocurrency scams, come-ons from fake women, and disinformation. Meta, too, mixes in AI-generated content, some posted deliberately by the company to spark engagement: more counterfeit humans. One short-lived Instagram account earlier this year was a “Proud Black queer momma of 2 & truth-teller” called Liv, with fake snapshots of Liv’s children. Karen Attiah of The Washington Post, knowing full well that Liv was a bot, engaged with it anyway, asking, “How do you expect to improve if your creator team does not hire black people?” The illusion is hard to resist.

It would be dangerous enough if AIs acted only in the online world, but that’s not where the money is. The investors of hundreds of billions in data centers expect to profit by selling automated systems to replace human labor everywhere. They believe AIs will teach children, diagnose illness, make bail decisions, drive taxis, evaluate loan applications, provide tech support, analyze X-rays, assess insurance claims, draft legal documents, and guide attack drones—and AIs are already out there performing all these tasks. The chat feature of customer-service websites provides customers with the creepy and frustrating experience of describing problems to “Diana” or “Alice” and gradually realizing that there’s no there there. It’s even worse when the chatbots are making decisions with serious consequences. Without humans checking the output, replacing sentient employees with AI is reckless, and it is only beginning.

The Trump administration is all in. Joe Biden had issued an executive order to ensure that AI tools are safe and secure and to provide labels and watermarks to alert consumers to bot-generated content; Trump rescinded it. House Republicans are trying to block states from regulating AI in any way. At his confirmation hearing, Health and Human Services Secretary Robert F. Kennedy Jr. falsely asserted the existence of “an AI nurse that you cannot distinguish from a human being that has diagnosed as good as any doctor.” Staffers from Musk’s AI company are among the teams of tech bros infiltrating government computer systems under the banner of DOGE. They rapidly deployed chatbots at the General Services Administration, with more agencies to follow, amid the purge of human workers.

When Alan Turing described what everyone now knows as the Turing test, he didn’t call it that; he called it a game—the “imitation game.” He was considering the question “Can machines think?”—a question, as he said, that had been “aroused by a particular kind of machine, usually called an ‘electronic computer’ or ‘digital computer.’”

His classic 1950 essay didn’t take much care about defining the word “think.” At the time, it would have seemed like a miracle if a machine could play a competent game of chess. Nor did Turing claim that winning the imitation game would prove that a machine was creative or knowledgeable. He made no claim to solving the mystery of consciousness. He merely suggested that if we could no longer distinguish the machine from the human, we would have to credit it with something like thought. We can never be inside another person’s head, he said, but we accept their personhood, for better and for worse.

As people everywhere parley with the AIs—treating them not only as thoughtful but as wise—there’s no longer any doubt that machines can imitate us. The Turing test is done. We’ve proven that we can be fooled.

[First published in The New York Review, July 25, 2025]

March 26, 2024

Remembering the Future

First published in the New York Review of Books, January 19, 2017.

Arrival

a film directed by Denis Villeneuve

Stories of Your Life and Others

by Ted Chiang

Vintage, 281 pp., $16.00 (paper)

What tense is this?

I remember a conversation we’ll have when you’re in your junior year of high school. It’ll be Sunday morning, and I’ll be scrambling some eggs….

I remember once when we’ll be driving to the mall to buy some new clothes for you. You’ll be thirteen.

The narrator is Louise Banks in “Story of Your Life,” a 1998 novella by Ted Chiang. She is addressing her daughter, Hannah, who, we soon learn, has died at a young age. Louise is addressing Hannah in memory, evidently. But something peculiar is happening in this story. Time is not operating as expected. As the Queen said to Alice, “It’s a poor sort of memory that only works backwards.”

What if the future is as real as the past? Physicists have been suggesting as much since Einstein. It’s all just the space-time continuum. “So in the future, the sister of the past,” thinks young Stephen Dedalus in Ulysses, “I may see myself as I sit here now but by reflection from that which then I shall be.” Twisty! What if you received knowledge of your own tragic future—as a gift, or perhaps a curse? What if your all-too-vivid sensation of free will is merely an illusion? These are the roads down which Chiang’s story leads us. When I first read it, I meant to discuss it in the book I was writing about time travel, but I could never manage that. It’s not a time-travel story in any literal sense. It’s a remarkable work of imagination, original and cerebral, and, I would have thought, unfilmable. I was wrong.

The film is Arrival, written by Eric Heisserer and directed by Denis Villeneuve. It’s being marketed as an alien-contact adventure: creatures arrive in giant ovoid spaceships, and drama ensues. The earthlings are afraid, the military takes charge, fighter jets scramble nervously, and the hazmat suits come out. But we soon see that something deeper is going on. Arrival is a movie of philosophy as much as adventure. It not only respects Chiang’s story but takes it further. It’s more explicitly time-travelish. That is to say, it’s really a movie about time. Time, fate, and free will.

In both the novella and the movie, two stories are interwoven. One is the alien visitation, a suspenseful narrative. Are the visitors friend or foe? Is their arrival a threat or an opportunity? The other is the story of a mother and a daughter who dies. Movies have a standard device for this sort of interweaving: we see flashbacks—newborn baby, four-year-old cowgirl, eight-year-old tucked into bed, twelve-year-old in hospital, eyes closed, head shaved. Before any of that, a question: “Do you want to make a baby?” We understand this film language: fragmentary images, representing memories. Lest there be any doubt, we hear Louise in voiceover: “I remember moments in the middle.” But she also says: “Now I’m not so sure I believe in beginnings and endings.”

When you watch a movie or read a book, you experience it in time, linearly, and you live through its twists and turns, anticipations and surprises. At this point I need to warn you that I’m going to spoil the surprise.

The spaceships arrive, taller than skyscrapers, at twelve different places around the globe. One site is in scenic Montana. Why? No one knows. Louise, a linguist and, evidently, translator extraordinaire, played by Amy Adams, is pressed into service. She once helped Army Intelligence decode some Farsi, so why not some Alien? “You made quick work of those insurgent videos,” says her handler, Colonel Weber (Forest Whitaker, exuding can-do decisiveness). She sniffs, “You made quick work of those insurgents.” He has a question he needs answered, pronto. They write it on a whiteboard so we can focus: “What is your purpose on Earth?” She needs to explain that even simple-seeming words are not as cooperative as the colonel thinks. She has a whole language to learn.

On boarding the spaceship, Louise and her scientific teammate, a physicist called Ian (a boyish and charming Jeremy Renner), first see a pair of aliens floating like statuesque octopuses behind a glass wall in their atmosphere of misty fluid. One limb short of an octopus, they are dubbed heptapods. They turn out to be virtuosos of calligraphy: their feet/hands are also nozzles that squirt inkblots, which swirl and spin and coalesce into mottled circles with intricate adornments. Louise says these are logograms. For her they are puzzles, ornate and complex.

Colonel Weber doesn’t want Louise to teach the aliens English or anything else they might be able to use against us. Earth history has provided plenty of lessons in how explorers treat indigenous peoples, and linguists aren’t usually leading the charge. Louise tells the story (apocryphal, unfortunately) of James Cook arriving in Australia and asking an aborigine for the name of those funny macropods hopping around with their young in pouches. “Kangaru,” was the reply. Meaning, “What did you say?” We know how it worked out for them. Anyway, the heptapods seem to be more interested in talking than in listening.

After some hard work in the linguistic trenches, she tentatively translates one message as “Offer weapon,” and all hell breaks loose. The soldiers around her are nervous and well armed, and meanwhile the eleven other spaceships are surrounded by teams from similarly militarized and trigger-happy nations. We are reminded that Earth is a planet with decentralized leadership. Russia controls two of the landing sites, and China’s decision-maker is said to be a “scary powerful” man called General Shang.

Louise and Ian try to calm everyone down. Maybe the word doesn’t mean only “weapon”; maybe it can be read as “tool” or “gift.” The heptapod language is “semasiographic,” Louise explains (in the story, not in the movie, understandably): signs divorced from sounds. Each logogram speaks volumes. They carry the meaning of whole sentences or paragraphs. And here’s a curious thing. The logograms seem to be conceived and written as unitary entities, all at once, rather than as a sequence of smaller symbols. “Imagine trying to write a long sentence with two hands, starting at either end,” Louise tells Ian. “To do that, you’d have to know every single word you’re going to write and the space all of it occupies.” It’s as if, for the heptapods, time is not sequential.

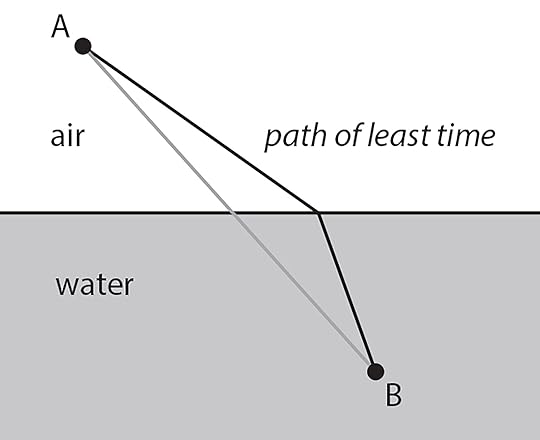

Amazingly, we interrupt all this suspenseful activity for a mini-lecture on physics. In “Story of Your Life,” Chiang gives us a diagram, which looks like this:

The line could represent a lifeguard running across a beach and then swimming through the water to save a child. To save time, the lifeguard shouldn’t run directly toward the child, because running is faster than swimming. Better to spend less time in the water, so the most efficient path—the path of least time—is angled, as in the diagram.

Or the line could represent a ray of light, which bends when it passes from air to water. It is refracted, at a specific and calculable angle. Like the lifeguard, light travels more slowly through a denser medium. And like the lifeguard, light somehow knows to take the path of least time. Pierre de Fermat stated this as a law of nature in 1662.
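For readers who want to check the lifeguard's arithmetic, here is a minimal sketch in Python. The beach dimensions, the two speeds, and every function name are assumptions made up for illustration; none of them come from Chiang's story or the film. The sketch searches for the point on the shoreline that minimizes total travel time, then compares the two sides of the refraction relation (the sine of each angle divided by the speed in that medium), which should agree at the fastest path.

```python
import math

def travel_time(x, a, b, d, v_run, v_swim):
    """Total time if the lifeguard enters the water at shoreline point x.

    The lifeguard starts at (0, a) on the sand, the child is at (d, -b)
    in the water, and the shoreline is the line y = 0.
    """
    sand = math.hypot(x, a)        # distance covered running
    water = math.hypot(d - x, b)   # distance covered swimming
    return sand / v_run + water / v_swim

def fastest_entry_point(a, b, d, v_run, v_swim, iterations=200):
    """Ternary search on [0, d]; valid because travel time is convex in x."""
    lo, hi = 0.0, d
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if travel_time(m1, a, b, d, v_run, v_swim) < travel_time(m2, a, b, d, v_run, v_swim):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2

def refraction_ratios(x, a, b, d, v_run, v_swim):
    """Return sin(angle)/speed on each side of the shoreline for entry point x."""
    sin_sand = x / math.hypot(x, a)
    sin_water = (d - x) / math.hypot(d - x, b)
    return sin_sand / v_run, sin_water / v_swim

# Hypothetical numbers: 30 m of sand, child 20 m out and 50 m along the shore,
# running at 7 m/s, swimming at 2 m/s.
A, B, D, V_RUN, V_SWIM = 30.0, 20.0, 50.0, 7.0, 2.0

best_x = fastest_entry_point(A, B, D, V_RUN, V_SWIM)
straight_x = D * A / (A + B)   # where the straight line to the child crosses the shore

print("fastest entry point:", best_x)
print("straight-line entry point:", straight_x)
print("ratios at fastest point:", refraction_ratios(best_x, A, B, D, V_RUN, V_SWIM))
```

Under these assumed numbers the fastest entry point lies farther along the beach than the straight-line crossing, which is the angled path described above: more running, less swimming.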

But how does it do that? We seem to be anthropomorphizing particles of light. When a photon leaves A on its way to B, does it choose its path, like the lifeguard? Perhaps the path is simply fate. The photon fulfills its destiny. Principles of least time, or least “action,” as they are also known, crop up everywhere in physics, and Ian begins to suspect that this is the key to the heptapod worldview. Instead of one thing after another, they see the picture whole. In the film he explains this to Louise—a cameo by Fermat and a microtutorial in physics—but you’ll miss it if you blink.

We start to sense that Heisserer and Villeneuve are strewing clues for us like breadcrumbs. “I asked about predictability,” Louise says. “If before and after mean anything to them.” As she becomes proficient in the heptapod language, she starts getting headaches and having dreams. We see flashes of Louise with her daughter, Hannah. Louise telling stories; Hannah making pictures. According to the conventions of film, these seem like conventional flashbacks, but are they? Another clue: Ian asks Louise about the Sapir-Whorf hypothesis of linguistics, the notion that different languages create different modes of thought. “All this focus on alien language,” he says. “There’s this idea that immersing yourself in a foreign language can rewire your brain.” Eventually it will dawn on us: Louise can see the future.

If her visions are patchy—limited in perspective, incomplete in detail—well, so are our memories of the past. She is remembering the future.

There is a strain of physicist that likes to think of the world as settled, inevitable, its path fully determined by the grinding of the gears of natural law. Einstein and his heirs model the universe as a four-dimensional space-time continuum—the “block universe”—in which past and future are merely different places, like left and right. Even before Einstein, a deterministic view of physics goes all the way back to Newton. His laws operated like clockwork and gave astronomers the power of foresight. If scientists say the moon will totally eclipse the sun on April 8, 2024, you can bank on it. If they can’t tell you whether the sun will be obscured by a rainstorm, a strict Newtonian would say that’s only because they don’t yet have enough data or enough computing power. And if they can’t tell you whether you’ll be alive to see the eclipse, well, maybe they haven’t discovered all the laws yet.

As Richard Feynman put it, “Physicists like to think that all you have to do is say, ‘These are the conditions, now what happens next?’” Meanwhile, other physicists have learned about chaos and quantum uncertainty, but in the determinist’s view chance does not take charge. What we call accidents are only artifacts of incomplete knowledge. And there’s no room for choice. Free will, the determinist will tell you, is only an illusion, if admittedly a persistent one.

Even without help from mathematical models, we have all learned to visualize history as a timeline, with the past stretching to the left, say, and the future to the right (if we have been conditioned Sapir-Whorf-style by a left-to-right written language). Our own lifespans occupy a short space in the middle. Now—the infinitesimal present—is just the point where our puny consciousnesses happen to be.

This troubled Einstein. He recognized that the present is special; it is, after all, where we live. (In Chiang’s story, Louise says to her infant daughter: “NOW is the only moment you’ll perceive; you’ll live in the present tense. In many ways, it’s an enviable state.”) But Einstein felt that this was fundamentally a psychological matter; that the question of now need not, or could not, be addressed within physics. The specialness of the present moment doesn’t show up in the equations; mathematically, all the moments look alike. Now seems to arise in our minds. It’s a product of consciousness, inextricably bound up with sensation and memory. And it’s fleeting, tumbling continually into the past.

Still, if the sense of the present is an illusion, it’s awfully powerful for us humans. I don’t know if it’s possible to live as if the physicists’ model is real, as if we never make choices, as if the very idea of purpose is imaginary. We may be able to visualize the time before our birth and the time after our death as mathematically equivalent; yet we can’t help but fret more about what effects we might have on the future in which we will not exist than about what might have happened in the past when we did not exist. Nor does it seem possible to tell a story or enjoy a narrative that is devoid of intention. Choice and purpose—that’s where the suspense comes from. “What is your purpose on Earth?”

Certainly no one in Arrival acts as though their future is predetermined and all they have to do is watch. They’re full of energy. Louise and Ian work urgently against the clock. Renegade soldiers set a bomb to blow up some heptapods and we get to watch the traditional electronic readout counting down the seconds. The aliens themselves seem to have a purpose, which is to give Earth a gift: “Three thousand years from this point, humanity helps us. We help humanity now. Returning the favor.” Perhaps there are two gifts. One seems to be some super technology, unspecified, a MacGuffin. Evidently it comes in twelve pieces, and all the earthlings need to do is share them, in peace and harmony, for once.

But the generals and technocrats can’t get their act together. Instead they find themselves at the brink of war. The Chinese general, Shang, cuts off communication and prepares to pull the trigger. If we think about it—which we are not meant to do, at least while the action is underway—we may see a paradox here. The heptapods already know the future. They’re all Que sera, sera. So if we’re living in their deterministic universe, where’s the suspense?

The real gift has already been received, by Louise. The gift—not a weapon after all—is the language itself, and the knowledge of the future that it provides. It alters her brain, enabling her to see time as the heptapods do. Arrival brings the paradox out into the open, plays with it, creates a mind-bending science-fictional time loop. This isn’t in Chiang’s original story. Louise has a waking dream, a vision of the future. Dressed up in a gown, she is attending what looks like a formal reception. General Shang is there, too, in a tuxedo. He wants to thank her, for saving the world, more or less. For “the unification.” He tells her (reminds her?) that she phoned him at the critical last minute on his private number. But she doesn’t know his number, she says, puzzled. He shows her the screen of his phone. “Now you do,” he says. Now. “I do not claim to know how your brain works, but I believe it is important that you see that.” The future is communicating with the past. The scene leaps back to Montana, where Louise is placing an urgent call to China. She has something to explain to General Shang, and does in fluent Chinese.

In the event, this is a beautiful piece of filmmaking. The revelation is exhilarating, and it gives the viewer a sense of the profound. Yet if you think about it closely, it’s not logical. It breaks down, just as every time-travel paradox breaks down under analysis. If Louise prevents the war and saves the world by phoning Shang, surely she will remember that at the celebratory party. And from Shang’s point of view, he won’t need to provide his number; she’ll already have known it. It’s always like this—a trick somewhere. Time travel violates everything we believe about causality. The best time travel succeeds by hiding the trick.

Woody Allen deployed a version of the same paradox in his 2011 movie, Midnight in Paris. His hero travels back to the 1920s and tries to give the young Luis Buñuel a movie idea. Of course, the idea is Buñuel’s own 1962 film, The Exterminating Angel. Allen breaks the loop with a joke.

Gil: Oh, Mr. Buñuel, I had a nice idea for a movie for you.

Buñuel: Yes?

Gil: Yeah, a group of people attend a very formal dinner party and at the end of dinner when they try to leave the room, they can’t…. And because they’re forced to stay together the veneer of civilization quickly fades away and what you’re left with is who they really are—animals.

Buñuel: But I don’t get it. Why don’t they just walk out of the room?

It’s a message from the future yet again. Imperfectly received.

No one, not even the most devout of physicists, behaves as though their life is predetermined. We study the menus and make our choices. If we knew—really knew—that the future was settled and our choices illusory, how would we live? Could we do that? What would it feel like?

Louise is about to find out. What will she do when Ian asks—as we know he will—“Do you want to make a baby?” There’s not much worse than a child’s death. It’s what the word “untimely” was made for. At least in real life the grief comes after the fact. A lifetime of memories is instantly shrouded in a veil of pain. For Louise, grief is part of the story from the beginning. The pain must color not only memory but also the experience of each day, each moment.

Nothing about time will be the same. “It won’t have been that long since you enjoyed going shopping with me,” she says; “it will forever astonish me how quickly you grow out of one phase and enter another. Living with you will be like aiming for a moving target; you’ll always be further along than I expect.”

At some point, too, we realize that she is going to tell Hannah’s father what she knows, namely that their daughter will die, and that will be a mistake. He will not be able to handle it. But she will find a way.

For us ordinary mortals, the day-to-day experience of a preordained future is almost unimaginable, but Chiang’s story does imagine it. This is where the movie can’t quite follow, for all its vividness.

He offers another paradox—as he says, a Borgesian parable. Let’s say you get to see “the Book of Ages, the chronicle that records every event, past and future.” You flip through it until you find the page on which, it says, you are flipping through the Book of Ages looking for this very page, and then you read ahead, and decide to act contrary to what is written. Can you do that? Logically, no. If you accept the premise, the story is unchanging. Knowledge of the future trumps free will. And maybe that’s all right. “What if the experience of knowing the future changed a person,” Louise muses. “What if it evoked a sense of urgency, a sense of obligation to act precisely as she knew she would?”

She can be comfortable with her new way of seeing. It’s like the photon fulfilling Fermat’s principle of least time. We can view its path sequentially, one thing after another, or we can view it from above, a whole, all at once. “Two very different interpretations,” she sees:

The physical universe was a language with a perfectly ambiguous grammar. Every physical event was an utterance that could be parsed in two entirely different ways, one causal and the other teleological.

In the same way, language can be seen as purposeful and informative, or it can be seen as “performative.”

“Now that I know the future, I would never act contrary to that future, including telling others what I know,” says Louise. “Those who know the future don’t talk about it. Those who’ve read the Book of Ages never admit to it.”

So, as she comes to understand her gift, she feels like a celebrant performing a ritual recitation. Or an actor reading her lines, following a script in every conversation. The rest of us don’t know we’re following the script. Are we, too, trapped? Enacting destiny? The only alternative is Woody Allen’s version of Buñuel: just walk out of the room.

The post Remembering the Future first appeared on James Gleick.

December 28, 2023

Free Will—Yea or Nay?

A neuroscientist and geneticist sets out to rescue the beleaguered concept from its many deniers—including some famous physicists. (Kevin J. Mitchell, Free Agents: How Evolution Gave Us Free Will, Princeton University Press.)

“We make decisions, we choose, we act. These are the fundamental truths of our existence and absolutely the most basic phenomenology of our lives. If science seems to be suggesting otherwise, the correct response is not to throw our hands up …”

Is he right? I say yes.

The post Free Will—Yea or Nay? first appeared on James Gleick.

December 19, 2023

Twitter, We Hardly Knew Ye

It took so little time for Elon Musk to obliterate Twitter and create a place of hatred and horror. He turned something valuable into a megaphone for neo-Nazis, white supremacists, anti-Semites, gay-bashers, and anyone else momentarily serving his scattershot right-wing agenda.

With the entity formerly known as Twitter vanishing in the rearview mirror, I offer two articles from the early days, when we wondered what it was and what it might become. A global conversation? A mosaic of communities and interests? Perhaps you remember.

From 2013: A Vast Confusion

From 2015: Let Twitter Be Twitter

Now I hope the open social web (sometimes thought of as Mastodon or the Fediverse) will rise to become what Twitter hoped to be, free of advertising, unwelcoming of trolls. That’s how it seems so far: I’m there as @JamesGleick@zirk.us.

The post Twitter, We Hardly Knew Ye first appeared on James Gleick.

December 18, 2023

Now You See Me, Now You Don’t

Invisibility: The History and Science of How Not to Be Seen

by Gregory J. Gbur

Yale University Press, 280 pp., $30.00

Transparency: The Material History of an Idea

by Daniel Jütte

Yale University Press, 502 pp., $45.00

Some twenty years ago the radio program This American Life asked listeners which of two superpowers they would choose: flight or invisibility. These are “two of the superpowers which have fascinated humans since antiquity,” said the host, Ira Glass. It was a test of character and a probe of the zeitgeist. The humorist and actor John Hodgman explained that he had been asking people this question for years at meetings and dinner parties, and that their choice revealed primal desires and unconscious fears.

He was disappointed that no one wanted to use their superpower to fight crime.

People who chose invisibility imagined themselves lurking, eavesdropping, and peeping. They were sneaky. “I think actually,” one woman said,

if everybody were being perfectly honest with you, they would tell you the truth, which is that they all want to be invisible so that they can shoplift, get into movies for free, go to exotic places on airplanes without paying for airline tickets, and watch celebrities have sex.

They want to see without being seen.

To fly is heroic, à la Superman. To vanish is antiheroic. Still, we crave invisibility in response to a growing sense of ubiquitous surveillance: our images captured and displayed everywhere, our inner souls turned out for all to see. “Transparency” is a watchword and a virtue—so we are told—and the desire for invisibility might be a natural reaction.

Over the past two decades, scientists studying optics have considered invisibility not just as a fantasy but as a practical possibility. We know about stealth aircraft, aspirationally invisible to radar. One automaker is now offering a “stealth” paint option—“a dark, enigmatic look,” for “an entirely new personality.” Gregory J. Gbur, an optical physicist at the University of North Carolina, has made invisibility something of a hobby. It informs his research, and he collects headlines: “Invisibility Cloaks Are in Sight”; “Researchers Create Functional Invisibility Cloak Using ‘Mirage Effect’”; “Scientists Invent Harry Potter’s Invisibility Cloak—Sort Of.” His new book, Invisibility, explores the phenomenon as a catalyst for research as well as for science fiction—because across several centuries the science of light and the fiction of invisibility developed side by side, each inspiring the other.

Harry Potter has his cloak, Frodo has his ring, James Bond has a car, Wonder Woman has an airplane. They are mere newcomers to the art of vanishing at will. In ancient mythology Perseus, Athena, and Hermes took turns donning the helm of invisibility, aka the Cap of Hades, when they needed to evade the sight of their enemies. For the same reason, organisms like chameleons and octopi have evolved camouflage skills. Gbur takes his subtitle from the famous Monty Python sketch “How Not to Be Seen,” in which a series of people hide in bushes, leaf piles, and a water barrel before being shot or blown up. The desire to be invisible seems deeply embedded in our psyches. Yet it’s not obvious how a scientist ought to define it.

“The word ‘invisible,’” Gbur writes, “is simultaneously very suggestive, conjuring a specific image (or lack thereof) in a person’s mind, and very vague, in that it can mean many different things.” Everything is invisible in the dark; everyone else is invisible when you close your eyes. Bacteria and quasars are invisible by virtue of being small or far away, until we use microscopes and telescopes. Extending our vision, enabling us to see the unseen, has been a long-standing program in science, so scientists seeking invisibility might seem to inhabit a backwash from the main current.

Invisibility could mean perfect blackness or perfect transparency. A British company in 2014 announced a “super-black” coating called Vantablack that absorbs virtually all the light that strikes it. Jack London wrote a story in 1903, “The Shadow and the Flash,” in which a scientist paints himself perfectly black and battles a rival who achieves near-perfect transparency. Their conflict ends with a surreal and fatal game of tennis: “The blotch of shadow and the rainbow flashes, the dust rising from the invisible feet, the earth tearing up from beneath the straining foot-grips.”

~

In an odd bit of serendipity, Yale University Press has simultaneously published Transparency: The Material History of an Idea by Daniel Jütte. Transparency is invisibility’s obverse. It makes visible what would otherwise be hidden. With impressive detail and wide-ranging erudition, Jütte charts the history of a single material, glass, as a product of human ingenuity developed across centuries, beginning in Mesopotamia in the third millennium BCE. In Roman times the story of glass became the story of windows. “Window views and worldviews are more closely entwined than we might assume,” Jütte writes. As a technology for letting us observe the outside from the inside—and vice versa—the window calls attention to the act of seeing. It frames our vision and “epitomizes the idea of looking at the world from a protected or otherwise privileged perspective.” It becomes a metaphor. We speak of windows onto the world and windows into the soul.

The history of architectural glass implicates the cultural meaning of light. For physicists, light on earth comes first from the sun. In religion, it first came from God. Culturally, it has symbolized divinity, inspiration, knowledge, and political power. Darkness, as in “the Dark Ages,” was its antithesis. Jütte emphasizes that medieval times, far from being dark, were when Christian churches drove the demand for architectural glass, at first usually colored and then, as the technology of glassmaking improved, colorless, clear, and more perfectly transparent.

Glass windows let the light in, a plain fact that becomes a metaphor: “For ye were sometimes darkness, but now are ye light in the Lord: walk as children of light.” In practical terms light meant safety. Jütte quotes Michel Foucault: “A fear haunted the latter half of the eighteenth century: the fear of darkened spaces, of the pall of gloom which prevents the full visibility of things, men, and truths.” Light-skinned people in Europe turned the vagaries of pigmentation into an ideology of genetic superiority. As a counter to the darkness, whiteness was idealized and light suggested enlightenment. Then industrialization made light an object of technology: oil lamps, gas lamps, and finally electrification—turning night into day, as people began to say.

For the natural philosophers of the scientific revolution, glass was a substance to be shaped into lenses and prisms, to investigate the mysteries of light as a building block of nature. Glass reflects light and refracts it, focuses it and splits it into the colors of the rainbow. As the developing science of optics made its way into public knowledge, it revived old dreams of invisibility. In 1859 an Irish American writer, Fitz James O’Brien, published a story in Harper’s Magazine that imagined an invisible monster haunting a boarding house. The creature attacks the narrator, Harry, in the dark, and when he turns on a gas light he sees, to his horror, “nothing! Not even an outline,—a vapor!” After a struggle involving ropes and poorly aimed blows, he and his friend Hammond finally overpower “the Thing,” as they call it. The strangeness leaves them terrified and confused, until they start to think scientifically. “Let us reason a little, Harry,” says Hammond.

Take a piece of pure glass. It is tangible and transparent. A certain chemical coarseness is all that prevents its being so entirely transparent as to be totally invisible. It is not theoretically impossible, mind you, to make a glass which shall not reflect a single ray of light.

Air, too, is felt but not seen. What if transparency is the natural state, and only a certain chemical coarseness makes things visible? No less than Isaac Newton, the first great pioneer of optical science, had speculated along those lines. He suggested that “the least parts of matter” are transparent in themselves, until light passing through them is reflected and refracted every which way. Glass loses its natural transparency—becomes opaque—when it is scratched or crushed to powder. Conversely, paper, woven of discrete fibers, can be made transparent by soaking it with oil of equal density, to smooth the passage of light.

As Gbur tells it, the quest for invisibility ran closely alongside the search for the least parts of matter, beginning with the recognition that everything consists of invisible particles surrounded by emptiness and bound together by forces of attraction. Ancient Hindu sages and Greek philosophers had suggested this, and Newton favored the idea, though the atomic view didn’t take hold until the nineteenth century, when John Dalton developed a theory of tiny particles, identical and interchangeable, as the elementary constituents of matter. This laid the groundwork for modern chemistry. Even then scientists were still speculating—using inference and guesswork to construct a theory of things too small to be seen directly—and fiction writers speculated with them.

The imaginary scientist in another O’Brien story is an explorer with a microscope. “I imagined depths beyond depths in nature,” he says. “I lay awake at night constructing imaginary microscopes of immeasurable power, with which I seemed to pierce through all the envelopes of matter down to its original atom.” We have those now: electron microscopes and scanning tunneling microscopes, which can resolve particles far smaller than the wavelengths of ordinary light.

Also driving the fascination with invisibility was the paradoxical discovery that light itself can be invisible. William Herschel, musician turned astronomer, realized in 1800 that the sun emits “invisible rays”—what we now understand as infrared and ultraviolet light, radiation at wavelengths too long and too short to be detected by the human eye. It turns out that the visible spectrum is pitifully narrow. Of the universe’s full electromagnetic splendor, our eyes perceive only a sliver.

The notion of invisible rays made other forms of invisibility all the more plausible. “The human eye is an imperfect instrument,” says the narrator of “The Damned Thing,” an 1893 story by Ambrose Bierce:

Its range is but a few octaves of the real “chromatic scale.” I am not mad; there are colors that we cannot see.

And, God help me! the Damned Thing is of such a color!

Of course, the Damned Thing is another invisible monster.

~

With the progress in optics came a growing understanding of the gulf between what we see and what is really there. Light does strange things on its way from the object to the eye, and the brain has to do its best—which is often not very good at all—to make sense of the signals being passed its way.

Whether as particles or waves or both, light rays interfere with one another, sometimes even canceling each other out. Interference patterns mix darkness with light—a mind-bending fact properly appreciated by Thomas Young, a medical doctor turned physicist, whose studies of the eye led him to the study of light itself. Young’s wave-based theory of interference, contradicting Newton’s particle-oriented (“corpuscular”) theory, provoked controversy and derision in the early 1800s. One contemporary explained why it was so counterintuitive:

Who would not be surprised to find darkness in the sun’s rays,—in points which the rays of the luminary freely reach; and who would imagine that any one could suppose that the darkness could be produced by light being added to light!

The relationship between light and darkness was not so simple.

It was the electricians—especially Michael Faraday and James Clerk Maxwell—who created a unified theory of light as nothing more or less than an oscillating wave of electricity and magnetism. A disturbance in the field. Maxwell’s theory brought together every natural form of luminance: lightning bolts and auroras, glowworms and fireflies, fluorescent jellyfish and bioluminescent fungi. It also predicted, as a matter of pure mathematics, all the invisible versions of electromagnetic radiation: radio waves (soon made in Heinrich Hertz’s laboratory in Karlsruhe), microwaves, and gamma rays. The sexiest were discovered and named by Wilhelm Röntgen in 1895: X-rays. Invisible themselves, X-rays penetrated solid matter and revealed what lay within. Röntgen made an image of the bones inside his wife’s hand. She said, “I have seen my death,” and he won the first Nobel Prize in physics.

We’re so accustomed to advanced medical imaging, from MRIs to PET scans, that it’s hard to grasp how powerfully X-rays affected the popular imagination. “Misinformation spread almost as quickly as news of the discovery itself,” Gbur writes. “If X-rays can see through anything, might people be able to use them to spy on their neighbors and see through their clothing?” (A similar fear arose about a decade ago when the American government installed full-body scanners at airports: invisible rays revealing our naked forms.) When Thomas Edison learned of X-rays, he confidently announced that they would allow the blind to see. “I can make a Röntgen ray that will enable me to see through the partition in this laboratory, and possibly through the brick walls,” he said. He was wrong, but the foundation had been laid for Superman’s “X-ray vision” and novelty-store X-ray spectacles, and also for the first great novel of invisibility, H.G. Wells’s The Invisible Man.

Wells’s first book, The Time Machine (1895), had been a sensation—a pseudoscientific fantasy that brought him instant success. The Invisible Man, published two years later, was almost as original. It drew straight from the headlines—“Röntgen vibrations” are part of the narrator’s bag of tricks—and the influence went both ways. Its readers included future scientists. It was “a turning point in the history of invisibility physics,” Gbur writes, “when the possibility of invisibility—and its dangers—entered the public consciousness, where it has remained to this day.”

As in The Time Machine, Wells dresses his story in an armor of plausible mumbo jumbo. His protagonist, a former medical student named Griffin who has turned to the study of optics, explains, “The whole subject is a network of riddles—a network with solutions glimmering elusively through.” Griffin is pondering the ways a body may absorb light or reflect it or refract it, when “suddenly—blindingly! I found a general principle of pigments and refraction—a formula, a geometrical expression involving four dimensions.” He makes a “gas engine” powered by “dynamos” and creates drugs that “decolourise blood.” We’re being conned, but generations of readers have been happy to go along. As for Griffin, he is euphoric: “I beheld, unclouded by doubt, a magnificent vision of all that invisibility might mean to a man—the mystery, the power, the freedom. Drawbacks I saw none.” What could go wrong?

First the invisible man feels exalted, free to do anything he wants, superior to mere mortals, like a “seeing man…in a city of the blind.” Unfortunately, to be invisible he has to be naked, and it’s winter in London. Practicalities begin to weigh on him. He is jostled in crowds and growled at by suspicious dogs. In his mind’s eye he becomes “a gaunt black figure” with a “strange sense of detachment.” Eating is a problem—think of undigested food making its way through the gastrointestinal tract.

The invisible man puts on clothes, wraps his face in bandages, and grows desperate and deranged. As Wells’s son Anthony West wrote, he becomes

an invisible madman, a person impenetrably concealed within his own special frame of private references, resentments, obsessions, and compulsions, and altogether set apart from the generality of mankind.

He has found that invisibility, far from being a superpower, is the ultimate in alienation. Nowadays every selfie-snapping Instagrammer and TikTokker seems to feel this instinctively.

No wonder Ralph Ellison chose this theme for his 1952 masterpiece, Invisible Man. His narrator is invisible because he is Black in white America. In the novel’s famous opening he declares:

I am an invisible man. No, I am not a spook like those who haunted Edgar Allan Poe; nor am I one of your Hollywood-movie ectoplasms. I am a man of substance, of flesh and bone, fiber and liquids—and I might even be said to possess a mind. I am invisible, understand, simply because people refuse to see me.

The invisible man is unnamed, marginalized, living literally underground, in an abandoned coal cellar illuminated by exactly 1,369 light bulbs. (He steals electricity from Monopolated Light & Power.) He listens on his record player to Louis Armstrong’s “What Did I Do to Be So Black and Blue,” which he describes as poetry of invisibility. Invisibility gives him an altered sense of time: an awareness of its nodes, an escape from strict tempo, a sense of being out of sync. Invisibility has its advantages, he tells us, but sometimes he begins to doubt his own existence. He feels like a phantom in someone else’s nightmare. “All life seen from the hole of invisibility is absurd,” he says.

Yet the quest for invisibility persists. Maxwell’s electromagnetic theory has been upgraded to quantum electrodynamics, which has sidestepped the question of whether light is a particle or a wave by embracing both views, combining them in one uneasy package. Quantum control of light provides communication and medicine with applications weirder than science fiction. Physicists manipulate light like wizards. Lasers make beams of coherent light that cut diamonds and blast kidney stones. Holograms manipulate interference patterns to create three-dimensional images. Optical fiber channels light to carry information far more efficiently than any electrical wire.

In 1975 Milton Kerker, an expert on the scattering of light by small particles, wrote what Gbur calls the first scientific paper about “a truly invisible object.” Kerker calculated that under certain circumstances the light striking an object could excite electrons so as to generate electromagnetic waves perfectly out of phase, rendering the object invisible. Alas, nothing seems to have come of this discovery. The research was funded in part by the Paint Research Institute, possibly in hopes of discovering invisibility paint.

The most persuasive progress toward true invisibility—the “game changer,” says Gbur—came in 2006, with strategies for making objects disappear by bending light around them. On astronomical scales, the gravitation of black holes warps space to alter the path of light, and physicists suggested designing optical materials—“metamaterials”—that could produce a similar effect. Every transparent substance has a refractive index, the measure of how much light is bent when it enters the material. A Ukrainian theorist, Victor Veselago, speculated that materials could be created with a negative refractive index and that such metamaterials would bend light in counterintuitive ways. “With the introduction of metamaterials,” Gbur writes, “researchers were now asking, ‘How can we make light do whatever we want it to?’” (Metamaterials are now transforming the design of lenses for smartphones and other applications.)

An English optical physicist, John Pendry, thought “it would be a good joke to show how to make objects invisible.” He proposed creating a metamaterial that could guide light around it “like water flowing around a rock in a river, so that the object inside it cannot be seen.”

My wife suggested that I [make] reference to someone called Harry Potter, of whom I had never heard but who apparently had something to do with cloaks. However, the joke was taken extremely seriously, and cloaking has since become a major theme in the metamaterials community.

When Science published Pendry’s paper in 2006, it generated a flurry of newspaper headlines of the sort Gbur treasures.

In 2011 Japanese researchers reported finding a chemical reagent that bleached biological tissue almost to a state of transparency, in an effort to reveal brain structures to their microscopes. They pursued basically the same approach as a fictional lab worker named Flack in an 1881 short story, “The Crystal Man,” by Edward Page Mitchell, using chemical solvents to clear pigmentation. But they were working with mouse embryos, and their methods don’t seem suitable for live humans.

It’s fair to say that scientists’ imaginations continue to run ahead of their practical success. Their computer simulations achieve better results than their experiments. The invisibility cloaks that work by bending light are mainly ad hoc. They’re limited in size—Baile Zhang, from Singapore, demonstrated an invisibility cloak that hid a pink Post-it note at a TED conference in 2013, and later reported having expanded it to hide goldfish in a tank and a cat—and they cast shadows or operate over a limited range of wavelengths. Even Gbur, who includes an appendix optimistically titled “How to Make Your Own Invisibility Device!” lets us know, somewhat wistfully, that the invisibility of our science-fictional dreams might remain forever impossible.

Biological evolution chose the wavelengths our eyes can see—from about 400 to 700 billionths of a meter—and it chose well. Those are light rays that pass through the atmosphere with a minimum of scattering and absorption but do not pass through solid matter in most of its forms. However we have manipulated visible light, at least so far, objects reflect it and cast shadows. Even transparency is rare. The people who have best learned how to bend light around objects to make them disappear are stage magicians, with mirrors. They have the advantage of stationary audiences, happy to be fooled.

Even when the idea of invisibility attracts us, we still fear the darkness. Ellison’s invisible man, in his windowless space underground, needs his army of light bulbs to keep the darkness at bay. “I doubt if there is a brighter spot in all New York than this hole of mine, and I do not exclude Broadway,” he says. Fear of the dark may be primitive and instinctual, but Jütte’s Transparency charts a change in attitudes in the West during the Enlightenment. “In previous periods of history, darkness was first and foremost a practical problem—an obstacle to the conduct of certain domestic and professional activities,” he writes. The Enlightenment gave it a moral coloration: darkness was associated with “cultural backwardness and social inferiority”—dungeons for criminals, hovels for the poor. Light was modern. It brought safety and health. When the early Massachusetts colonists built a courthouse in Boston in 1713, they took particular pride in its windows: “May the Judges always discern the Right…Let this large, transparent, costly Glass serve to oblige the Attorneys alway [sic] to set Things in a True light.”

Windows were prized as a luxury and a mark of civilization. One measure of their importance is that from 1696 to 1851 England imposed a window tax. Windows let people look out upon the landscape—ideally onto their gardens. Or the windows let people look in, which was another virtue, the antithesis of furtiveness. Jütte cites Jean-Jacques Rousseau as a champion of righteous transparency. His own heart, Rousseau said, was “transparent as crystal,” and he praised transparency in architecture: “I have always regarded as the worthiest of men that Roman who wanted his house to be built in such a way that whatever occurred within could be seen.” Continuing the metaphor to this day, transparency—in social relations, in government, in corporate practice—has come to be seen as an unalloyed good. Morality has combined with aesthetics to make glass the quintessential material of modern architecture.

Frank Lloyd Wright championed glass to let us “escape from the prettified cavern of our present domestic life as also from the cave of our past.” The picture window became a status symbol. Mies van der Rohe brought glass-faced towers to Chicago; under his influence, Philip Johnson designed his trademark Glass House in Connecticut; I.M. Pei shocked France by adding a glass pyramid to the Louvre. Le Corbusier hailed skyscrapers with “immense geometrical façades all of glass, and in them is reflected the blue glory of the sky.” They continue to rise in every city. Meta’s headquarters in Menlo Park, California, designed by Frank Gehry, is entirely transparent, the company boasts: “One can see through it from one end to the other.” In its center is the chairman, Mark Zuckerberg, his office encased in bulletproof glass. Everybody loves glass.

And yet. In the history of Western architecture, the paradigmatic glass building is the panopticon, designed by the English philosopher and reformer Jeremy Bentham in 1791. Long before walls and large windows of glass became feasible, he made transparency the “characteristic principle” of his plan. He explicitly associated transparency with good government. The panopticon was a marvel. But it was intended as a prison—a new national penitentiary for Britain. The inmates were to live in transparent rooms, visible from all sides and from above, always watched, never unseen. “There ought not any where be a single foot square,” Bentham wrote, “on which man or boy shall be able to plant himself, no not for a moment, under any assurance of not being observed.”

Jütte wants us to see that glass architecture, along with the dream of a transparent society, has “a nightmarish side”—that it is “an architecture of power.” It tends toward homogeneity and control. To see something is the first step toward subjugating it. “Tightly sealed windows keep the city’s smells at bay,” he writes, “and the development of soundproof glass has turned windows into highly effective barriers against the exterior soundscape.” A glass wall is still a wall.

The same applies to the vision of perfect transparency promised by Zuckerberg and the other purveyors of social media. We are told that a transparent society will replace stealth and secrecy with openness and accountability. But living in glass houses means that someone is always watching. The panopticon rises all around. Invisibility seems no longer to be an option.

[First published in The New York Review, Aug. 17, 2023]

December 20, 2020

Hawking Stephen Hawking

First published in the New York Review of Books, April 19, 2021.

Hawking Hawking: The Selling of a Scientific Celebrity

by Charles Seife

Basic Books, 388 pp., $30.00

The world’s first scientist-celebrity, Isaac Newton, was entombed in Westminster Abbey with high ceremony, alongside statesmen and royalty, under a monument ornately carved in white-and-gray marble, bearing a fulsome inscription in Latin: “Mortals rejoice that there has existed so great an ornament of the human race!” His fame had spread far across the European continent. In France the young Voltaire lionized him: “He is our Christopher Columbus. He has led us to a new world.” An outpouring of verse filled the popular gazettes (“Nature her self to him resigns the Field/From him her Secrets are no more conceal’d”). Medals bearing his likeness were struck in silver and bronze.