Evolution of Research: From Foundations to Frontier

In Memory of Pushpak Bhattacharyya, Computation For Indian Language Technology (CFILT) Lab, CSE at IIT Bombay and Indian Institute of Technology, Patna

Prologue

Every discipline carries within it an invisible tapestry: threads woven from questions posed, methods tested, insights harvested, and new frontiers charted. In natural language processing (NLP), the culture of inquiry grows like roots and branches, nurtured by each generation of scholars and then passed on. These traditions become the unspoken curriculum, the scaffolding behind breakthroughs years later. If we liken this to a silent transmission, like the wordless teaching attributed to Bodhidharma or the oral transmission of the Vedas from teacher to student, then one of these foundational threads is the study of opinion summarization.

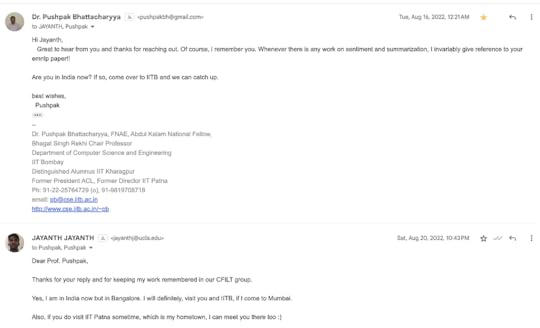

Opinion summarization is more than summarising text: it is distilling sentiment, aspect, and voice, the kaleidoscope of human evaluation. The journey from modelling to evaluation to generative systems in this domain offers a textbook example of how academic research evolves: from fundamental theory, to critique, to benchmark, to next-generation system. This article traces one such chain, and in doing so I honour Prof. Pushpak Bhattacharyya, whose work anchors the foundation of this chain.

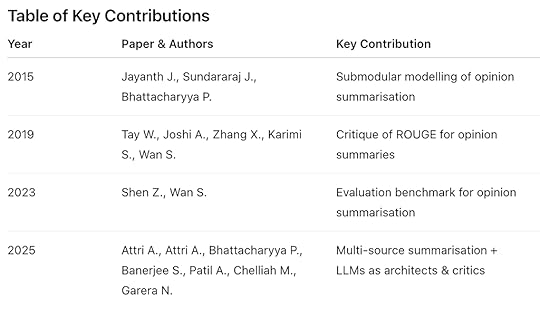

The Journey of an Idea

1. Foundations (2015)

In 2015, the paper Monotone Submodularity in Opinion Summaries (Jayanth Jayanth, Jayaprakash Sundararaj & Pushpak Bhattacharyya) laid a rigorous foundation: summarizing opinions is not simply picking frequent phrases; it is a problem of coverage, redundancy, and sentiment intensity. They framed it as a monotone submodular optimisation problem: adding a sentence never decreases the summary's subjectivity score (monotonicity), but the benefit of each additional sentence shrinks as the summary grows (submodularity, or diminishing returns). (Available from CSE IIT Bombay; published in EMNLP.) This work put the lens of optimisation theory onto opinion summarisation, setting a formal bedrock.
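To make the diminishing-returns idea concrete, here is a minimal sketch of greedy selection under a monotone submodular objective. It is not the 2015 paper's scoring function: the `coverage` objective (number of distinct aspects covered), the `SENTENCES` data, and the function names are all hypothetical, chosen only because set coverage is a standard example of a monotone submodular function. For such functions under a size budget, the greedy rule is known to reach at least a (1 - 1/e) fraction of the optimum.

```python
from typing import Dict, FrozenSet, List, Set

# Hypothetical toy data: each candidate sentence "covers" a set of opinion aspects.
SENTENCES: Dict[str, FrozenSet[str]] = {
    "s1": frozenset({"battery", "screen"}),
    "s2": frozenset({"battery"}),
    "s3": frozenset({"camera", "price"}),
    "s4": frozenset({"screen", "camera"}),
}


def coverage(selected: List[str]) -> int:
    """Number of distinct aspects covered: a monotone submodular set function."""
    covered: Set[str] = set()
    for sid in selected:
        covered |= SENTENCES[sid]
    return len(covered)


def marginal_gain(selected: List[str], candidate: str) -> int:
    """How much the objective grows if `candidate` is added to `selected`."""
    return coverage(selected + [candidate]) - coverage(selected)


def greedy_summary(budget: int) -> List[str]:
    """Pick up to `budget` sentences, always taking the largest marginal gain."""
    chosen: List[str] = []
    for _ in range(budget):
        remaining = [s for s in SENTENCES if s not in chosen]
        if not remaining:
            break
        best = max(remaining, key=lambda s: marginal_gain(chosen, s))
        if marginal_gain(chosen, best) == 0:
            break  # no remaining sentence adds anything new
        chosen.append(best)
    return chosen


summary = greedy_summary(budget=2)
```

In this toy setup the gain from adding `s4` shrinks as the summary grows (2 on an empty summary, 1 after `s1`, 0 after `s1` and `s3`), which is exactly the diminishing-returns property described above.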

2. Critical Evaluation (2019)

Fast-forward to 2019: the paper Red-faced ROUGE: Examining the Suitability of ROUGE for Opinion Summary Evaluation (Tay W; Joshi A; Zhang X; Karimi S; Wan S) challenged one of the core tools of summarisation research: ROUGE. They showed that for opinion summaries, where both what is said (aspect) and how it is said (polarity) matter, ROUGE's overlap-based scoring can fail to distinguish a summary with the correct sentiment from one with the opposite sentiment. (Paper: ACL Anthology.) This was an inflection point: the evaluation metric, not just the method, required scrutiny.
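A small sketch shows why word overlap can mislead on polarity. The function below is a simplified unigram-overlap F1 in the spirit of ROUGE-1 (whitespace tokens, no stemming or stopword handling), not the official ROUGE toolkit, and the three sentences are invented for illustration.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between two lower-cased, whitespace-tokenised strings."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped unigram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical example sentences.
reference = "the battery life is good"
same_polarity = "battery lasts long and works well"   # agrees with the reference
opposite_polarity = "the battery life is not good"    # contradicts the reference

score_same = rouge1_f1(same_polarity, reference)
score_opposite = rouge1_f1(opposite_polarity, reference)
print(f"same polarity:     {score_same:.3f}")
print(f"opposite polarity: {score_opposite:.3f}")
```

Here the contradicting sentence shares five of its six words with the reference, so it scores far higher than the paraphrase that actually agrees with it.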

3. Benchmarking Evaluation (2023)

In 2023, the work OpinSummEval: Revisiting Automated Evaluation for Opinion Summarization (Shen Z & Wan S) picked up the mantle of evaluation. They built datasets and metrics aligned with human judgement for opinion summarisation, implicitly motivated by the 2019 critique of ROUGE. (Paper: arXiv.) Thus the academic attention shifted from “how do we build summaries?” to “how do we evaluate them correctly?”
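One common way to check whether an automatic metric is "aligned with human judgement" is to measure the rank correlation between its scores and human ratings of the same summaries. The sketch below computes Kendall's tau (the tau-a variant, which ignores tie corrections) with the standard library. It is an illustration of the general idea, not the protocol used in OpinSummEval, and all numbers and metric names are made up.

```python
from itertools import combinations
from typing import Sequence


def kendall_tau(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Kendall's tau-a: (concordant pairs - discordant pairs) / all pairs."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        product = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if product > 0:
            concordant += 1   # both orderings agree on this pair
        elif product < 0:
            discordant += 1   # the orderings disagree on this pair
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical scores for four summaries, invented for illustration only.
human_ratings = [4.5, 2.0, 3.5, 1.0]
metric_a = [0.80, 0.30, 0.60, 0.10]  # orders the summaries exactly as humans do
metric_b = [0.40, 0.55, 0.35, 0.60]  # orders them mostly in reverse

tau_a = kendall_tau(human_ratings, metric_a)
tau_b = kendall_tau(human_ratings, metric_b)
print(f"metric_a vs humans: {tau_a:+.2f}")
print(f"metric_b vs humans: {tau_b:+.2f}")
```

A metric whose correlation is near +1 ranks summaries the way human judges do; a value near zero or below suggests the metric is measuring something else.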

4. System-level Innovation (2025)

Finally, the 2025 paper LLMs as Architects and Critics for Multi-Source Opinion Summarization (Attri A; Attri A; Bhattacharyya P; Banerjee S; Patil A; Chelliah M; Garera N) synthesises the prior threads into a next-generation system: multi-source input (reviews + metadata + QA) and large language models (LLMs) as both architects (generators) and critics (evaluators). The paper cites the 2023 evaluation benchmark explicitly and builds upon the evaluation concerns raised earlier. This brings the research arc full circle: modelling → evaluation critique → benchmark → system innovation.

Directed Acyclic Graph (DAG) of the Research Chain

[2015: Monotone Submodularity in Opinion Summaries]

↑

│ (influence and citation)

[2019: Red-faced ROUGE]

↑

│ (evaluation benchmark build)

[2023: OpinSummEval]

↑

│ (cited by & extended in system paper)

[2025: LLMs as Architects & Critics]

Graphviz representation

digraph Evolution {
  node [shape=box];
  P2015 [label="2015 – Jayanth et al.\nMonotone Submodularity"];
  P2019 [label="2019 – Tay et al.\nRed-faced ROUGE"];
  P2023 [label="2023 – Shen & Wan\nOpinSummEval"];
  P2025 [label="2025 – Attri et al.\nLLMs as Architects & Critics"];
  P2019 -> P2015;
  P2023 -> P2019;
  P2025 -> P2023;
}

Table of Key Contributions

Reflection: The Lifecycle of Contribution

Vision & Formalisation: The 2015 paper gave us a rigorous lens.

Scrutiny & Weaknesses: The 2019 paper exposed the weak link in evaluation metrics.

Infrastructure & Benchmarking: The 2023 work built the tools needed to measure progress correctly.

Integration & Scale: The 2025 work delivered the system that synthesises earlier theory, metrics, and technology into a modern paradigm.

This is how research advances: each stage feeds the next. Foundational ideas enable critique; critique drives benchmarking; benchmarking supports system innovation.

In Memory of Prof. Pushpak Bhattacharyya

Prof. Bhattacharyya’s work with Jayanth K. in the Computation For Indian Language Technology (CFILT) Lab, especially his contribution to the 2015 paper, holds a central place in this journey. His insights into opinion summarisation modelling continue to ripple through the field. This article stands as a tribute to his legacy. Thank you for shaping the path forward.

Epilogue

In the end, research is not just the publication of papers. It is the weaving of ideas across time, the lifting of standards, the challenging of assumptions, the building of new systems. The chain we charted, 2015 through 2025, is but one example of many. But for us, it is instructive: build on sound theory, be ready to critique the foundations, create robust measurement, then dare to build at system scale. That is the path to meaningful progress.

“May we all stand on the shoulders of past giants, reach further, and leave a foundation for future ones.” — Jayanth K.

References

Jayanth, K., Sundararaj, J., & Bhattacharyya, P. (2015). Monotone Submodularity in Opinion Summaries. In Proceedings of EMNLP 2015, 169–178. DOI:10.18653/v1/D15-1017.

Tay, W., Joshi, A., Zhang, X., Karimi, S., & Wan, S. (2019). Red-faced ROUGE: Examining the Suitability of ROUGE for Opinion Summary Evaluation. In Proceedings of ALTA 2019, 52–60.

Shen, Z., & Wan, S. (2023). OpinSummEval: Revisiting Automated Evaluation for Opinion Summarization. arXiv:2310.18122.

Attri, A., Attri, A., Bhattacharyya, P., Banerjee, S., Patil, A., Chelliah, M., & Garera, N. (2025). LLMs as Architects and Critics for Multi-Source Opinion Summarization. arXiv:2507.04751.

Evolution of Research: From Foundations to Frontier was originally published in Technopreneurial Treatises on Medium.