Embedded AI Apps: Now in ChatGPT

Last week I had a running list of user experience and technical hurdles that made using applications "within" AI models challenging. Then OpenAI's ChatGPT Apps announcement promised to remove most of them. Given how much AI is changing how apps are built and used, I thought it would be worth talking through these challenges and OpenAI's proposed solutions.

Whether you call it a chat app, a remote MCP server app, or an embedded app, when your AI application runs in ChatGPT, the capabilities of ChatGPT become capabilities of your app. ChatGPT can search the web, so can your app. ChatGPT can connect to Salesforce, so can your app. These all sound like great reasons to build an embedded AI app but... there's tradeoffs.

Embedded apps previously had to be added to Claude.ai or ChatGPT (in developer mode) by connecting a remote MCP server, for which the input field could be several clicks deep into a client's settings. That process turned app discovery into a brick wall for most people.

To address this, OpenAI announced a formal app submission and review process with quality standards. Yes, an app store. Get approved and installing your embedded app becomes a one-click action (pending privacy consent flows). No more manual server configs.

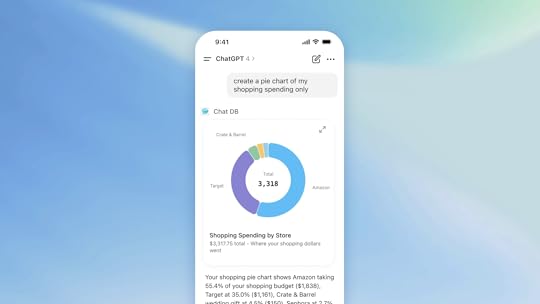

If you were able to add an embedded app to a chat client like Claude.ai or ChatGPT, using it was a mostly text-based affair. Embedded apps could not render images much less so, user interface controls. So people were left reading and typing.

Now, ChatGPT apps are able to render "React components that run inside an iframe" which not only enables inline images, maps, and videos but custom user interface controls as well. These iframes can also run in an expanded full screen mode giving apps more surface area for app-specific UI and in a picture-in-picture (PIP) mode for ongoing sessions.

This doesn't mean that embedded app discoverability problems are solved. People still need to either ask for apps by name in ChatGPT, access them by through the "+" button, or rely on the model's ability to decide if/when to use specific apps.

The back and forth between the server running an embedded app and the AI client also has room for improvement. Unlike desktop and mobile operating systems, ChatGPT doesn't (yet) support automatic background refresh, server-initiated notifications, or even passing files (only context) from the front end to a server. These capabilities are pretty fundamental to modern apps, so perhaps support isn't far away.

The tradeoffs involved in building apps for any platform have always been about distribution and technical capabilities or limitations. 800M weekly ChatGPT users is a compelling distribution opportunity and with ChatGPT Apps, a lot of embedded AI app user experience and technical issues have been addressed.

Is this enough to move MCP remote servers from a developer-only protocol to applications that feel like proper software? It's the same pattern from every platform shift: underlying technology first, then the user experience layer that makes it accessible. And there was definitely big steps forward on that last week.

Luke Wroblewski's Blog

- Luke Wroblewski's profile

- 86 followers