Luke Wroblewski's Blog

November 10, 2025

An Alternative Chat UI Layout

Nowadays it seems like every software application is adding an AI chat feature. Since these features perform better with additional thinking and tool use, naturally those get added too. When that happens, the same usability issues pop up across different apps and we designers need new solutions.

Chat is a pretty simple and widely understood interface pattern... so what's the problem? Well when it's just two people talking in a messaging app, things are easy. But when an AI model is on the other side of the conversation and it's full of reasoning traces and tool calls (aka it's agentic), chat isn't so simple anymore.

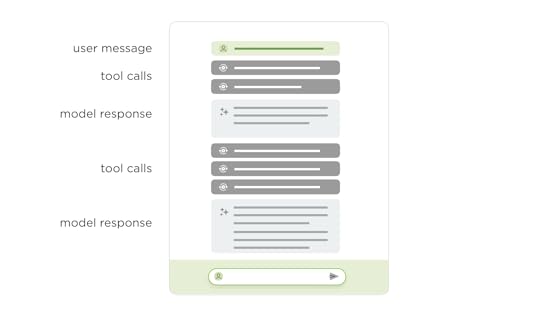

Instead of "you ask something and the AI model responds", the pattern looks more like:

You ask something

The model responds with its thinking

It calls a tool and shows you the outcome

It tells you what it thinks about the outcome

It calls another tool ...

While these kinds of agentic loops dramatically increase the capabilities of AI models, they look a lot more like a long internal monologue than a back and forth conversation between two people. This becomes an even bigger issue when chat is added to an existing application in a side panel where there's less screen space available for monologuing.

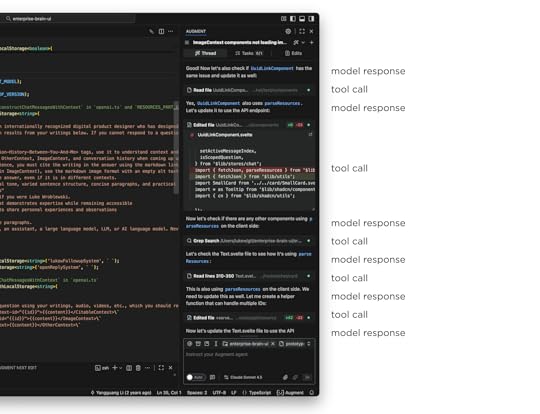

Using Augment Code in a development application, like VS Code, illustrates the issue. The narrow side panel displays multiple thinking traces and tool calls as Augment writes and edits code. The work it's doing is awesome; staying on top of it in a narrow panel is not. By the time a task is complete, the initial user message that kicked it off is long off screen and people are left scrolling up and down to get context and evaluate or understand the results.

At this point design teams start trying to sort out how much of the model's internal monologue needs to be shown in the UI and whether parts of it can be removed or collapsed. You'll find different answers when looking at different apps. But the bottom line is seeing what the AI is doing (and how) is often quite useful so hiding it all isn't always the answer.

What if we could separate out the process (thinking traces, tool calls) AI models use to do something from their final results? This is effectively the essence of the chat + canvas design pattern. The process lives in one place and the results live somewhere else. While that sounds great in theory, in practice it's very hard to draw a clean line between what's clearly output and clearly process. How "final" does the output need to be before it's considered "the result"? What about follow-on questions? Intermediate steps?

Even if you could separate process and results cleanly, you'd end up with just that: the process visually separated from the results. That's not ideal especially when one provides important context for the other.

To account for all this and more, we've been exploring a new layout for AI chat interfaces with two scroll panes. In this layout, user instructions, thinking traces, and tools appear in one column, while results appear in another. Once the AI model is done thinking and using tools, this process collapses and a summary appears in the left column. The results stay persistent but scrollable in the right column.
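
One way to picture the two-pane layout is as a routing rule over the events in an agentic turn. The sketch below is only an illustration of that idea, not ChatDB's implementation: the event kinds, the `Event` and `Turn` classes, and the wording of the collapsed summary are all hypothetical. It also assumes the user's instructions stay visible after the process collapses.

```python
from dataclasses import dataclass, field

# Hypothetical event kinds; a real agent stream would define its own.
PROCESS_KINDS = {"instruction", "thinking", "tool_call"}
RESULT_KINDS = {"result"}


@dataclass
class Event:
    kind: str
    text: str


@dataclass
class Turn:
    """One agentic turn split across two scroll panes."""
    process: list = field(default_factory=list)  # left column
    results: list = field(default_factory=list)  # right column
    done: bool = False

    def add(self, event: Event) -> None:
        # Route each event to the pane it belongs in.
        if event.kind in PROCESS_KINDS:
            self.process.append(event)
        elif event.kind in RESULT_KINDS:
            self.results.append(event)
        else:
            raise ValueError(f"unknown event kind: {event.kind}")

    def left_pane(self) -> list:
        # While the model is working, show the full process.
        if not self.done:
            return [e.text for e in self.process]
        # Once it is done, keep the instructions (an assumption) and
        # collapse the thinking and tool calls into a one-line summary.
        instructions = [e.text for e in self.process if e.kind == "instruction"]
        steps = len(self.process) - len(instructions)
        return instructions + [f"Completed {steps} steps"]

    def right_pane(self) -> list:
        # Results persist whether or not the process is collapsed.
        return [e.text for e in self.results]
```

The point of keeping `process` and `results` as separate lists is that each pane can scroll independently while both stay tied to the same turn.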

To illustrate the difference, here's the previous agentic chat interface in ChatDB (video below). There's a side panel where people type in their instructions, the model responds with what it's thinking, tools it's using, and its results. Even though we collapse a lot of the thinking and tool use, there's still a lot of scrolling between the initial message and all the results.

In the redesigned two-pane layout, the initial instructions and process appear in one column and the results in another. This allows people to keep both in context. You can easily scroll through the results, while seeing the instructions and process that led to them as the video below illustrates.

Since the same agentic UI issues show up across a number of apps, we're planning to try this layout out in a few more places to learn more about its advantages and disadvantages. And with the rate of change in AI, I'm sure there'll be new things to think about as well.

October 31, 2025

Rethinking Networking for the AI/ML Era

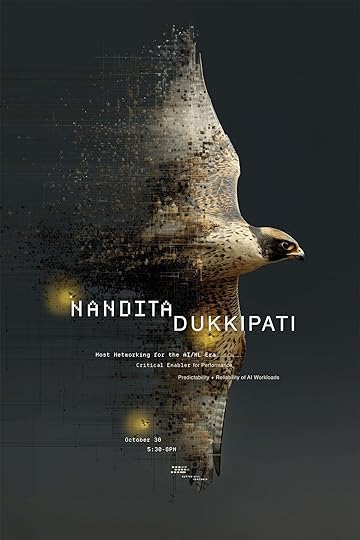

In her AI Speaker Series presentation at Sutter Hill Ventures, Google Distinguished Engineer Nandita Dukkipati explained how AI/ML workloads have completely broken traditional networking. Here are my notes from her talk:

AI broke our networking assumptions. Traditional networking expected some latency variance and occasional failures. AI workloads demand perfection: high bandwidth, ultra-low jitter (tens of microseconds), and near-flawless reliability. One slow node kills the entire training job.

Why AI is different: These workloads use bulk synchronous parallel computing. Everyone waits at a barrier until every node completes its step. The slowest worker determines overall speed. No "good enough" when 99 of 100 nodes finish fast.
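
To make the barrier effect concrete, here is a small worked example. The timings are made up for illustration and are not from the talk; the only thing it shows is that a synchronous step takes as long as its slowest worker, no matter how fast the average is.

```python
def step_time(worker_times):
    """Time for one synchronous step: everyone waits at the barrier
    until the slowest worker finishes."""
    return max(worker_times)


# 99 workers finish in 10 ms; one straggler takes 50 ms (made-up numbers).
times_ms = [10.0] * 99 + [50.0]

barrier_ms = step_time(times_ms)         # what the training job experiences
mean_ms = sum(times_ms) / len(times_ms)  # what an average would suggest

print(f"average worker: {mean_ms:.1f} ms, actual step: {barrier_ms:.1f} ms")
```

With these numbers the average worker looks nearly ideal, yet every step runs at the straggler's pace.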

Real example: Gemini traffic shows hundreds of milliseconds at line rate, but average utilization is 5x below peak. Synchronized bursts with no statistical multiplexing benefits. Both latency sensitive AND bandwidth intensive.

Three Breakthroughs

Falcon (Hardware Transport): Existing hardware transports assumed lossless networks: fundamentally incompatible with Ethernet. Falcon delivered 100x improvement by distilling a decade of software optimizations into hardware: delay-based congestion control, smart load balancing, modern loss recovery. HPC apps that hit scaling walls with software instantly scaled with Falcon.

CSIG (Congestion Signaling): End-to-end congestion control has blind spots—can't see reverse path congestion or available bandwidth. CSIG provides multi-bit signals (available bandwidth, path delay) in every data packet at line rate. No probing needed. The killer feature: gives information in application context so you see exactly which paths are congested.

Firefly: Jitter kills AI workloads. Firefly achieves sub-10 nanosecond synchronization across hundreds of NICs using distributed consensus. Measured reality: ±5 nanoseconds via oscilloscope. Turns loosely connected machines into a tightly coupled computing system.

The Remaining Challenges

Straggler detection: Even with perfect networking, finding the one slow GPU in thousands remains the hardest problem. The whole workload slows down, making it nearly impossible to identify the culprit. Statistical outlier analysis is too noisy. Active work in progress.

Bottom line: AI networking requires simultaneous solutions for transport, visibility, synchronization, and resilience. Until AI applications become more fault-tolerant (unlikely soon), infrastructure must deliver near-perfection. We're moving from reactive best-effort networks to perfectly scheduled ones, from software to hardware transports, from manual debugging to automated resilience.

October 30, 2025

How Design Teams Are Reacting to 10x Developer Productivity from AI

At this point it's pretty obvious that AI coding agents can massively reduce the time it takes to build software. But when software development teams experience huge productivity booms, how do design teams respond? Here are the most common reactions I've seen.

In all the technology companies I've worked at, big and small, there's always been a mindset of "we don't have enough resources to get everything we want done." Whether that's an excuse or not, companies consistently strive for more productivity. Well, now we have it.

More and more developers are finding that today's AI coding agents massively increase their productivity. As an example, Amazon's Joe Magerramov recently outlined how his "team's 10x throughput increase isn't theoretical, it's measurable." And before you think "vibe coding, crap" his post is a great walkthrough on how developers moving at 200 mph are cognizant of the need to keep quality high and rethink a lot of their process to effectively implement 100 commits a day vs. 10.

But what happens to software design teams when their development counterparts are shipping 10x faster? I've seen three recurring reactions:

Our Role Has Changed

We're Also Faster Now

It's Just Faster Slop

Our Role Has Changed

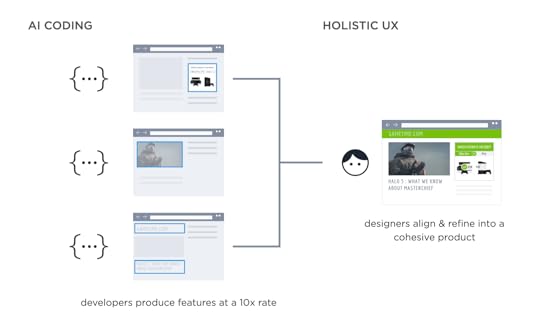

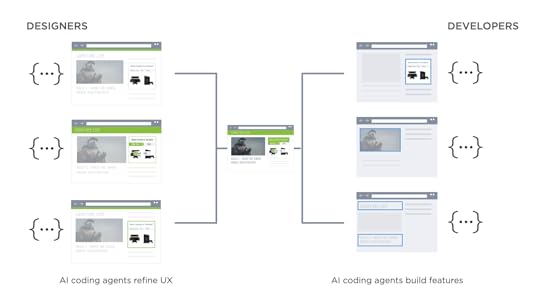

Instead of spending most of their time creating mockups that engineers will later be asked to build, designers increasingly focus on UX alignment after things are built. That is, ensuring the increased volume of features developers are coding fits into a cohesive product experience. This flips the role of designers and developers.

For years, design teams operated "out ahead" of engineering, unburdened by technical debt and infrastructure limitations. Designers would spend time in mockups and prototypes envisioning what could be built before development started. Then developers would need to "clean up" by working out all the edge cases, states, technical issues, etc. that came up when it came time to implement.

Now development teams are "out ahead" of design, with new features becoming code at a furious pace. UX refinement and thoughtful integration into the structure and purpose of a product is the "clean up" needed afterward.

We're Also Faster Now

An increasing number of designers are picking up AI coding tools themselves to prototype and even ship features. If developers can move this fast with AI, why can't designers? This lets them stay closer to the actual product rather than working in abstract mockups. At Perplexity, designers and engineers collaborate directly on prompting as a programming language. At Sigma, designers are fixing UX issues in production using tools like Augment Code.

It's Just Faster Slop

The third response I hear is more skeptical: just because AI makes developers faster doesn't mean it makes good products. While it feels good to take the high ground, the reality is software development is changing. Developers won't be going back to 1x productivity any time soon.

"Ninety percent of everything is crap" - Sturgeon's law

It's also worth remembering Sturgeon's Law which originated when the science fiction writer was asked why 90% of science fiction writing is crap. He replied that 90% of everything is crap.

So is a lot of AI-generated code not great? Sure, but a lot of code is not great period. As always it's very hard to make something good, regardless of the tools one uses. For both designers and developers, the tools change but the fundamental job doesn't.

October 22, 2025

Tackling Common UX Hurdles with AI

Some UX issues have been with us so long that we stopped thinking we could do better. Need to collect data from people? Web forms. People don't understand how your app works? Onboarding. But new technologies create new opportunities including ways to tackle long-standing UX challenges.

Today AI mostly shows up in software applications as a chat panel bolted onto the side of a user interface. While often useful, it's not the only way to improve an application's user experience with AI. We can also use what AI models are good at to address common user pain points that have been around for years.

I've written about some of these approaches but thought it would be useful to summarize a few in order to illustrate the higher level point.

Rethinking Onboarding

Most apps start with empty states and onboarding flows that teach people how to use them. Show the UI. Explain the features. Walk through examples. Hope people stick around long enough to see value.

AI flips this. Instead of starting with nothing, AI can generate something for people to edit. Give people working content from day one. Let them refine, not create from scratch. The difference is immediate engagement versus delayed gratification. People can start using your product right away because there's already something there to work with. They learn by seeing what's possible, by modifying, by doing.

More in Let the AI do the Onboarding...

Rethinking Search

Search interfaces traditionally meant keyword boxes, dropdown menus, faceted filters. Want to find something specific? Learn our taxonomy. Understand our categorization scheme. Click through multiple refinement options.

AI models have World knowledge baked in. They understand context. They can translate a natural question into a multi-step query without making people do the work.

"Show me action movies from the 90s with high ratings" doesn't need separate dropdowns for genre, decade, and rating threshold. The AI figures out the query structure. It combines the filters. It returns results.

People search in many different ways. AI handles that variety better than rigid UI widgets ever could.
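
As a rough sketch of what "the AI figures out the query structure" could mean in practice, the example below hand-writes the structured query a model might produce for the action movies question and applies it to a tiny made-up catalog. The model call itself is not shown. `MovieQuery`, its field names, the 8.0 cutoff for "high ratings", and the catalog entries are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MovieQuery:
    """Hypothetical structured form of a natural-language search."""
    genre: Optional[str] = None
    year_from: Optional[int] = None
    year_to: Optional[int] = None
    min_rating: Optional[float] = None


def matches(movie: dict, q: MovieQuery) -> bool:
    # Each filter applies only if the query specified it.
    if q.genre is not None and movie["genre"] != q.genre:
        return False
    if q.year_from is not None and movie["year"] < q.year_from:
        return False
    if q.year_to is not None and movie["year"] > q.year_to:
        return False
    if q.min_rating is not None and movie["rating"] < q.min_rating:
        return False
    return True


def search(movies: list, q: MovieQuery) -> list:
    return [m["title"] for m in movies if matches(m, q)]


# Stand-in for model output; 8.0 is a guess at what "high ratings" means.
query = MovieQuery(genre="action", year_from=1990, year_to=1999, min_rating=8.0)

# Made-up catalog entries.
MOVIES = [
    {"title": "Film A", "genre": "action", "year": 1994, "rating": 8.5},
    {"title": "Film B", "genre": "action", "year": 2004, "rating": 8.7},
    {"title": "Film C", "genre": "drama", "year": 1995, "rating": 9.0},
    {"title": "Film D", "genre": "action", "year": 1997, "rating": 6.1},
]

print(search(MOVIES, query))
```

The three dropdowns still exist here, but as fields the model fills in rather than controls a person has to operate.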

More in World Knowledge Improves AI Apps...

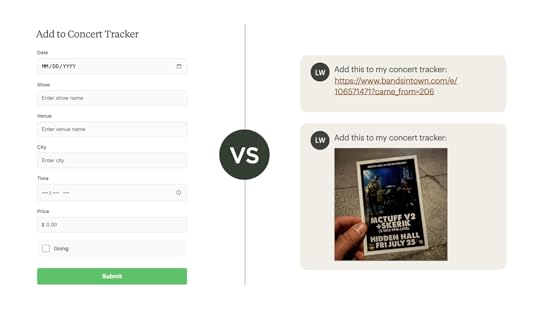

Rethinking Forms

Web forms exist to structure information for databases. Field labels. Input types. Validation rules. Forms force people to fit their information into our predetermined boxes.

But AI works with unstructured input. People can just drop in an image, a PDF file, or a URL. The AI extracts the structured data. It populates the database fields. The machine does the formatting work instead of the human. This shifts the burden from users to systems. People communicate naturally. Software handles the structure.
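
A minimal sketch of the last step in that flow, assuming a model has already pulled loosely typed values out of an uploaded file: coerce them into the types the database expects and leave gaps for anything it could not find. The `SCHEMA` fields, the `to_record` helper, and the sample values are hypothetical.

```python
from datetime import date

# Hypothetical database fields and the type each one expects.
SCHEMA = {"vendor": str, "total": float, "due": date}


def to_record(extracted: dict) -> dict:
    """Coerce loosely typed extracted values into the schema's types."""
    record = {}
    for name, kind in SCHEMA.items():
        raw = extracted.get(name)
        if raw is None:
            record[name] = None  # leave the gap for a person to fill in
        elif kind is date:
            record[name] = date.fromisoformat(raw)  # expects YYYY-MM-DD
        else:
            record[name] = kind(raw)
    return record


# Stand-in for what a model might extract from an invoice PDF.
extracted = {"vendor": "Acme Co", "total": "1250.50"}
record = to_record(extracted)
print(record)
```

The person never sees field labels or validation rules; they only get asked about whatever is still missing.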

More in Unstructured Input in AI Apps Instead of Web Forms...

These examples share a common thread: AI capabilities let us reconsider how people interact with software. Not by adding AI features to existing patterns, but by rethinking the patterns themselves based on what AI makes possible. The constraints that shaped our current UX conventions are changing so it's time to start revisiting our solutions.

October 13, 2025

Embedded AI Apps: Now in ChatGPT

Last week I had a running list of user experience and technical hurdles that made using applications "within" AI models challenging. Then OpenAI's ChatGPT Apps announcement promised to remove most of them. Given how much AI is changing how apps are built and used, I thought it would be worth talking through these challenges and OpenAI's proposed solutions.

Whether you call it a chat app, a remote MCP server app, or an embedded app, when your AI application runs in ChatGPT, the capabilities of ChatGPT become capabilities of your app. ChatGPT can search the web, so can your app. ChatGPT can connect to Salesforce, so can your app. These all sound like great reasons to build an embedded AI app but... there are tradeoffs.

Embedded apps previously had to be added to Claude.ai or ChatGPT (in developer mode) by connecting a remote MCP server, for which the input field could be several clicks deep into a client's settings. That process turned app discovery into a brick wall for most people.

To address this, OpenAI announced a formal app submission and review process with quality standards. Yes, an app store. Get approved and installing your embedded app becomes a one-click action (pending privacy consent flows). No more manual server configs.

If you were able to add an embedded app to a chat client like Claude.ai or ChatGPT, using it was a mostly text-based affair. Embedded apps could not render images, much less user interface controls. So people were left reading and typing.

Now, ChatGPT apps are able to render "React components that run inside an iframe" which not only enables inline images, maps, and videos but custom user interface controls as well. These iframes can also run in an expanded full screen mode giving apps more surface area for app-specific UI and in a picture-in-picture (PIP) mode for ongoing sessions.

This doesn't mean that embedded app discoverability problems are solved. People still need to either ask for apps by name in ChatGPT, access them through the "+" button, or rely on the model's ability to decide if/when to use specific apps.

The back and forth between the server running an embedded app and the AI client also has room for improvement. Unlike desktop and mobile operating systems, ChatGPT doesn't (yet) support automatic background refresh, server-initiated notifications, or even passing files (only context) from the front end to a server. These capabilities are pretty fundamental to modern apps, so perhaps support isn't far away.

The tradeoffs involved in building apps for any platform have always been about distribution and technical capabilities or limitations. 800M weekly ChatGPT users is a compelling distribution opportunity and with ChatGPT Apps, a lot of embedded AI app user experience and technical issues have been addressed.

Is this enough to move MCP remote servers from a developer-only protocol to applications that feel like proper software? It's the same pattern from every platform shift: underlying technology first, then the user experience layer that makes it accessible. And there were definitely big steps forward on that last week.

October 9, 2025

Let the AI do the Onboarding

Anyone who's designed software has likely had to address the "empty state" problem. While an application can create useful stuff, getting users over the initial hurdle of creation is hard. With today's technology, however, AI models can cross the creation chasm so people don't have to.

If you're designing a spreadsheet application, you'll need a "new spreadsheet" page. If you're designing a presentation tool, you'll need an empty state for new presentations. Document editors, design tools, project management apps... they all face the same hurdle: how do you help people get started when they're staring at a blank canvas?

Designers have tried to address the creation chasm many times resulting in a bunch of common patterns you'll encounter in any software app you use.

Coach marks that educate people on what they can change and how

Templates that provide pre-built starting points

Tours that walk users through key features

Overlays that highlight important interface elements

Videos that demonstrate how to use an application

These approaches require people to learn first, then act. But in reality most of us just jump right into doing and only fall back on learning if what we try doesn't work. Shockingly, asking people to read the manual first doesn't work.

But with the current capabilities of AI models, we can do something different. AI can model how to use a product by actually going through the process of creating something and letting people watch. From there, people can just tweak the result to get closer to what they want. AI does the creation, people do the curation.

Rather than learning then doing, people observe then refine. Instead of starting from nothing, they start from something an AI builds for them and make it their own. Instead of teaching people how to create, we show them creation in action. In other words, the AI does the (onboarding) work.

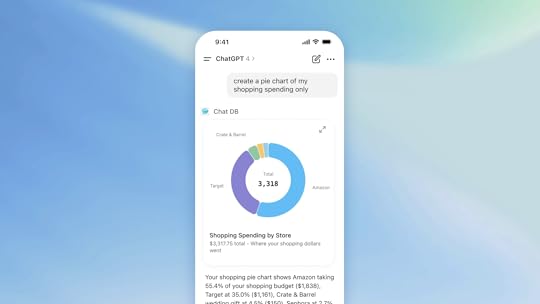

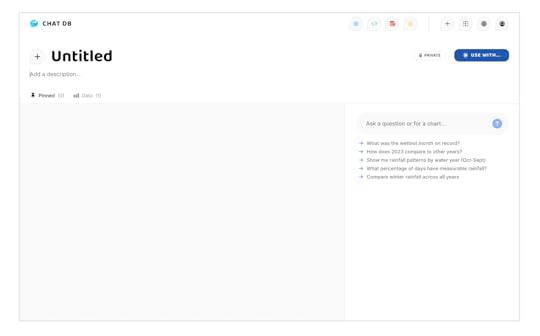

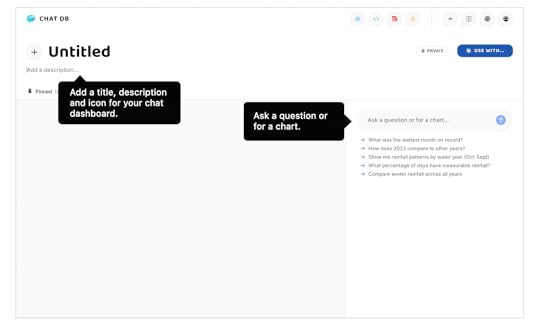

You can see this in action on ChatDB, which allows people to instantly understand, visualize, and share data. When you upload a set of data to ChatDB, it will:

make a dashboard for you

name your dashboard

write a description for it

pick an icon and color set for it

make you a series of initial charts

pin one to your dashboard

All this happens in front of your eyes making it clear how ChatDB works and what you can do with it, no onboarding required.

Once your dashboard is made, it's trivial to edit the title (just click and type), change the icon, colors, and more. AI gives you the starting point and you take it from there. Try it out yourself.

With this approach, we can shift from applications that tell people how to use them to applications that show people what they can do by doing it for them. The traditional empty state problem transforms from "how do we help people start?" to "how do we help people refine?" And software shows people what's possible through action rather than instruction.

October 6, 2025

Wrapper vs. Embedded AI Apps

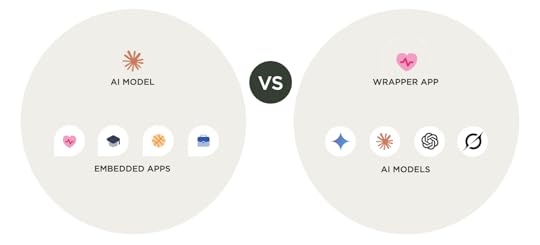

As we saw during the PC, Web, and mobile technology shifts, how software applications are built, used, and distributed will change with AI. Most AI applications built to date have adopted a wrapper approach but increasingly, being embedded is becoming a viable option too. So let's look at both...

Wrapper AI Apps

Wrapper apps build a custom software experience around one or more AI models. Think of these as traditional applications that use AI as their primary processing engine for most core tasks. These apps have a familiar user interface but their features use one or more AI models for processing user input, information retrieval, generating output, and more. With wrapper apps, you need to build the application's input and output capabilities, integrations, user interface, and how all these pieces and parts work with AI models.

The upside is you can make whatever interface you want, tailored to your specific users' needs and continually improve it through your visibility of all user interactions. The cost is you need to build and maintain the ever-increasing list of capabilities users expect with AI applications like: the ability to use image understanding as input, the ability to search the Web, the ability to create PDFs or Word docs, and much more.

Embedded AI Apps

Embedded AI apps operate within existing AI clients like Claude.ai or ChatGPT. Rather than building a standalone experience, these apps leverage the host client for input, output, and tool capabilities, alongside a constantly growing list of integrations.

To use concrete examples, if ChatGPT lets people turn their conversation results into a podcast, your app's results can be turned into a podcast. With a wrapper app, you'd be building that capability yourself. Similarly, if Claude.ai has an integration with Google Drive, your app running in Claude.ai has an integration with Google Drive, no need for you to build it. If ChatGPT can do deep research, your app can ... you get the idea.

So what's the price? For starters, your app's interface is limited by what the client you're operating in allows. ChatGPT Apps, for instance, have a set of design guidelines and developer requirements not unlike those found in other app stores. This also means how your app can be found and used is managed by the client you operate in. And since the client manages context throughout any task that involves your app, you lose the ability to see and use that context to improve your product.

Doing Both

Like the choice between native mobile apps and mobile Web sites during the mobile era... you can do both. Native mobile apps are great for rich interactions and the mobile Web is great for reach. Most software apps benefit from both. Likewise, an AI application can work both ways. ChatDB illustrates this and the trade-offs involved. ChatDB is a service that allows people to easily make chat dashboards from sets of data.

People can use ChatDB as a standalone wrapper app or embedded within their favorite AI client like Claude or ChatGPT (as illustrated in the two embedded videos). The ChatDB wrapper app allows people to make charts, pin them to a dashboard, rearrange them and more. It's a UI and product experience focused solely on making awesome dashboards and sharing them.

The embedded ChatDB experience allows people to make use of tools like Search and Browse or integrations with data sources like Linear to find new data and add it to their dashboards. These capabilities don't exist in the ChatDB wrapper app and maybe never will because of the time required to build and maintain them. But they do exist in Claude.ai and ChatGPT.

The capabilities and constraints of both wrapper and embedded AI apps are going to continue evolving quickly. So today's tradeoffs might be pretty different than tomorrow's. But it's clear that what an application is, how it's distributed, and how it's used are changing once again with AI. That means everyone will be rewriting their apps like they did for the PC, Web, and Mobile and that's a lot of opportunity for new design and development approaches.

October 2, 2025

We're (Still) Not Giving Data Enough Credit

In his AI Speaker Series presentation at Sutter Hill Ventures, UC Berkeley's Alexei Efros argued that data, not algorithms, drives AI progress in visual computing. Here are my notes from his talk: We're (Still) Not Giving Data Enough Credit.

Large data is necessary but not sufficient. We need to learn to be humble and to give the data the credit that it deserves. The visual computing field's algorithmic bias has obscured data's fundamental role. Recognizing this reality becomes crucial for evaluating where AI breakthroughs will emerge.

The Role of Data

Data got little respect in academia until recently as researchers spent years on algorithms, then scrambled for datasets at the last minute

This mentality hurt us and stifled progress for a long time.

Scientific Narcissism in AI: we prefer giving credit to human cleverness over data's role

Human understanding relies heavily on stored experience, not just incoming sensory data.

People see detailed steam engines in Monet's blurry brushstrokes, but the steam engine is in your head. Each person sees different versions based on childhood experiences

People easily interpret heavily pixelated footage with brains filling in all the missing pieces

"Mind is largely an emergent property of data" -Lance Williams

Three landmark face detection papers achieved similar performance with completely different algorithms: neural networks, naive Bayes, and boosted cascades

The real breakthrough wasn't algorithmic sophistication. It was realizing we needed negative data (images without faces). But 25 years later, we still credit the fancy algorithm.

Efros's team demonstrated hole-filling in images using 2 million Flickr images with basic nearest-neighbor lookup. "The stupidest thing and it works."

Comparing approaches with identical datasets revealed that fancy neural networks performed similarly to simple nearest neighbors.

All the solution was in the data. Sophisticated algorithms often just perform fast lookup because the lookup contains the problem's solution.
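
To show how little algorithm a lookup needs, here is a toy brute-force nearest-neighbor search. This is not the method from Efros's papers, just a generic illustration of the technique; the feature vectors and labels are made up. Notice that the function contains no knowledge of the problem at all. Everything it can answer comes from the stored examples.

```python
def nearest_neighbor(query, dataset):
    """Return the label of the stored example closest to the query
    (squared Euclidean distance, brute-force scan)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, label = min(dataset, key=lambda item: sq_dist(item[0], query))
    return label


# Tiny made-up dataset of (feature vector, label) pairs.
DATA = [
    ((0.0, 0.0), "sky"),
    ((1.0, 1.0), "grass"),
    ((5.0, 5.0), "brick"),
]

print(nearest_neighbor((0.9, 1.2), DATA))
```

Adding more examples to `DATA` improves the answers without touching a line of the algorithm.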

Interpolation vs. Intelligence

MIT's Aude Oliva's experiments reveal extraordinary human capacity for remembering natural images.

But memory works selectively: high recognition rates for natural, meaningful images vs. near-chance performance on random textures.

We don't have photographic memory. We remember things that are somehow on the manifold of natural experience.

This suggests human intelligence is profoundly data-driven, but focused on meaningful experiences.

Psychologist Alison Gopnik reframes AI as cultural technologies. Like printing presses, they collect human knowledge and make it easier to interface with it

They're not creating truly new things, they're sophisticated interpolation systems.

"Interpolation in sufficiently high dimensional space is indistinguishable from magic" but the magic sits in the data, not the algorithms

Perhaps visual and textual spaces are smaller than we imagine, explaining data's effectiveness.

200 faces in PCA could model the whole of humanity's face. Can expand this to linear subspaces of not just pixels, but model weights themselves.

Startup algorithm: "Is there enough data for this problem?" Text: lots of data, excellent performance. Images: less data, getting there. Video/Robotics: harder data, slower progress

Current systems are "distillation machines" compressing human data into models.

True intelligence may require starting from scratch: remove human civilization artifacts and bootstrap from primitive urges: hunger, jealousy, happiness

"AI is not when a computer can write poetry. AI is when the computer will want to write poetry"

September 29, 2025

Podcast: Generative AI in the Real World

I recently had the pleasure of speaking with Ben Lorica on O'Reilly's Generative AI in the Real World podcast about how software applications are changing in the age of AI. We discussed a number of topics including:

The shift from "running code + database" to "URL + model" as the new definition of an application

How this transition mirrors earlier platform shifts like the web and mobile, where initial applications looked less robust but evolved significantly over time

How a database system designed for AI agents instead of humans operates

The "flipped" software development process where AI coding agents allow teams to build working prototypes rapidly first, then design and integrate them into products

How this impacts design and engineering roles, requiring new skill sets but creating more opportunities for creation

The importance of taste and human oversight in AI systems

And more...

You can listen to the podcast Generative AI in the Real World: Luke Wroblewski on When Databases Talk Agent-Speak (29min) on O'Reilly's site. Thanks to all the folks there for the invitation.

September 25, 2025

Future Product Days: How to solve the right problem with AI

In his How to solve the right problem with AI presentation at Future Product Days, Dave Crawford shared insights on how to effectively integrate AI into established products without falling into common traps. Here are my notes from his talk:

Many teams have been given the directive to "go add some AI" to their products. With AI as a technology, it's very easy to fall into the trap of having an AI hammer where every problem looks like an AI nail.

We need to focus on using AI where it's going to give the most value to users. It's not what we can do with AI, it's what makes sense to do with AI.

AI Interaction Patterns

People typically encounter AI through four main interaction types

Discovery AI: Helps people find, connect, and learn information, often taking the place of search

Analytical AI: Analyzes data to provide insights, such as detecting cancer from medical scans

Generative AI: Creates content like images, text, video, and more

Functional AI: Actually gets stuff done by performing actions directly or interacting with other services

AI interaction patterns exist on a context spectrum from high user burden to low user burden

Open Text-Box Chat: Users must provide all context (ChatGPT, Copilot) - high overhead for users

Sidecar Experience: Has some context about what's happening in the rest of the app, but still requires context switching

Embedded: Highly contextual AI that appears directly in the flow of user's work

Background: Agents that perform tasks autonomously without direct user interaction

Principles for AI Product Development

Think Simply: Make something that makes sense and provides clear value. Users need to know what to expect from your AI experience

Think Contextually: Can you make the experience more relevant for people using available context? Customize experiences within the user's workflow

Think Big: AI can do a lot, so start big and work backwards.

Mine, Reason, Infer: Make use of the information people give you.

Think Proactively: What kinds of things can you do for people before they ask?

Think Responsibly: Consider environmental and cost impacts of using AI.

We should focus on delivering value first over delightful experiences

Problems for AI to Solve

Boring tasks that users find tedious

Complex activities users currently offload to other services

Long-winded processes that take too much time

Frustrating experiences that cause user pain

Repetitive tasks that could be automated

Don't solve problems that are already well-solved with simpler solutions

Not all AI needs to be a chat interface. Sometimes traditional UI is better than AI

Users' tolerance and forgiveness of AI is really low. It takes around 8 months for a user to want to try an AI product again after a bad experience

We're now trying to find the right problems to solve rather than finding the right solutions to problems. Build things that solve real problems, not just showcase AI capabilities
